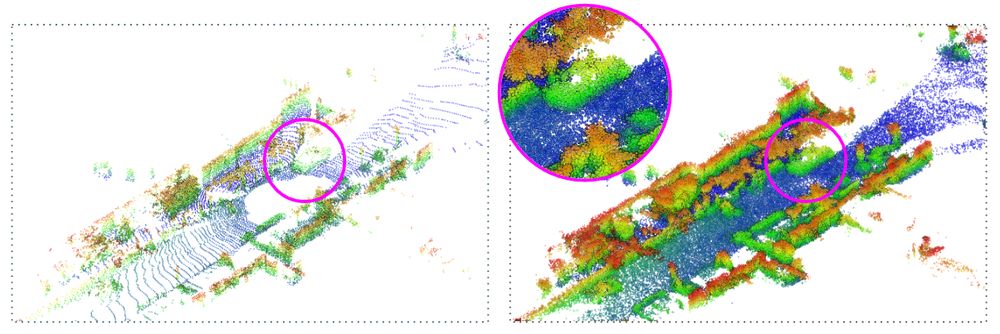

We'll present MuDDoS at BMVC: a method that boosts multimodal distillation for 3D semantic segmentation under domain shift.

📍 BMVC

🕚 Monday, Poster Session 1: Multimodal Learning (11:00–12:30)

📌 Hadfield Hall #859

Today, Corentin Sautier is defending his PhD on "Learning Actionable LiDAR Representations without Annotations".

Good luck! 🚀

His thesis «Learning Actionable LiDAR Representations w/o Annotations» covers the papers BEVContrast (learning self-sup LiDAR features), SLidR, ScaLR (distillation), UNIT and Alpine (solving tasks w/o labels).

Today, @bjoernmichele.bsky.social is defending his PhD on "Domain Adaptation for 3D Data"

Best of luck! 🚀

Papers popped up on different platforms, but mainly on ResearchGate with ~80 papers in just 3 weeks.

[1/]

Salma and Nermin put a tremendous amount of work into it, and it covers everything: the tasks, all the methods organized, datasets, numbers, challenges and opportunities.

Training and inference code available, along with the model checkpoint.

GitHub repo: github.com/astra-vision...

#IV2025

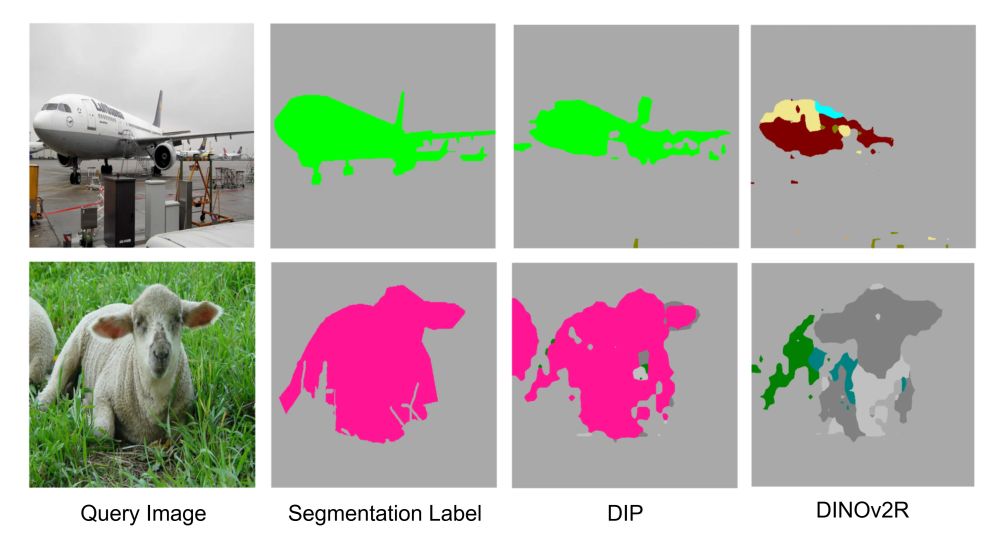

Introducing DIP: unsupervised post-training that enhances dense features in pretrained ViTs for dense in-context scene understanding

Below: Low-shot in-context semantic segmentation examples. DIP features outperform DINOv2!

Project page: astra-vision.github.io/LiDPM/

w/ @gillespuy.bsky.social, @alexandreboulch.bsky.social, Renaud Marlet, Raoul de Charette

Also, see our poster at 3pm in the Caravaggio room and AMA 😉

How far can we go with ImageNet for Text-to-Image generation? w. @arrijitghosh.bsky.social @lucasdegeorge.bsky.social @nicolasdufour.bsky.social @vickykalogeiton.bsky.social

TL;DR: Train a text-to-image model using 1000× less data in 200 GPU hrs!

📜https://arxiv.org/abs/2502.21318

🧵👇

These are 6-month projects that typically correspond to the end-of-study project in the French curriculum.

Probably more offers to come, so check back regularly.
