Main Link | Techmeme Permalink

Want a safer social media? Then we need more transparency from the platforms about the scale, cause, and nature of harms. It's a key way to change the companies' incentives.

www.techpolicy.press/making-socia...

Details in the 🧵 below...

www.science.org/content/arti...

🧵1/7

Details in the 🧵 below...

arstechnica.com/tech-policy/...

www.cigionline.org/publications...

www.thefai.org/posts/shapin...

I wrote the 📖 during a wild year as Jim Jordan subpoenaed & Stephen Miller sued me & my colleagues. Now it’s my turn to tell the story.

Preorders are open, and they really help! If you'd rather avoid AMZN, you can find small shops on my website.

amzn.to/4bbiDJD

www.consumerreports.org/stories?ques...

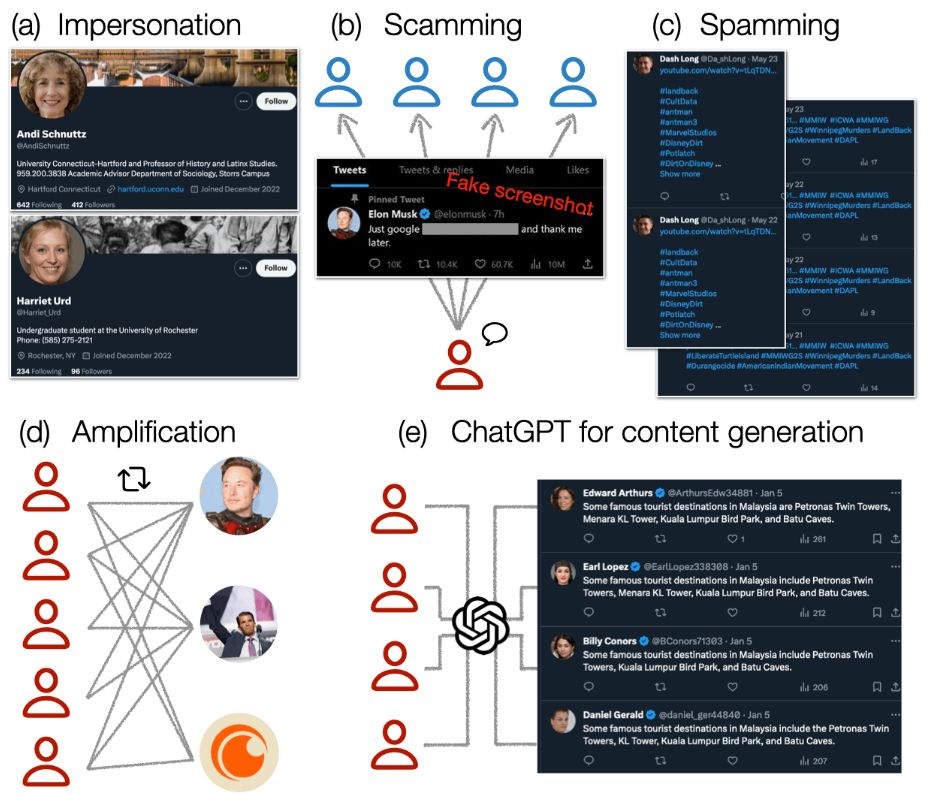

Characteristics and Prevalence of Fake Social Media Profiles with AI-generated Faces

doi.org/10.54501/jot...

tl;dr: At a minimum, an estimated 9-18k daily active X accounts use AI-generated profile faces to spread scams and spam, amplify coordinated messages, etc.
