👨🔬 RS DeepMind

Past:

👨🔬 R Midjourney 1y 🧑🎓 DPhil AIMS Uni of Oxford 4.5y

🧙♂️ RE DeepMind 1y 📺 SWE Google 3y 🎓 TUM

👤 @nwspk

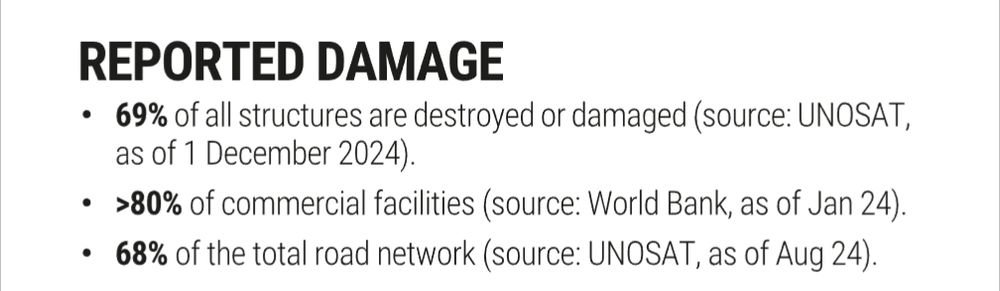

Compare this to, I dunno, the Dresden bomb raids:

25000 deaths over 4 days from just 3900t of bombs

Was that genocide?

en.m.wikipedia.org/wiki/Bombing...

How is this war in Gaza different to that war?

en.m.wikipedia.org/wiki/Falluja...

Of course, @GaryMarcus likes it very much:

garymarcus.substack.com/p/a-knockou...

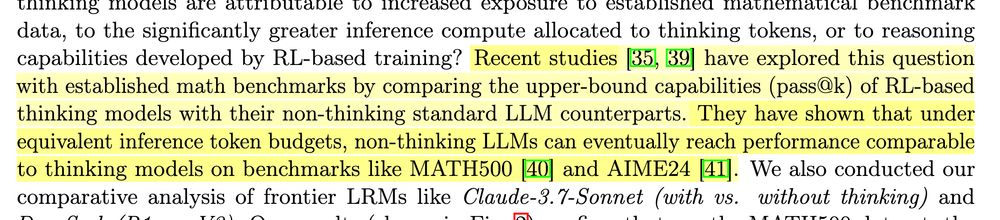

The ProRL paper by Nvidia has shown that RL-based models can truly learn new things - if you run RL long enough!

arxiv.org/abs/2505.24864

That models fail here is a lot more interesting and points towards areas for improvement.

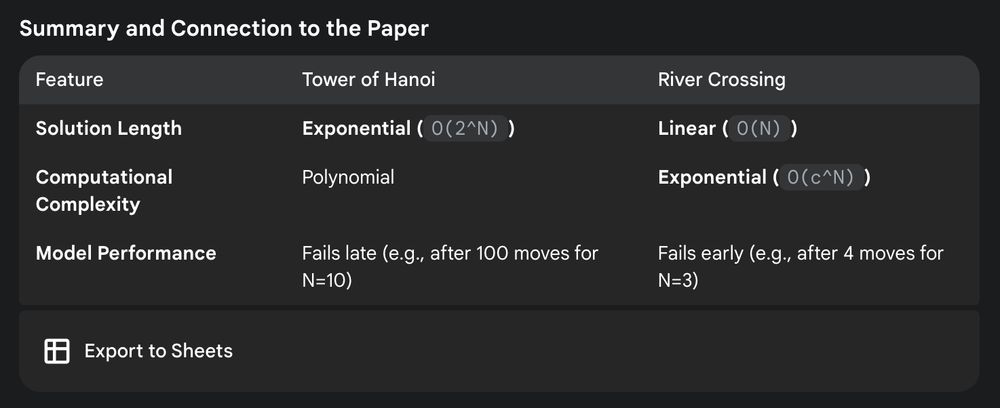

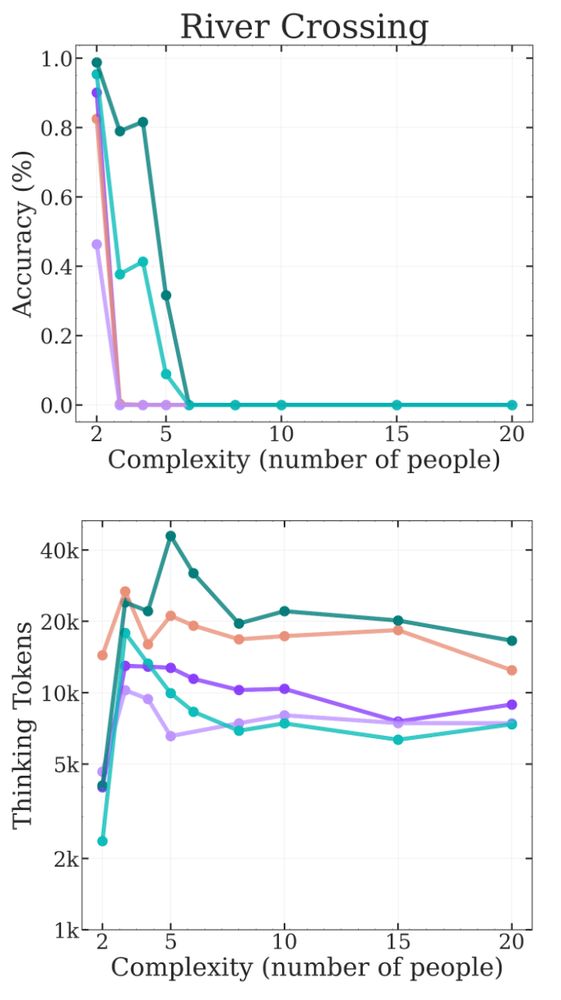

However, I don't think the other games hit the same issues. If we look at River Crossing it seems to hit high token counts very quickly:

x.com/scaling01/s...

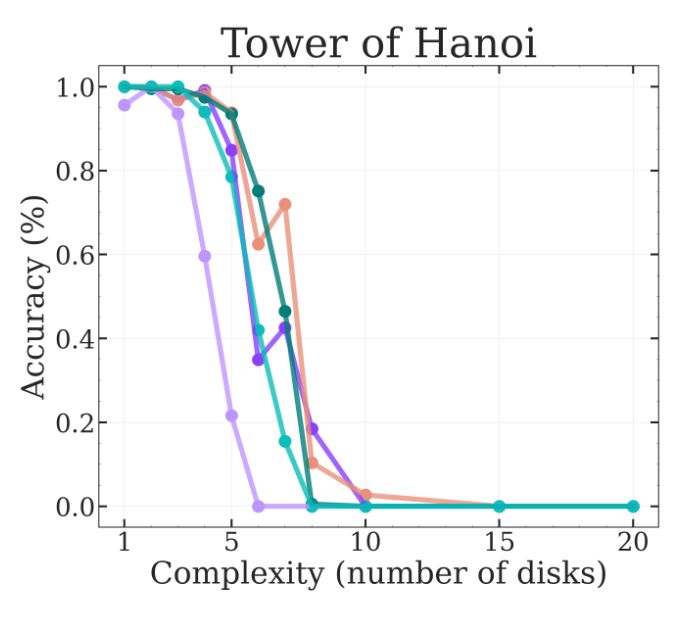

Apparently, the models say so at N=9 in ToH or refuse to write out the whole solution "by hand" and write code instead:

x.com/scaling01/s...

But again, sadly, this can be explained away for ToH (but only ToH!).

x.com/MFarajtabar...

For N >= 12 or 13 disks, the LRM could not output all the moves of the solution even if it wanted to, because the models can only output 64k tokens. Half of the ToH plots are essentially meaningless anyway.

x.com/scaling01/s...
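
To make the budget argument concrete, here is a rough sketch. The optimal ToH solution has 2^N - 1 moves; the 64k limit is from the thread, but `TOKENS_PER_MOVE = 10` is purely my assumption (the real cost depends on the output format and tokenizer), and reasoning tokens are ignored entirely.

```python
# Budget check for writing out a full Tower of Hanoi solution.
OUTPUT_TOKEN_LIMIT = 64_000  # "64k tokens" from the thread, read here as 64,000
TOKENS_PER_MOVE = 10         # ASSUMPTION: rough cost of one written-out move


def min_moves(n_disks: int) -> int:
    """Length of the optimal Tower of Hanoi solution for n_disks disks."""
    return 2 ** n_disks - 1


def fits_in_budget(
    n_disks: int,
    tokens_per_move: int = TOKENS_PER_MOVE,
    limit: int = OUTPUT_TOKEN_LIMIT,
) -> bool:
    """True if the move list alone would fit within the output limit."""
    return min_moves(n_disks) * tokens_per_move <= limit


for n in range(8, 16):
    tokens = min_moves(n) * TOKENS_PER_MOVE
    print(f"N={n:2d}  moves={min_moves(n):6d}  ~tokens={tokens:7d}  fits={fits_in_budget(n)}")
```

Under these assumptions N=12 still fits (4095 moves, about 41k tokens) and N=13 does not (8191 moves, about 82k tokens). A different tokens-per-move figure shifts the cutoff by a disk or so, which is consistent with the "12 or 13" hedge above.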

It just happens because the correct solution is so long and is not allowed to contain any typos.

(IMO the paper should really consider dropping the ToH environment from their results.)

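p("all correct") = p^(2^N - 1)

where p is the chance of getting a single move right and 2^N - 1 is the number of moves in the optimal solution. p=0.999 matches the ToH results in the paper above.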
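
A minimal sketch of that toy model, assuming every move is an independent trial with the same success probability p (my reading of the formula, not necessarily how @scaling01 set it up). The function name and the range of N are my own choices.

```python
def p_all_correct(n_disks: int, p_move: float = 0.999) -> float:
    """Probability that every move of the optimal ToH solution is correct.

    ASSUMPTION: each of the 2^N - 1 moves is correct independently
    with probability p_move.
    """
    n_moves = 2 ** n_disks - 1
    return p_move ** n_moves


for n in range(1, 16):
    print(f"N={n:2d}  moves={2 ** n - 1:6d}  p(all correct)={p_all_correct(n):.4f}")
```

Because the exponent doubles with every extra disk, the success probability stays near 1 for small N and then collapses over just a few values of N, even though the per-move accuracy never changes.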

@scaling01 has a nice toy model that matches the paper results:
