👨‍🔬 RS DeepMind

Past:

👨‍🔬 R Midjourney 1y 🧑‍🎓 DPhil AIMS Uni of Oxford 4.5y

🧙‍♂️ RE DeepMind 1y 📺 SWE Google 3y 🎓 TUM

👤 @nwspk

How is this war in Gaza different to that war?

en.m.wikipedia.org/wiki/Falluja...

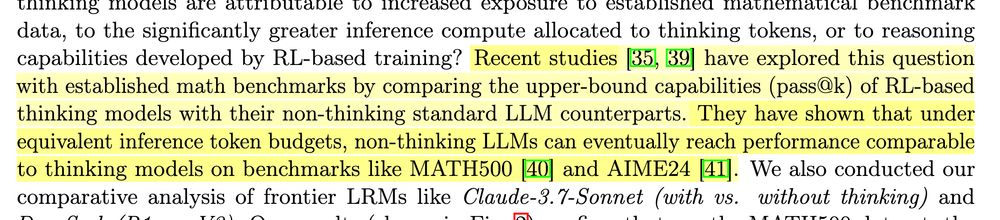

The ProRL paper by Nvidia has shown that RL-based models can truly learn new things, if you run RL long enough!

arxiv.org/abs/2505.24864

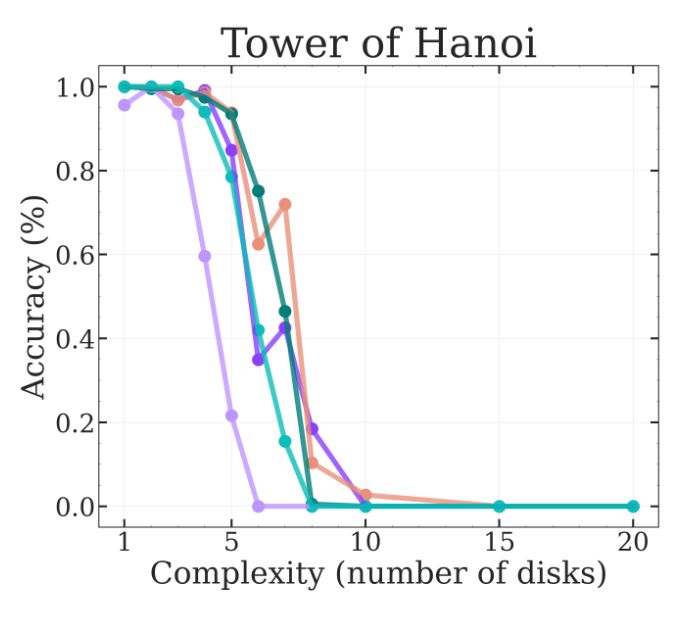

That models fail here is a lot more interesting and points towards areas for improvement.

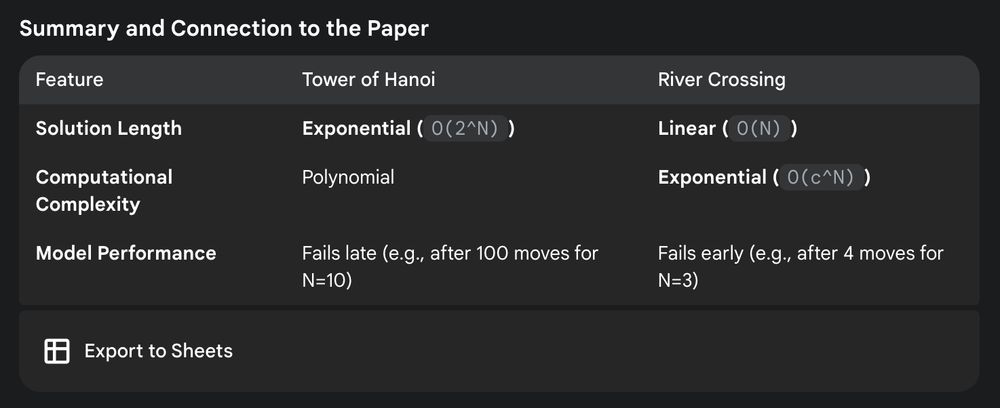

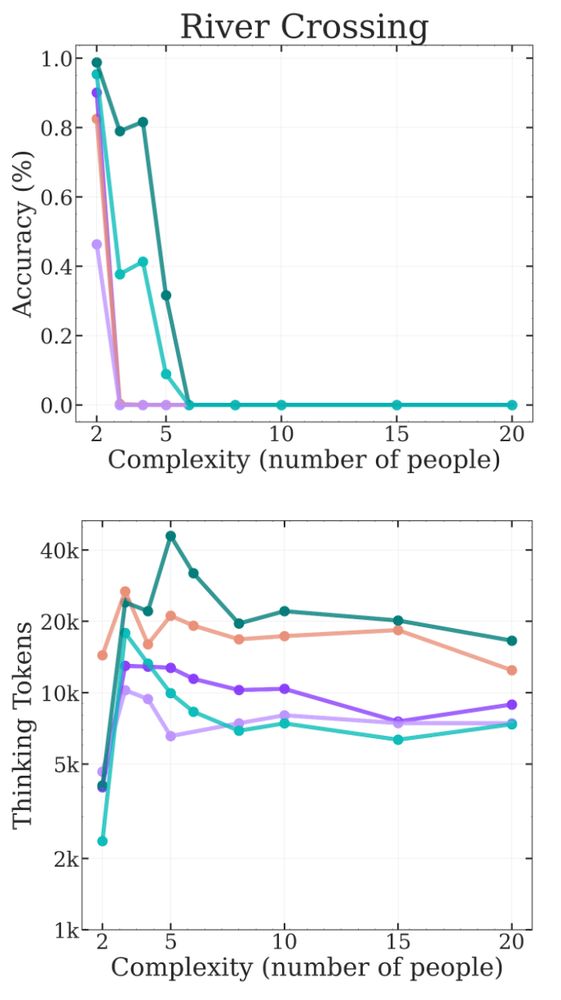

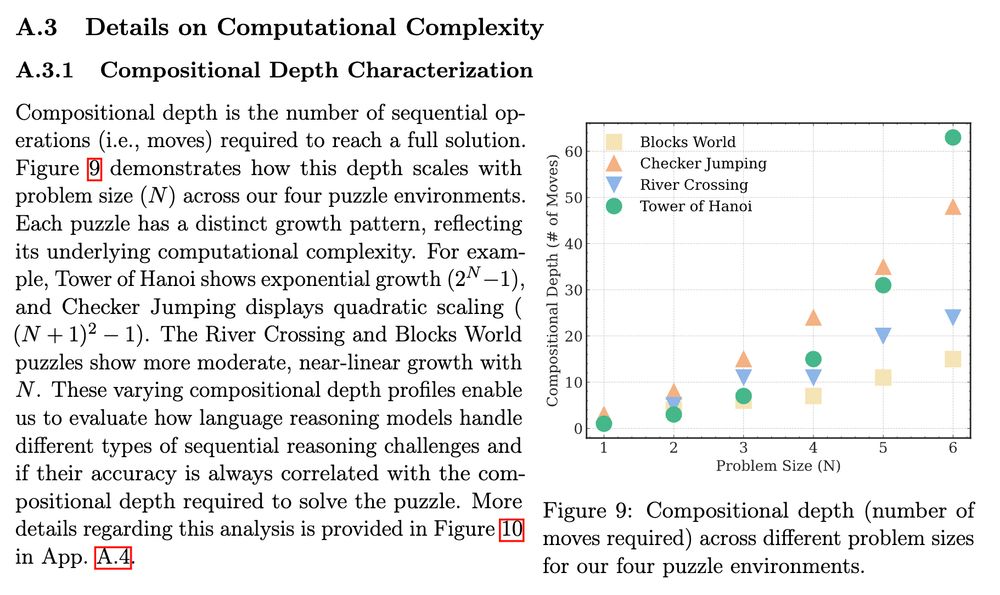

However, I don't think the other games hit the same issues. If we look at River Crossing, it seems to hit high token counts very quickly:

@scaling01 has a nice toy model that matches the paper results:

It finds some "surprising" behavior of large reasoning models (LRMs): they can perform 100 correct steps on the Tower of Hanoi, but only 4 steps on River Crossing.

x.com/MFarajtabar...
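
For a sense of scale: an optimal Tower of Hanoi solution with N disks takes 2^N - 1 moves, so the number of steps (and tokens) a model has to write out grows exponentially with N. A minimal recursive solver to check the counts (the standard textbook algorithm, not the toy model mentioned above):

```python
def hanoi_moves(n, src="A", aux="B", dst="C"):
    """Optimal move list for n disks as (disk, from_peg, to_peg) tuples."""
    if n == 0:
        return []
    # Park the n-1 smaller disks on the spare peg, move the largest disk,
    # then stack the n-1 smaller disks back on top of it.
    return (
        hanoi_moves(n - 1, src, dst, aux)
        + [(n, src, dst)]
        + hanoi_moves(n - 1, aux, src, dst)
    )


for n in (3, 5, 7, 10):
    print(n, len(hanoi_moves(n)))  # 3 7 / 5 31 / 7 127 / 10 1023
```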

Real-world scenarios introduce complexities: approximation errors, model dynamics, and training stochasticity can violate submodularity assumptions.

Practical methods must balance theoretical ideals with these realities.

8/11

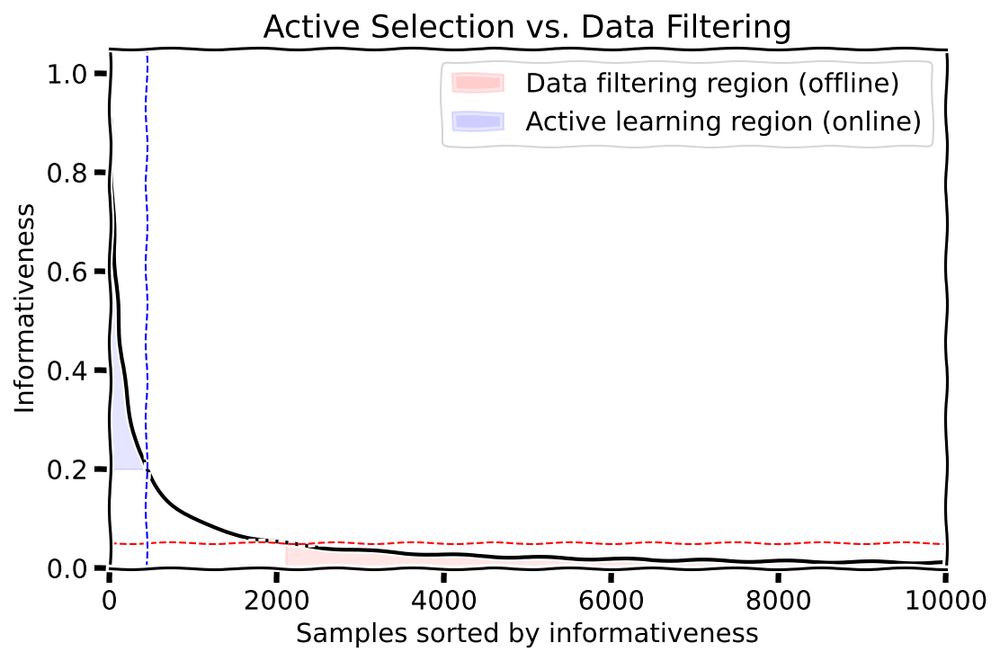

An active learning experiment on MNIST: a LeNet-5 model with Monte Carlo dropout, selecting samples by BALD score (expected information gain).

We can visualize how sample informativeness evolves dynamically during training (w/ EMA for visualization):

6/11
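
A minimal NumPy sketch of the BALD score, assuming you already have the softmax outputs of K stochastic forward passes with dropout left on (the LeNet-5 and the training loop are omitted; `bald_scores` and `acquire` are illustrative names). BALD is the entropy of the averaged prediction minus the average entropy of the individual predictions:

```python
import numpy as np


def bald_scores(probs, eps=1e-12):
    """BALD score (expected information gain about the weights) per pool point.

    probs: shape (K, N, C), softmax outputs from K forward passes with dropout
    left on (Monte Carlo dropout) for N pool points and C classes.
    """
    mean_probs = probs.mean(axis=0)  # (N, C) predictive distribution
    # Entropy of the averaged prediction: total predictive uncertainty.
    entropy_of_mean = -(mean_probs * np.log(mean_probs + eps)).sum(axis=-1)
    # Average entropy of the individual samples: the expected aleatoric part.
    mean_entropy = -(probs * np.log(probs + eps)).sum(axis=-1).mean(axis=0)
    return entropy_of_mean - mean_entropy


def acquire(probs, k):
    """Indices of the k pool points with the highest BALD scores."""
    return np.argsort(-bald_scores(probs))[:k]


# Two pool points, two dropout samples, two classes.
probs = np.array([
    [[1.0, 0.0], [0.5, 0.5]],  # dropout sample 1
    [[0.0, 1.0], [0.5, 0.5]],  # dropout sample 2
])
print(bald_scores(probs))  # point 0 ≈ 0.693 (ln 2), point 1 = 0.0
print(acquire(probs, k=1))  # [0]
```

Point 0 scores high because the dropout samples confidently disagree (uncertainty about the weights); point 1 scores zero because every sample is equally unsure (label noise that more data won't resolve).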

It confidently rejects samples early, knowing that initially uninformative samples rarely become valuable later. This makes early filtering safe and efficient.

5/11
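
One idealized way to see why early rejection can be safe (an assumption: the post does not commit to a specific utility): if a sample's value is its marginal gain under a submodular utility, that gain can only shrink as more data is selected, so a sample that adds little now cannot add more later. A minimal sketch with set coverage, a standard submodular function, on a made-up toy pool:

```python
def coverage(selected, covers):
    """Set-coverage utility: number of distinct concepts covered by the selected samples."""
    covered = set()
    for s in selected:
        covered |= covers[s]
    return len(covered)


def marginal_gain(x, selected, covers):
    """How much the utility grows when sample x is added to `selected` (a set)."""
    return coverage(selected | {x}, covers) - coverage(selected, covers)


# Hypothetical toy pool: the "concepts" each sample would teach the model.
covers = {"a": {1, 2, 3}, "b": {3, 4}, "c": {1, 2}, "d": {5}}

early, later = set(), {"a", "b"}  # selected set at the start vs. later in training
for x in ["c", "d"]:
    # Under submodularity the later gain is never larger than the early gain.
    print(x, marginal_gain(x, early, covers), marginal_gain(x, later, covers))
# c 2 0
# d 1 1
```

Real training only approximates this property, hence "rarely" rather than "never".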

It continuously re-evaluates sample informativeness during training. A sample that is highly informative initially might become redundant after the model trains on similar examples.

4/11
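
A toy sketch of continuous re-evaluation, under a loud assumption: instead of a trained model, informativeness is approximated by a hypothetical novelty score (distance to the nearest already-selected point). The whole pool is re-scored after every pick, so a near-duplicate that looks highly informative at first drops to almost nothing once its twin is selected:

```python
import numpy as np


def novelty(pool, selected_idx):
    """Hypothetical informativeness proxy: distance to the nearest selected point."""
    if not selected_idx:
        return np.full(len(pool), np.inf)  # nothing selected yet: everything is novel
    diffs = pool[:, None, :] - pool[selected_idx][None, :, :]
    return np.linalg.norm(diffs, axis=-1).min(axis=1)


def select_online(pool, budget):
    """Greedy selection that re-scores the whole pool after every pick."""
    selected, history = [0], []  # start from point 0
    while len(selected) < budget:
        scores = novelty(pool, selected)
        scores[selected] = -np.inf  # never re-pick a selected point
        history.append(scores.copy())
        selected.append(int(np.argmax(scores)))
    return selected, history


pool = np.array([[0.0, 0.0], [10.0, 0.0], [10.1, 0.0], [0.0, 10.0]])
selected, history = select_online(pool, budget=3)
print(selected)  # [0, 2, 3]
# Point 1 scores ~10.0 in round 1, then ~0.1 once its near-twin (point 2) is picked.
print(history[0][1], history[1][1])
```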

3/11

Data Filtering rejects samples upfront before training starts (offline).

This significantly impacts how we approach data selection, but why should we phrase this as "selection vs. rejection"?

2/11
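
For contrast, a minimal sketch of offline filtering, assuming some fixed per-sample score computed once before training (the scores and `filter_offline` are made up for illustration). Nothing is re-scored, so two near-duplicates with the same high score are both kept:

```python
import numpy as np


def filter_offline(scores, keep_fraction):
    """One-shot data filtering: rank once by a fixed score and keep the top fraction.

    Scores are computed before training and never revisited.
    """
    k = max(1, int(round(keep_fraction * len(scores))))
    keep = np.argsort(-scores, kind="stable")[:k]
    return np.sort(keep)


scores = np.array([0.9, 0.9, 0.2, 0.7])  # samples 0 and 1: hypothetical near-duplicates
print(filter_offline(scores, keep_fraction=0.5))  # [0 1]
```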

What is the fundamental difference between active learning and data filtering?

Well, obviously, the difference is that:

1/11

- Up to 94% reduction in labeling costs compared to baselines

- Consistently identifies the best model with significantly fewer labels

- Even in worst-case scenarios, selects models very close to the best

With thousands of pre-trained models available on platforms like @huggingface, how do we select the BEST one for a custom task when labeling data is expensive?

Blog post with details: www.blackhc.net/blog/2025/m...
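
The blog post has the actual method. As a generic illustration only (a common disagreement heuristic, not necessarily what the blog post does; all names and data are hypothetical), a sketch that spends labels where the candidate models disagree most and tracks each model's accuracy on those labels:

```python
import numpy as np


def vote_entropy(preds, num_classes):
    """Disagreement per pool point: entropy of the candidate models' vote distribution.

    preds: int array of shape (M, N), the class each of M models predicts on N points.
    """
    counts = np.stack([(preds == c).sum(axis=0) for c in range(num_classes)], axis=-1)
    p = counts / preds.shape[0]  # (N, num_classes)
    with np.errstate(divide="ignore", invalid="ignore"):
        return -np.where(p > 0, p * np.log(p), 0.0).sum(axis=-1)


def select_model(preds, oracle, num_classes, budget):
    """Spend `budget` labels on the most contested points, then return the best model.

    oracle(i) returns the true label of pool point i: this is the expensive step.
    """
    num_models, num_points = preds.shape
    correct = np.zeros(num_models)
    unlabeled = np.ones(num_points, dtype=bool)
    disagreement = vote_entropy(preds, num_classes)
    for _ in range(min(budget, num_points)):
        # Points where all models agree cannot change their ranking, so prefer others.
        i = int(np.argmax(np.where(unlabeled, disagreement, -np.inf)))
        unlabeled[i] = False
        correct += preds[:, i] == oracle(i)
    return int(np.argmax(correct)), correct


# Toy example: 3 hypothetical models, 6 pool points, 3 classes.
labels = np.array([0, 1, 1, 0, 2, 2])
preds = np.array([
    [0, 1, 1, 0, 2, 2],  # model 0: right on all 6
    [0, 1, 0, 0, 1, 0],  # model 1: right on 3
    [0, 1, 1, 1, 2, 0],  # model 2: right on 4
])
best, correct = select_model(preds, lambda i: labels[i], num_classes=3, budget=2)
print(best)  # 0, identified with 2 labels instead of 6
```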

With @pokanovic.bsky.social, Jannes Kasper, @thoefler.bsky.social, @arkrause.bsky.social, and @nmervegurel.bsky.social

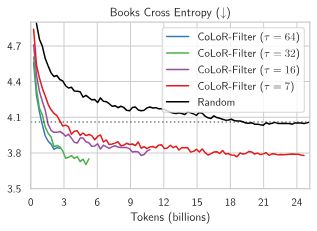

* Selection requires 2τn forward passes (fully parallelizable)

* 5× reduction in total compute when matching baseline performance

* Small auxiliary models (150M params) effectively select data for larger models (1.2B params)

* Continues improving even when selecting just 1 in 64 candidates from the training data
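
The bullets above don't define τ or n. One plausible reading, and it is an assumption here: n is the number of examples kept, τ is how many candidates are scored per kept example (so "1 in 64" means τ = 64), and scoring a candidate takes one forward pass through each of two small auxiliary models, giving 2τn passes. Under that reading, a sketch with placeholder loss functions standing in for the two models (the score and all names are hypothetical):

```python
import numpy as np


def select_top_n(candidates, score_fn, n, tau):
    """Keep the n highest-scoring of tau * n candidates, i.e. select 1 in tau."""
    assert len(candidates) == tau * n, "expects exactly tau * n candidates"
    # Each score is independent of the others, so this loop could run fully in parallel.
    scores = np.array([score_fn(x) for x in candidates])
    keep = np.argsort(-scores, kind="stable")[:n]
    return [candidates[i] for i in sorted(keep)]


passes = {"count": 0}  # counts simulated forward passes


def loss_model_a(x):
    """Placeholder for one forward pass of hypothetical auxiliary model A."""
    passes["count"] += 1
    return (x % 7) / 7.0


def loss_model_b(x):
    """Placeholder for one forward pass of hypothetical auxiliary model B."""
    passes["count"] += 1
    return (x % 5) / 5.0


def score(x):
    # Assumed score: how much lower the loss is under model B than under model A.
    return loss_model_a(x) - loss_model_b(x)


n, tau = 4, 64
chosen = select_top_n(list(range(tau * n)), score, n, tau)
print(len(chosen), passes["count"])  # 4 512  (2 * tau * n = 2 * 64 * 4)
```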

25× data reduction for Books domain adaptation

11× data reduction for downstream tasks

All while maintaining the same performance levels!