Blog → sakana.ai/ctm

Modern AI is powerful, but it's still distinct from human-like flexible intelligence. We believe neural timing is key. Our Continuous Thought Machine is built from the ground up to use neural dynamics as a powerful representation for intelligence.

huggingface.co/moonshotai/K...

✨ 7B

✨ 13M+ hours of pretraining data

✨ Novel hybrid input architecture

✨ Universal audio capabilities (ASR, AQA, AAC, SER, SEC/ASC, end-to-end conversation)

but first they release the paper describing generative reward modeling (GRM) via Self-Principled Critique Tuning (SPCT)

looking forward to DeepSeek-GRM!

arxiv.org/abs/2504.02495

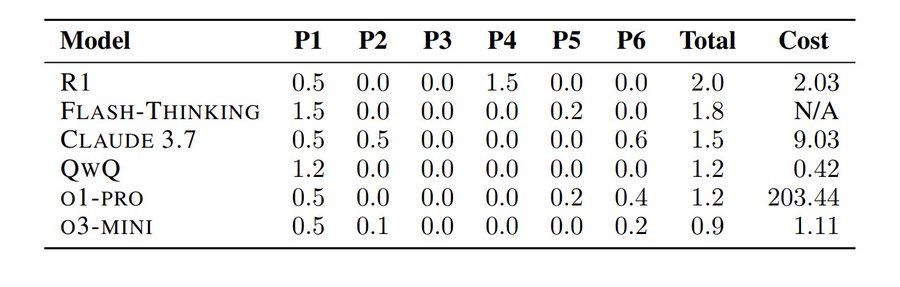

So naturally, some people put it to the test — hours after the 2025 US Math Olympiad problems were released.

The result: They all sucked!

Moonlight: 3B/16B MoE model trained with Muon on 5.7T tokens, advancing the Pareto frontier with better performance at fewer FLOPs.

huggingface.co/moonshotai

It uses four major design components: serverless abstraction and infrastructure, serving engine, scheduling algorithms, and scaling optimizations.

LLMs suck at long context.

This paper shows what I have seen in most deployments.

With longer contexts, performance degrades.

We are happy to "quietly" release our latest GRPO-trained Tulu 3.1 model, which is considerably better in MATH and GSM8K!

A particle filtering approach to improved inference without any training!

Check out probabilistic-inference-scaling.github.io

By Aisha Puri et al📈🤖

Joint MIT-CSAIL & RedHat
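
For readers who have not met particle filtering before, here is a minimal sketch of the general idea applied to step-by-step generation: keep several partial solutions ("particles"), extend each by one step, score them, and resample in proportion to the scores. It is not the authors' implementation. The names `particle_filter`, `resample`, `toy_extend`, and `toy_score` are hypothetical, the softmax weighting is an assumption, and the toy functions stand in for an LLM step and a reward model.

```python
import math
import random


def resample(particles, scores, rng, temperature=1.0):
    """Draw len(particles) particles with probability proportional to
    softmax(scores / temperature). The softmax weighting is an assumption."""
    top = max(scores)  # subtract the max for numerical stability
    weights = [math.exp((s - top) / temperature) for s in scores]
    return rng.choices(particles, weights=weights, k=len(particles))


def particle_filter(init, extend, score, n_particles=8, n_steps=5, seed=0):
    """Generic loop: extend every particle, score it, resample, repeat."""
    rng = random.Random(seed)
    particles = [init] * n_particles
    for _ in range(n_steps):
        particles = [extend(p, rng) for p in particles]
        scores = [score(p) for p in particles]
        particles = resample(particles, scores, rng)
    # Return the highest-scoring surviving particle.
    return max(particles, key=score)


# Toy stand-ins (hypothetical): a "partial solution" is a list of digits,
# one "generation step" appends a random digit, and the "reward" is the sum.
def toy_extend(partial, rng):
    return partial + [rng.randint(0, 9)]


def toy_score(partial):
    return float(sum(partial))


best = particle_filter([], toy_extend, toy_score)
```

In a real setting, `extend` would sample the next reasoning step from the model and `score` would query a reward model; no weights are updated anywhere in the loop, which is the sense in which it needs no training.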

A guide to post-training an LLM using GRPO. It's particularly effective for scaling test-time compute for extended reasoning, making it an ideal approach for solving complex tasks such as mathematical problem-solving.
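
The core of GRPO is that each sampled completion is judged relative to the other completions for the same prompt, rather than against a learned value function. A minimal sketch of that group-relative advantage step follows. The function name `group_relative_advantages` and the `eps` term are illustrative assumptions, and the population standard deviation is used here for simplicity; real implementations may differ.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize one prompt's group of rewards to zero mean and unit scale.

    rewards: scalar rewards, one per sampled completion of the same prompt.
    eps: small constant (an assumption) that avoids division by zero when
         every completion in the group receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four completions for one math prompt: two correct (1.0), two wrong (0.0).
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

Correct completions end up with positive advantages and incorrect ones with negative advantages, so the policy update pushes probability toward the better answers within each group.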

with @polsa.studenci @astro_peggy @astro_slawosz @tibor_to_orbit

uv run --python 3.12 --with '.[test]' pytest

https://til.simonwillison.net/pytest/pytest-uv

Starting with Qwen2VL-Instruct-2B, they spent $3 on compute and got it to outperform the 72B

github.com/Deep-Agent/R...

📄 Blog: qwenlm.github.io/blog/qwen2.5...

This is an attempt to consolidate the dizzying rate of AI developments since Christmas. If you're into AI but not deep enough, this should get you oriented again.

timkellogg.me/blog/2025/01...

Enums are objects, why not give them attributes?

https://blog.glyph.im/2025/01/active-enum.html
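
As a quick illustration of the question, here is one way to hang attributes and methods on enum members using only the standard library's `enum` module. This is a sketch and not necessarily the pattern the linked post recommends; the `Shape` class and its fields are made up for the example.

```python
from enum import Enum


class Shape(Enum):
    # Each member's value is a tuple; Enum passes its items to __init__.
    TRIANGLE = ("triangle", 3)
    SQUARE = ("square", 4)

    def __init__(self, label, sides):
        # Plain instance attributes, set once when the member is created.
        self.label = label
        self.sides = sides

    def describe(self):
        """Members are ordinary objects, so they can carry behavior too."""
        return f"{self.label} with {self.sides} sides"


print(Shape.SQUARE.sides)
print(Shape.TRIANGLE.describe())
```

Each member still behaves like a normal enum value (identity comparison, iteration, lookup by value), while the related data lives on the member itself instead of in a separate lookup table.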

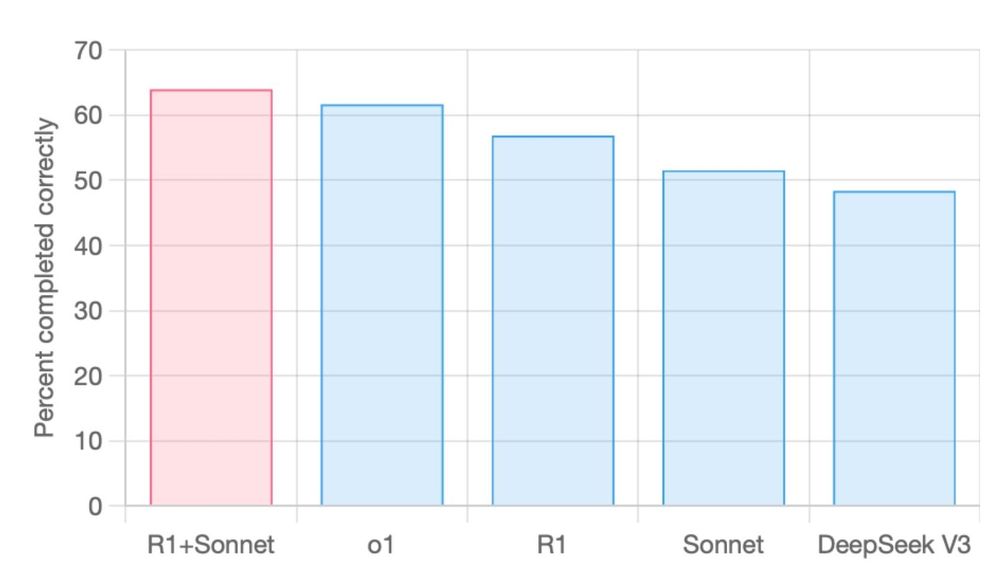

64% R1+Sonnet

62% o1

57% R1

52% Sonnet

48% DeepSeek V3

aider.chat/2025/01/24/r...

That’s 100% on needle in the haystack all the way through 4M, as i understand (seems like a benchmark mistake tbqh, it’s too good)

www.minimaxi.com/en/news/mini...

- Performance surpasses models like Llama3.1-8B and Qwen2.5-7B

- Capable of deep reasoning with system prompts

- Trained only on 4T high-quality tokens

huggingface.co/collections/...

Details in 🧵

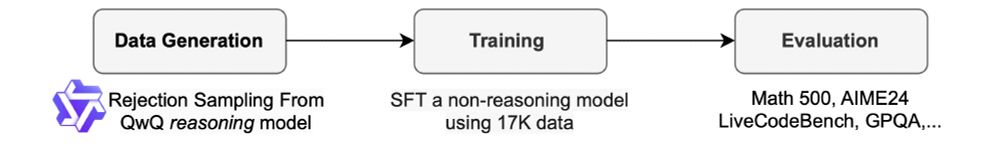

That was quick! Is this already the Alpaca moment for reasoning models?

Source: novasky-ai.github.io/posts/sky-t1/

Here's everything released; find a text-readable version here huggingface.co/posts/merve/...

All models are here huggingface.co/collections/...

Let the LLM 'contemplate' for a bit before answering using this simple system prompt, which might (in most cases) lead to the correct final answer!

maharshi.bearblog.dev/contemplativ...
