huggingface.co/collections/...

https://huggingface.co/docs/api-inference

https://huggingface.co/playground

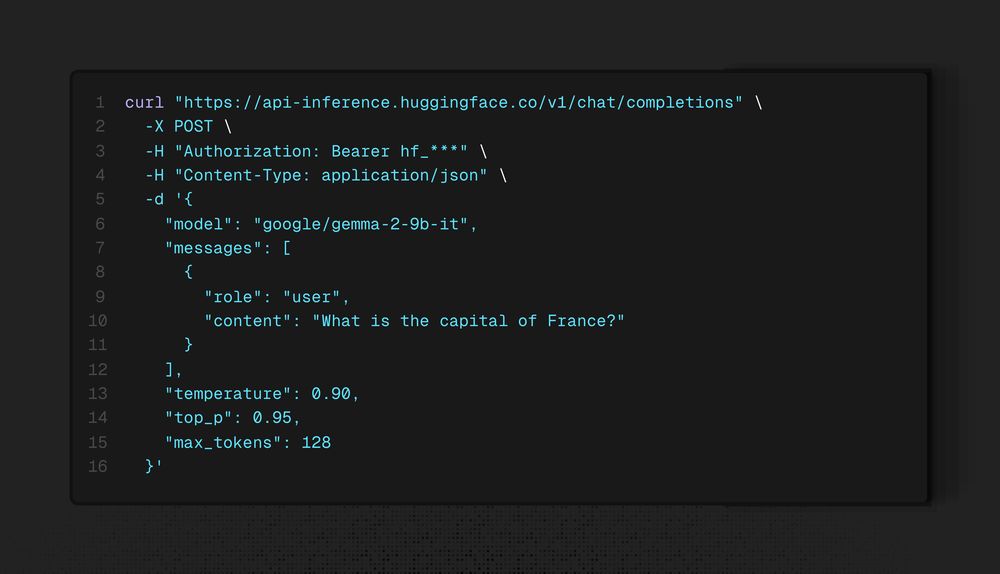

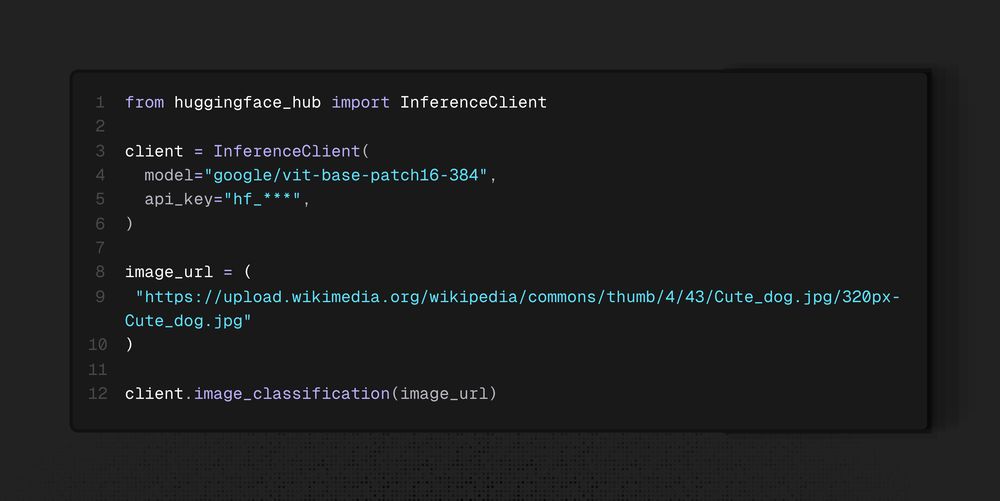

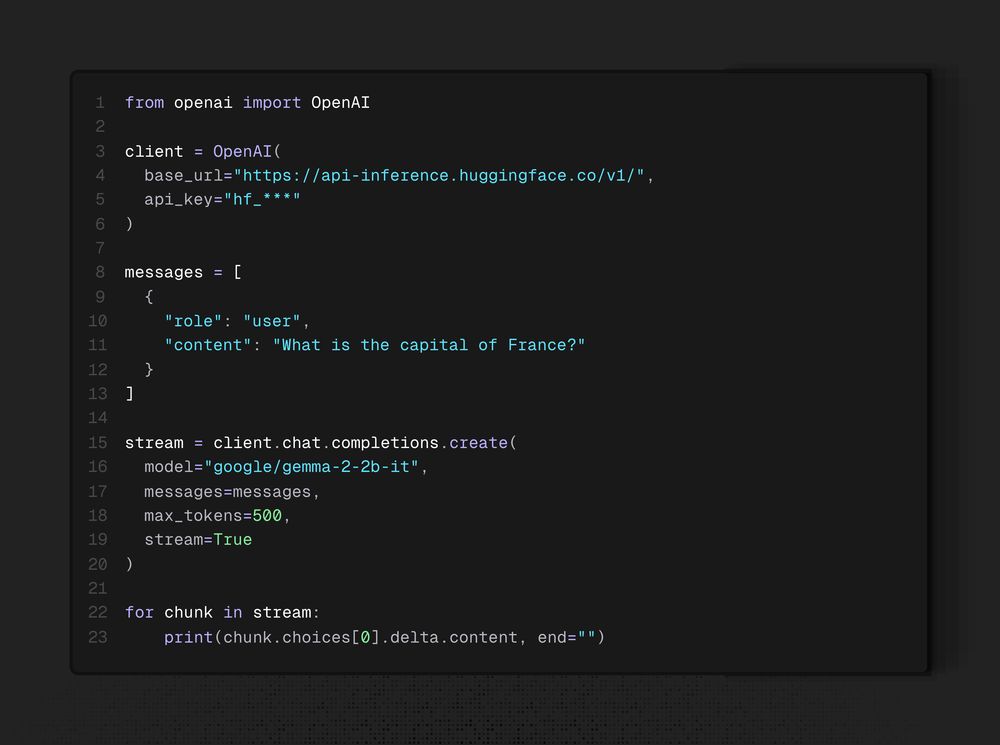

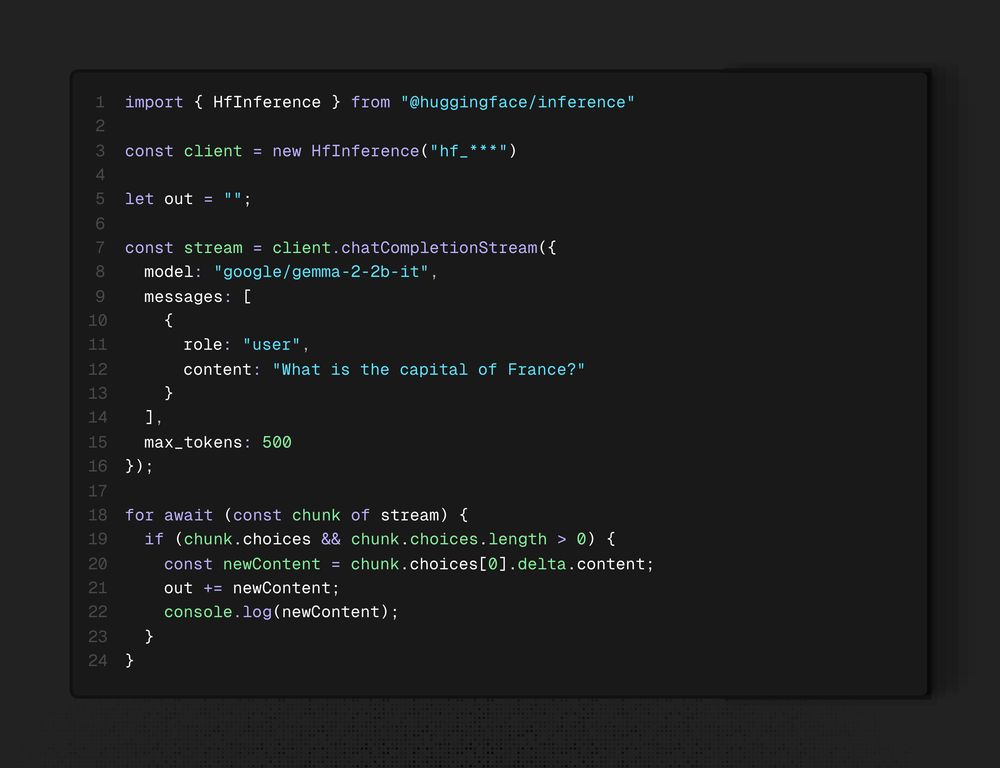

Find all the alternatives at https://huggingface.co/docs/api-inference

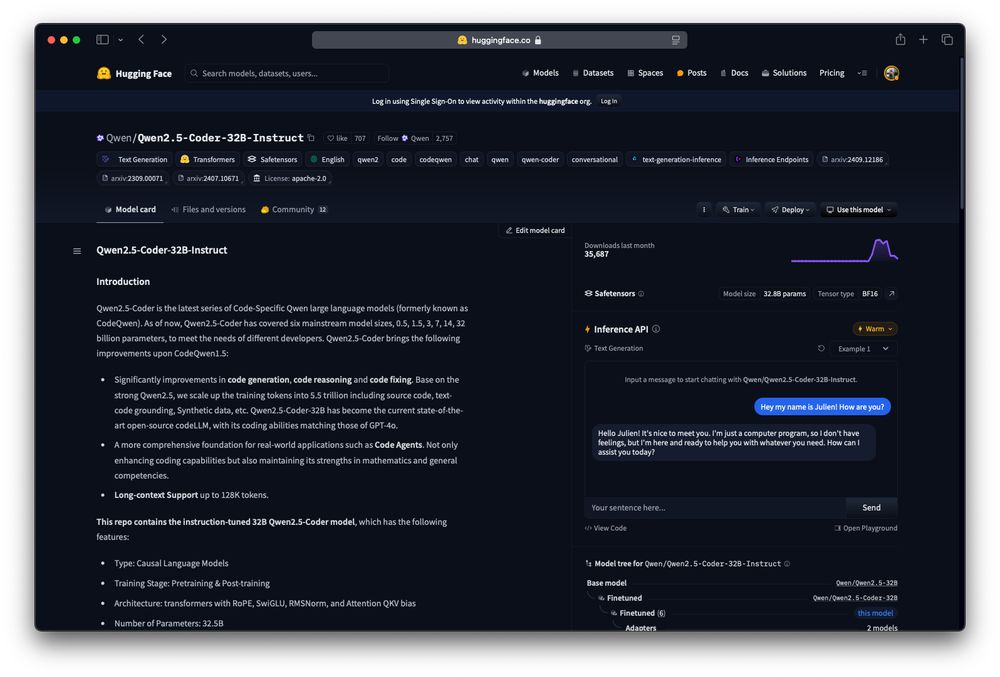

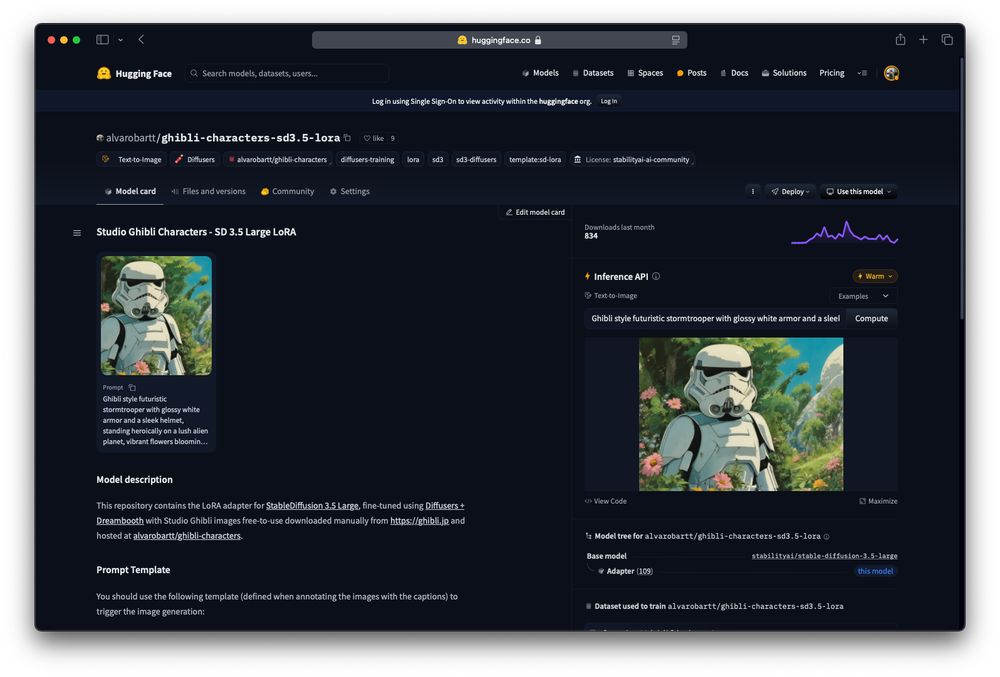

The most straightforward option is directly on the Hugging Face Hub, from the model card of any model supported by the Serverless API!
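Besides the model card, the Serverless API can also be called over plain HTTP. Here is a minimal sketch using only the Python standard library. It assumes the endpoint prefix `https://api-inference.huggingface.co/models/`, a JSON body of the form `{"inputs": ...}` and a bearer-token `Authorization` header. These match the API as historically documented, but the current docs may describe a different URL. `build_inference_request` and `query` are hypothetical helper names, not part of any Hugging Face library.

```python
import json
import urllib.request

# Assumed endpoint prefix for the Serverless Inference API; check
# https://huggingface.co/docs/api-inference, as the hosting URL may have changed.
API_BASE = "https://api-inference.huggingface.co/models/"


def build_inference_request(model_id: str, token: str, inputs: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for the given model."""
    payload = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url=API_BASE + model_id,
        data=payload,
        headers={
            # Hypothetical placeholder token goes here; use your own access token.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def query(model_id: str, token: str, inputs: str) -> object:
    """Send the request and decode the JSON response (needs network access)."""
    req = build_inference_request(model_id, token, inputs)
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `query("gpt2", "YOUR_HF_TOKEN", "Hello")` would send a request. The model ID and token are placeholders, and the call only succeeds with network access, a valid token and a model whose card shows Serverless API support. Keeping request construction separate from sending lets you inspect the URL, headers and payload without a network round trip.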