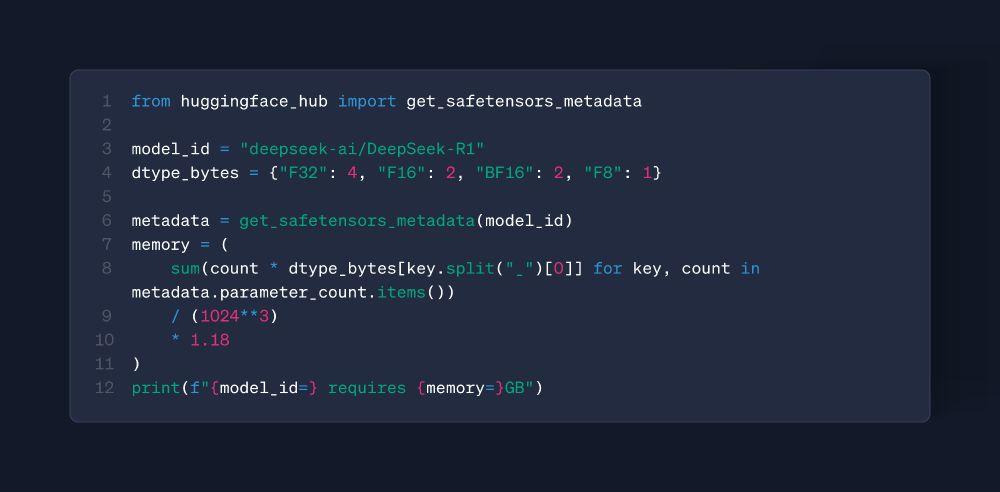

P.S. The result of the script above is: "model_id='deepseek-ai/DeepSeek-R1' requires memory=756.716GB"
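
The script itself only appears as an image, so here is a rough, hypothetical sketch of how such an estimate can be computed: multiply the number of parameters stored in each dtype by that dtype's size in bytes, then sum. This is not the original script. `estimate_memory_gb`, the `overhead` multiplier and the use of decimal GB (1e9 bytes) are assumptions, and the sketch will not necessarily reproduce the 756.716GB figure above.

```python
# Hypothetical sketch: estimate the memory needed to hold a model's weights.
# NOT the script from the screenshot; dtype names follow the safetensors format.

# Bytes per element for common safetensors dtypes
DTYPE_BYTES = {
    "F64": 8, "I64": 8,
    "F32": 4, "I32": 4,
    "F16": 2, "BF16": 2, "I16": 2,
    "F8_E4M3": 1, "F8_E5M2": 1, "I8": 1, "U8": 1, "BOOL": 1,
}


def estimate_memory_gb(parameters: dict[str, int], overhead: float = 1.0) -> float:
    """Return the estimated memory in decimal GB (1 GB = 1e9 bytes).

    `parameters` maps a dtype name to the number of parameters stored in it.
    `overhead` is an assumed multiplier for activations, KV cache, etc.
    """
    total_bytes = 0
    for dtype, count in parameters.items():
        if dtype not in DTYPE_BYTES:
            raise ValueError(f"unknown dtype: {dtype}")
        total_bytes += count * DTYPE_BYTES[dtype]
    return total_bytes * overhead / 1e9


# Made-up parameter counts for illustration, NOT DeepSeek-R1's real breakdown
example = {"F8_E4M3": 7_000_000_000, "BF16": 500_000_000}
print(f"requires memory={estimate_memory_gb(example):.3f}GB")  # requires memory=8.000GB
```

For repos stored as safetensors, recent `huggingface_hub` versions may expose such a dtype-to-count dict as `HfApi().model_info(model_id).safetensors.parameters`; check the library docs for your version before relying on it.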

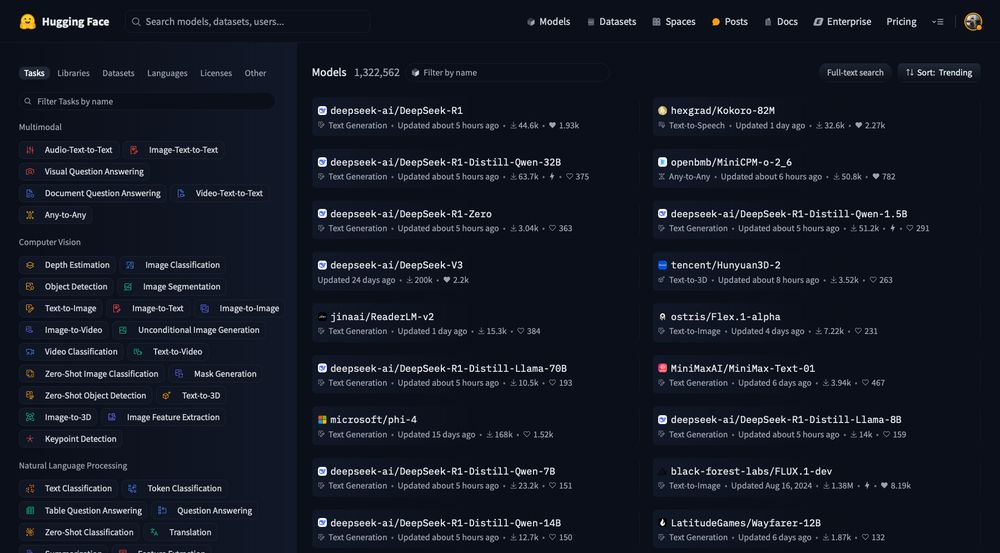

Amazing stuff from the DeepSeek team! ICYMI, they recently released two reasoning models, DeepSeek-R1 and DeepSeek-R1-Zero: fully open-source, MIT licensed, and with performance on par with OpenAI-o1!

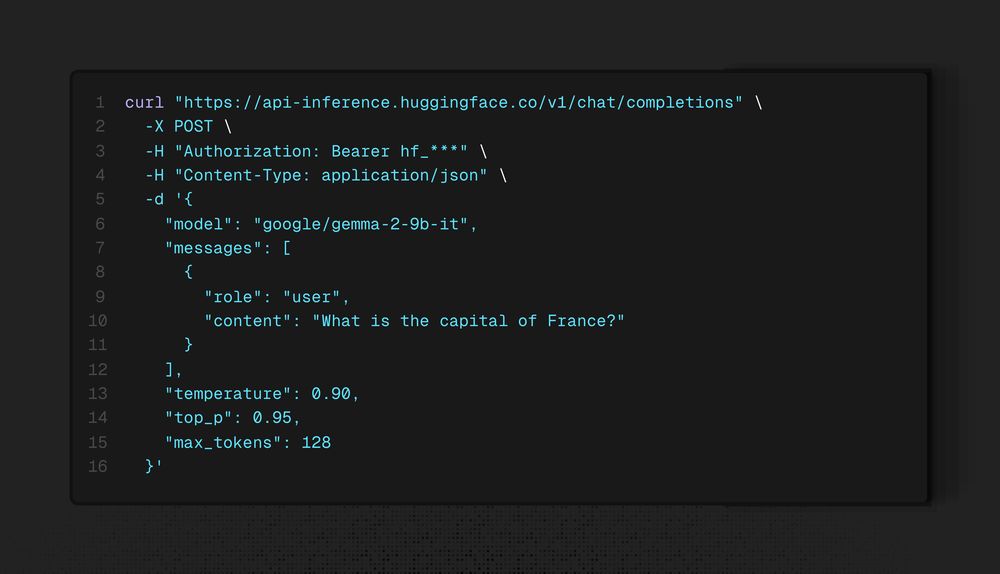

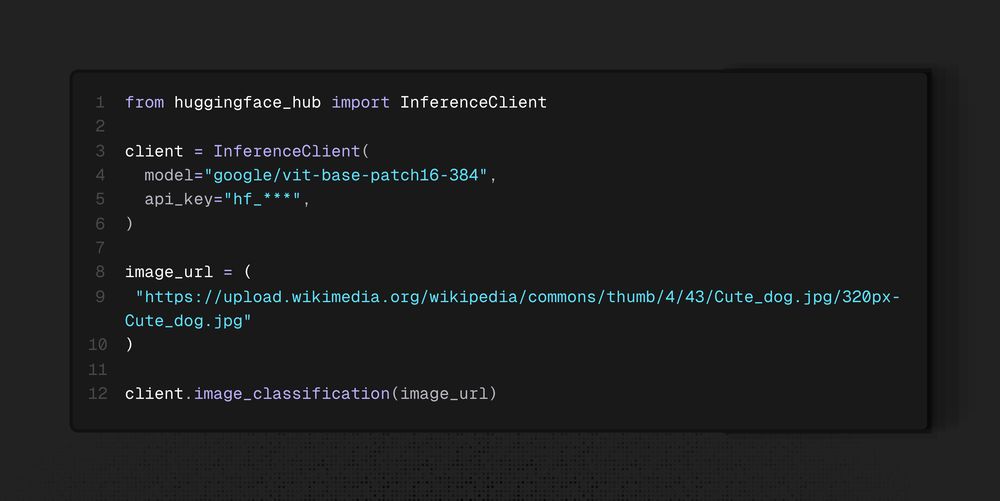

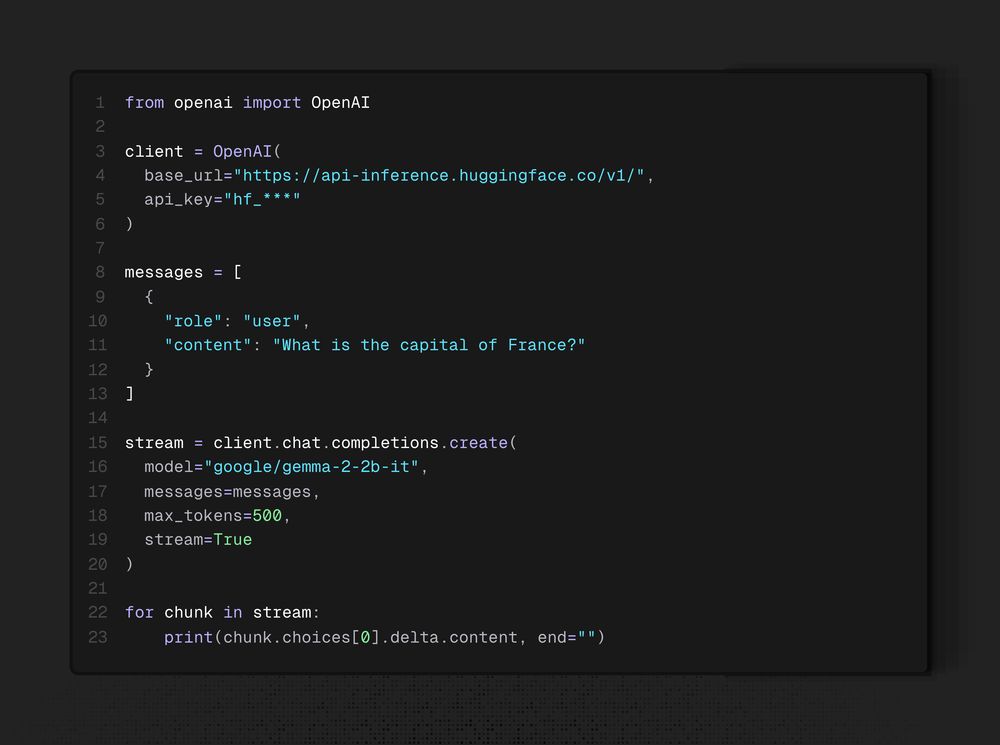

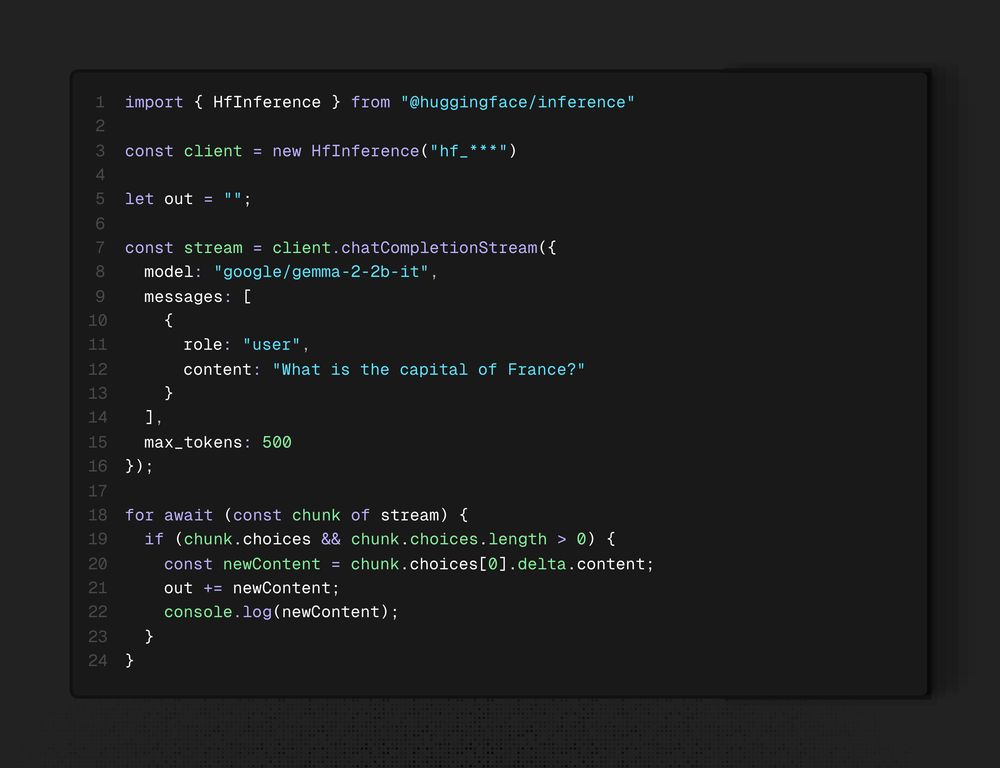

https://huggingface.co/docs/api-inference

https://huggingface.co/playground

Find all the alternatives at https://huggingface.co/docs/api-inference
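
If you prefer code over the UI, a minimal sketch of calling the Serverless Inference API from Python could look like this. It assumes `huggingface_hub` is installed and that an access token (created with the free Hub account) is stored in the `HF_TOKEN` environment variable. The helper name `ask` and its defaults are hypothetical, and whether `deepseek-ai/DeepSeek-R1` is being served at a given moment is not guaranteed.

```python
# Hypothetical sketch: send a chat prompt to a model through the
# Hugging Face Serverless Inference API via huggingface_hub's InferenceClient.
# Assumes `pip install huggingface_hub` and an HF access token in $HF_TOKEN.
import os


def ask(prompt: str, model: str = "deepseek-ai/DeepSeek-R1", client=None,
        max_tokens: int = 512) -> str:
    """Send a single-turn chat request and return the generated text."""
    if client is None:
        # Imported lazily so a stand-in client can be passed instead
        from huggingface_hub import InferenceClient
        client = InferenceClient(token=os.environ.get("HF_TOKEN"))
    response = client.chat_completion(
        messages=[{"role": "user", "content": prompt}],
        model=model,
        max_tokens=max_tokens,
    )
    # The first choice holds the assistant's reply
    return response.choices[0].message.content
```

Usage: `print(ask("How many r's are in 'strawberry'?"))`. The optional `client` argument exists only so the function can be exercised without network access.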

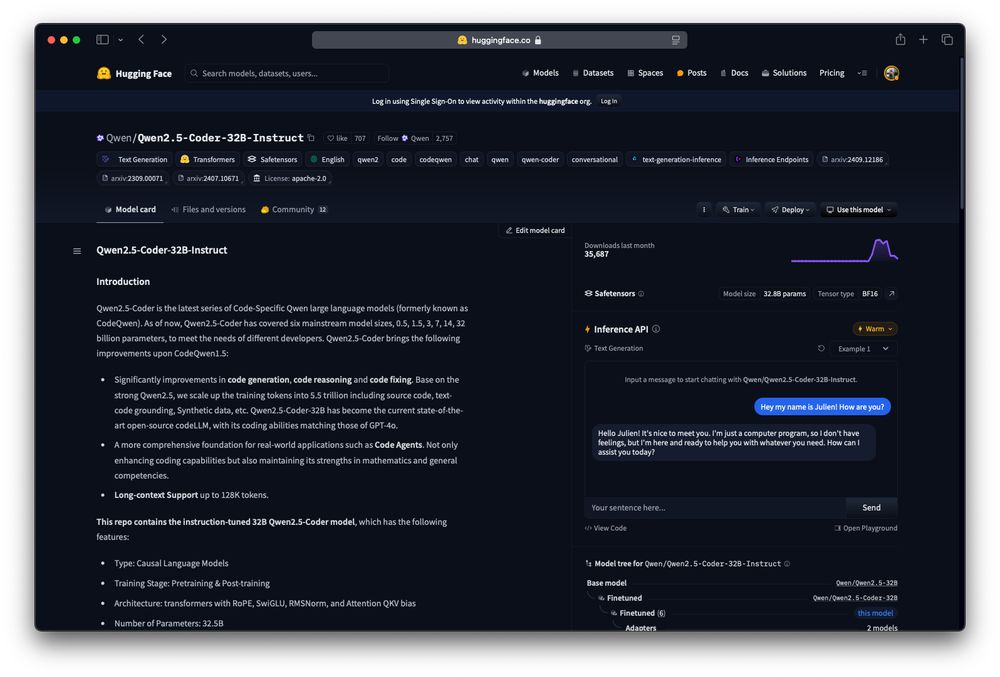

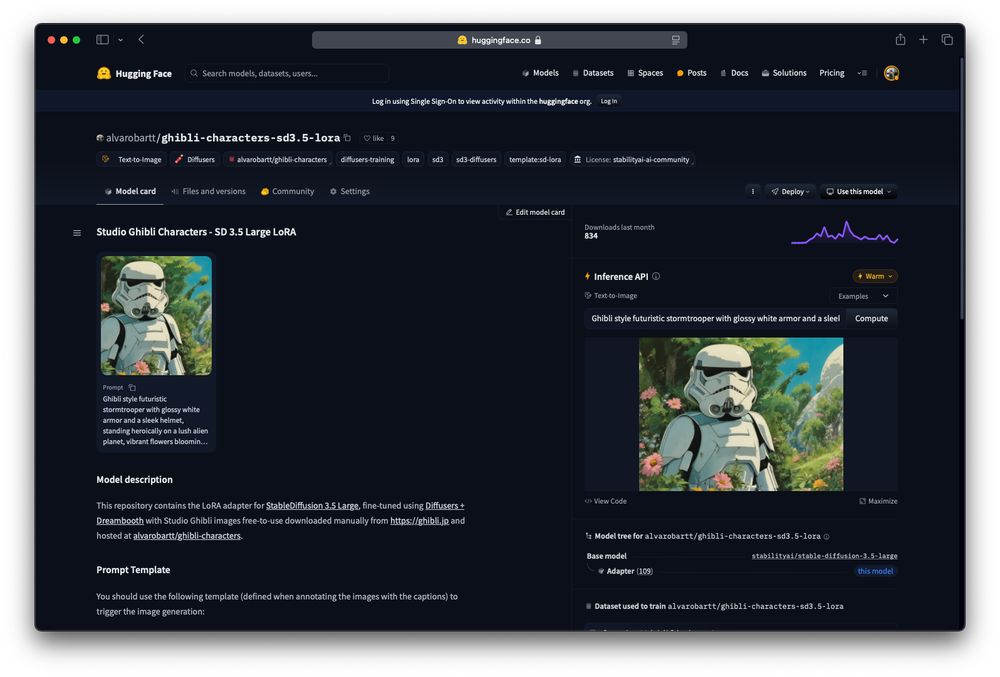

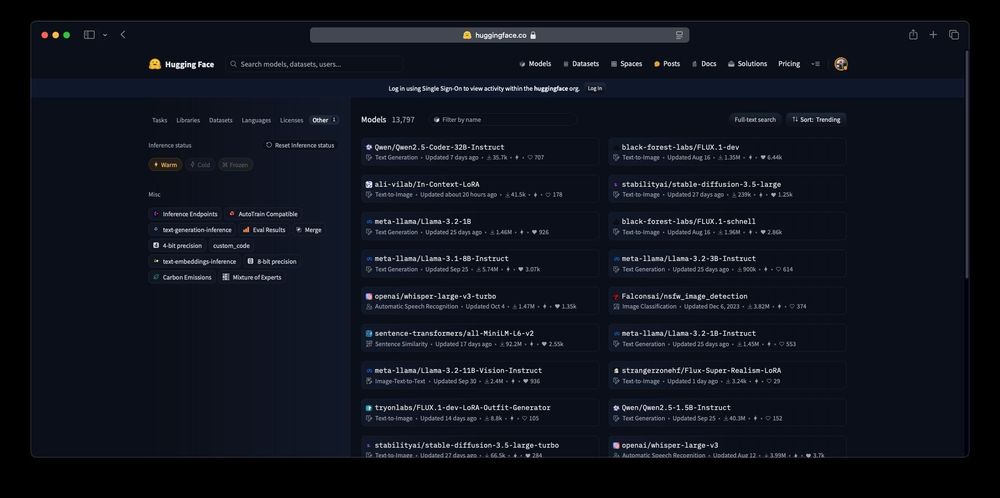

The most straightforward one is via the Hugging Face Hub, right on the model card of any model supported by the Serverless API!

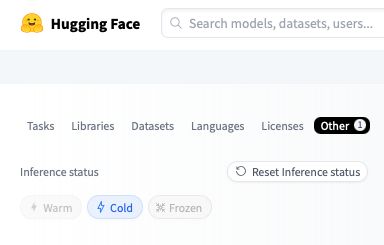

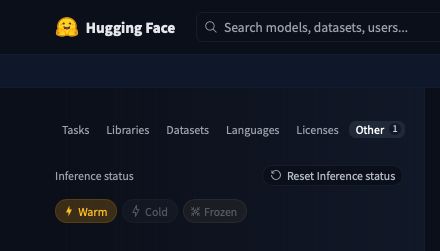

Well, we have the "Warm" tag that indicates that a model is loaded in the Serverless API and ready to be used 🔥
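
The "Warm" tag is shown in the Hub UI. From a script, a rough equivalent might be to ask the API whether the model is loaded. This sketch assumes a `huggingface_hub` version that still provides `InferenceClient.get_model_status`, which returns a status object with a boolean `loaded` field; newer releases may deprecate or remove it, and `is_warm` is a hypothetical helper name.

```python
# Hypothetical sketch: check whether a model is currently loaded ("warm")
# on the Serverless Inference API. Assumes a huggingface_hub release that
# still offers InferenceClient.get_model_status (may be deprecated later).
import os


def is_warm(model: str = "deepseek-ai/DeepSeek-R1", client=None) -> bool:
    """Return True if the Serverless API reports the model as loaded."""
    if client is None:
        # Imported lazily so a stand-in client can be passed instead
        from huggingface_hub import InferenceClient
        client = InferenceClient(token=os.environ.get("HF_TOKEN"))
    status = client.get_model_status(model)
    return bool(status.loaded)
```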

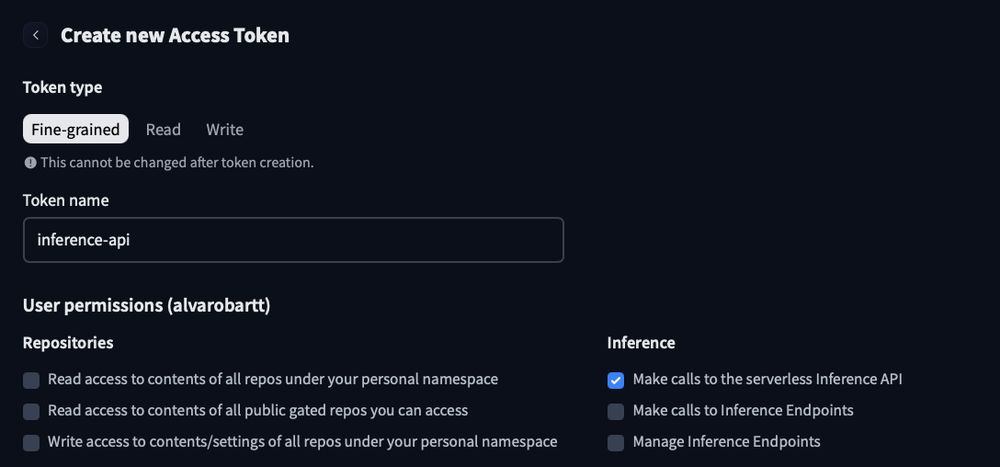

You just need a free account on the Hugging Face Hub (you can also subscribe to PRO to raise your hourly request limit).

More details on the thread 🧵