Can we make Perlin noise stretch along an underlying vector field? Well, it turns out it's possible with two simple additions to the original method! No need for advection or convolutions.

Find the paper and implementations here:

github.com/jakericedesi...

Definitely need more sites and a better approach to accelerating sampling...

30,000 Voronoi sites. Probably not enough for the pighead, but it's just a fun first test.

I've compiled all of my recent Voronoi experiments into a Colab notebook:

colab.research.google.com/github/jaker...

We weight the softmax result at each site i by sin(F_i (x_i − x_j) · u_i), where F_i is a learned frequency and u_i is a learned anisotropy direction. I call it Gaboroni.
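A minimal NumPy sketch of that weighting, under a few assumptions not stated above: the softmax is taken over negative squared distances with a temperature `tau`, x_j is read as the query point, and each site carries a hypothetical value `colors` that gets blended. The function name `gaboroni` and all of its parameters are illustrative, not the actual code from the Colab.

```python
import numpy as np

def gaboroni(x, sites, colors, freqs, dirs, tau=1e-3):
    """Soft-Voronoi blend with a per-site sinusoidal modulation (illustrative sketch).

    x:      (Q, 2) query points (assumed to play the role of x_j)
    sites:  (N, 2) site positions x_i
    colors: (N, C) hypothetical per-site values, e.g. RGB
    freqs:  (N,)   per-site frequency F_i
    dirs:   (N, 2) per-site anisotropy direction u_i (normalized here)
    tau:    hypothetical softmax temperature
    """
    diff = sites[None, :, :] - x[:, None, :]           # (Q, N, 2): x_i - x
    logits = -np.sum(diff ** 2, axis=-1) / tau         # closer sites get larger logits
    logits -= logits.max(axis=1, keepdims=True)        # numerical stability
    w = np.exp(logits)
    w /= w.sum(axis=1, keepdims=True)                  # softmax over sites
    u = dirs / np.linalg.norm(dirs, axis=1, keepdims=True)
    proj = np.einsum("qnk,nk->qn", diff, u)            # (x_i - x) . u_i
    mod = np.sin(freqs[None, :] * proj)                # sin(F_i (x_i - x) . u_i)
    return (w * mod) @ colors                          # (Q, C)

# Usage: render a 64x64 RGB image from 256 random sites.
rng = np.random.default_rng(0)
N = 256
sites = rng.random((N, 2))
colors = rng.random((N, 3))
freqs = rng.uniform(20.0, 60.0, N)
dirs = rng.normal(size=(N, 2))
ys, xs = np.mgrid[0:64, 0:64] / 64.0
queries = np.stack([xs.ravel(), ys.ravel()], axis=1)
img = gaboroni(queries, sites, colors, freqs, dirs).reshape(64, 64, 3)
```

With a single site the softmax weight is 1, so the output reduces to colors[0] · sin(F_0 (x_0 − x) · u_0); in the sketch above F_i and u_i are random rather than learned.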

It's not at all a good compression method, but it's fun and cool :)

If anyone has a simple method for finding the exact distance to a plane clipped by a bounding box I'd be eternally grateful.
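For what it's worth, here is one straightforward (if not especially short) way to get the exact distance, sketched in NumPy: clip the plane n·x = d against the 12 box edges to get a convex polygon, project the query point onto the plane, and return the plane distance if the projection lands inside the polygon, otherwise the distance to the nearest polygon edge. The function names are hypothetical, and degenerate cases (the plane only grazing a box edge or corner) are handled only roughly.

```python
import numpy as np

def _segment_distance(p, a, b):
    """Euclidean distance from point p to segment ab."""
    ab = b - a
    denom = ab @ ab
    t = 0.0 if denom == 0.0 else float(np.clip((p - a) @ ab / denom, 0.0, 1.0))
    return float(np.linalg.norm(p - (a + t * ab)))

def clipped_plane_polygon(n, d, bmin, bmax, eps=1e-12):
    """Vertices of {x : n.x = d} intersected with the box, ordered counter-clockwise about n."""
    n = np.asarray(n, dtype=float)
    lo, hi = np.asarray(bmin, dtype=float), np.asarray(bmax, dtype=float)
    # Corner k takes hi[axis] when bit `axis` of k is set, else lo[axis].
    corners = np.array([[hi[a] if (k >> a) & 1 else lo[a] for a in range(3)] for k in range(8)])
    s = corners @ n - d                                # signed plane value at each corner
    pts = [corners[k] for k in range(8) if abs(s[k]) <= eps]
    for i in range(8):
        for j in range(i + 1, 8):
            # Box edges join corners whose indices differ in exactly one bit.
            if (i ^ j) not in (1, 2, 4) or s[i] * s[j] >= 0.0:
                continue
            t = s[i] / (s[i] - s[j])                   # strict sign change along this edge
            pts.append(corners[i] + t * (corners[j] - corners[i]))
    uniq = []
    for p in pts:                                      # drop near-duplicate vertices
        if all(np.linalg.norm(p - q) > 1e-9 for q in uniq):
            uniq.append(p)
    if len(uniq) < 3:
        return np.array(uniq).reshape(-1, 3)
    nh = n / np.linalg.norm(n)
    a = np.array([1.0, 0.0, 0.0]) if abs(nh[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    e1 = np.cross(nh, a)
    e1 /= np.linalg.norm(e1)
    e2 = np.cross(nh, e1)                              # e1 x e2 = nh (right-handed frame)
    P = np.array(uniq)
    rel = P - P.mean(axis=0)
    return P[np.argsort(np.arctan2(rel @ e2, rel @ e1))]

def distance_to_clipped_plane(p, n, d, bmin, bmax):
    """Exact distance from p to the plane n.x = d restricted to the box [bmin, bmax]."""
    p = np.asarray(p, dtype=float)
    n = np.asarray(n, dtype=float)
    V = clipped_plane_polygon(n, d, bmin, bmax)
    if len(V) == 0:
        return float("inf")                            # plane misses the box entirely
    if len(V) == 1:
        return float(np.linalg.norm(p - V[0]))
    if len(V) == 2:
        return _segment_distance(p, V[0], V[1])
    nn = n @ n
    q = p - (p @ n - d) / nn * n                       # orthogonal projection onto the plane
    nh = n / np.sqrt(nn)
    m = len(V)
    inside = all(np.cross(V[(k + 1) % m] - V[k], q - V[k]) @ nh >= -1e-12 for k in range(m))
    if inside:
        return float(abs(p @ n - d) / np.sqrt(nn))
    # Otherwise the closest point of the convex polygon lies on its boundary.
    return min(_segment_distance(p, V[k], V[(k + 1) % m]) for k in range(m))
```

For example, the plane z = 0.5 clipped by the unit cube is a unit square, so the point (2, 0.5, 0.5) is at distance 1 (to the edge x = 1), while (0.5, 0.5, 2) is at distance 1.5 (straight down onto the square).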

In our SIGGRAPH Asia 2025 paper, “BSP-OT: Sparse transport plans between discrete measures in log-linear time”, we compute one with typically about 1% error in a few seconds on CPU!

(2/2)

s2025.conference-schedule.org/presentation...
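To give a feel for how recursive partitioning leads to log-linear time, here is a heavily simplified toy, not the BSP-OT algorithm from the paper: for two point sets of equal size with uniform weights, split both along the same random direction at the median, recurse on the matching halves, and pair up the singletons at the leaves. Using `np.argpartition` keeps each recursion level roughly linear, so the whole matching is about O(n log n). The function name `bsp_match` and the random-direction choice are assumptions for illustration only.

```python
import numpy as np

def bsp_match(X, Y, rng=None):
    """Toy bijective matching between equal-size point sets via recursive median splits.

    Returns perm such that X[k] is matched to Y[perm[k]].
    """
    assert X.shape == Y.shape
    rng = np.random.default_rng(0) if rng is None else rng
    perm = np.empty(len(X), dtype=int)

    def rec(ix, iy):
        if len(ix) <= 1:
            if len(ix) == 1:
                perm[ix[0]] = iy[0]                     # leaf: one point on each side
            return
        # Hypothetical split rule: a random unit direction shared by both sets.
        u = rng.normal(size=X.shape[1])
        u /= np.linalg.norm(u)
        h = len(ix) // 2                                # same split size on both sides
        # argpartition puts the h smallest projections first (linear time on average).
        ox = ix[np.argpartition(X[ix] @ u, h)]
        oy = iy[np.argpartition(Y[iy] @ u, h)]
        rec(ox[:h], oy[:h])
        rec(ox[h:], oy[h:])

    rec(np.arange(len(X)), np.arange(len(Y)))
    return perm

# Usage: match two random 2D clouds and measure the squared-distance cost.
rng = np.random.default_rng(1)
X = rng.random((100, 2))
Y = rng.random((100, 2))
perm = bsp_match(X, Y)
cost = np.sum((X - Y[perm]) ** 2)
```

The result is a permutation, i.e. a maximally sparse transport plan; in 1D it reduces to matching points by rank, while in higher dimensions its cost is generally higher than an optimal assignment.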

theorangeduck.com/page/filteri...

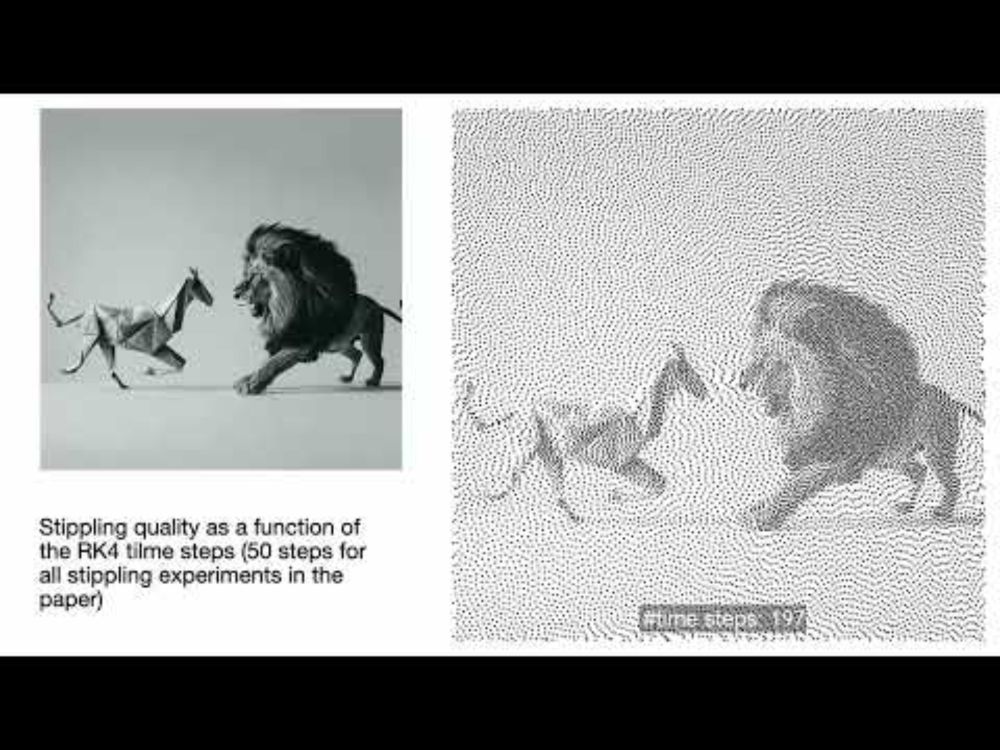

Paper here: perso.liris.cnrs.fr/nicolas.bonn...

Results in video: youtu.be/EcfKnSe6mhk

www.linkedin.com/pulse/creati...

Can we get anything interesting from a single cell?

For this we'll be using a standard MAC grid, which means we'll represent horizontal velocities (red) on the vertical edges and vertical velocities (green) on the horizontal edges of each cell.
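A small Python sketch of that single-cell layout, with hypothetical names: four face velocities, the discrete divergence, linear interpolation of each component inside the cell, and a one-cell pressure projection that assumes zero pressure outside the cell (an assumption for illustration, not necessarily what the shader does).

```python
from dataclasses import dataclass

@dataclass
class MACCell:
    """One MAC-grid cell of size dx by dy with its lower-left corner at the origin.

    u_left, u_right:  horizontal velocities stored on the left/right (vertical) edges
    v_bottom, v_top:  vertical velocities stored on the bottom/top (horizontal) edges
    """
    u_left: float
    u_right: float
    v_bottom: float
    v_top: float
    dx: float = 1.0
    dy: float = 1.0

    def divergence(self) -> float:
        # Finite difference of the face velocities across the cell: du/dx + dv/dy.
        return (self.u_right - self.u_left) / self.dx + (self.v_top - self.v_bottom) / self.dy

    def velocity_at(self, x: float, y: float) -> tuple[float, float]:
        # u is stored only at x = 0 and x = dx, so it is interpolated linearly in x;
        # v likewise in y. Points outside the cell are clamped onto it.
        sx = min(max(x / self.dx, 0.0), 1.0)
        sy = min(max(y / self.dy, 0.0), 1.0)
        u = (1.0 - sx) * self.u_left + sx * self.u_right
        v = (1.0 - sy) * self.v_bottom + sy * self.v_top
        return u, v

    def project(self, dt: float = 1.0, rho: float = 1.0) -> "MACCell":
        # Assumes zero pressure in all four neighbours; solves for the single cell
        # pressure p that makes the corrected face velocities divergence-free.
        k = dt / rho
        p = -self.divergence() / (2.0 * k * (1.0 / self.dx ** 2 + 1.0 / self.dy ** 2))
        return MACCell(
            u_left=self.u_left - k * p / self.dx,      # gradient (p - 0) / dx
            u_right=self.u_right + k * p / self.dx,    # gradient (0 - p) / dx
            v_bottom=self.v_bottom - k * p / self.dy,
            v_top=self.v_top + k * p / self.dy,
            dx=self.dx,
            dy=self.dy,
        )
```

For example, `MACCell(0.0, 1.0, 0.0, 0.0)` has divergence 1.0, and after `project()` its divergence is 0 up to rounding.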

shadertoy.com/view/lfKfWV

Hopefully it doesn't crash WebGL on your device! :)