We trained 3 models - 1.5B, 8B, 24B - from scratch on 2-4T tokens of custom data

(TLDR: we cheat and get good scores)

@wissamantoun.bsky.social @rachelbawden.bsky.social @bensagot.bsky.social @zehavoc.bsky.social

What does Riemann Zeta have to do with Brownian Motion?

youtu.be/YTQKbgxbtiw

It unifies message structures, asynchronous tool orchestration, and pluggable chat providers so you can build agents with ease and avoid vendor lock-in.

GitHub: github.com/MoonshotAI/k...

Docs: moonshotai.github.io/kosong/

www.dbreunig.com/2025/07/31/h...

Check out my student Annabelle’s paper in collaboration with @lestermackey.bsky.social and colleagues on low-rank thinning!

New theory, dataset compression, efficient attention and more:

arxiv.org/abs/2502.12063

BlenderMCP is a tool that connects Blender with Claude AI via the Model Context Protocol (MCP).

It's an honour to be part of such a great event with our folk horror adventure game set on the misty moors of Victorian England.

store.steampowered.com/app/1182310/...

We trained 2 new models. Like BERT, but modern. ModernBERT.

Not some hypey GenAI thing, but a proper workhorse model, for retrieval, classification, etc. Real practical stuff.

It's much faster, more accurate, longer context, and more useful. 🧵

My goal is not to argue who should get credit for what, but to show a progression of closely related ideas over time and across neighboring fields.

1/n

We want this to enable multi-disciplinary foundation model research.

About a month ago I told some students that one could work with geometry instead of pixels and solve persistence/hallucination issues. But they only had a week of runway, because someone else was probably working on it too.

Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢

🧵⬇️

Turns out you can, and here is how: arxiv.org/abs/2411.15099

Really excited to share this work on multimodal pretraining for my first bluesky entry!

🧵 A short and hopefully informative thread:

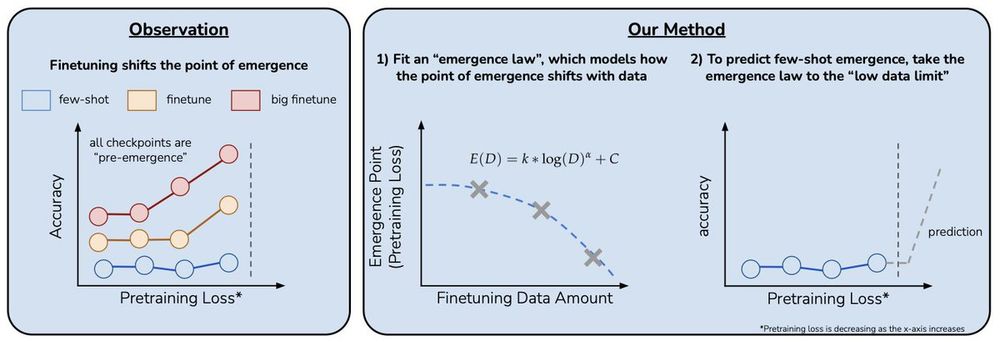

We propose a method for doing exactly this in our paper “Predicting Emergent Capabilities by Finetuning”🧵

We got this idea after their cool work on improving Plug and Play with FM: arxiv.org/abs/2410.02423

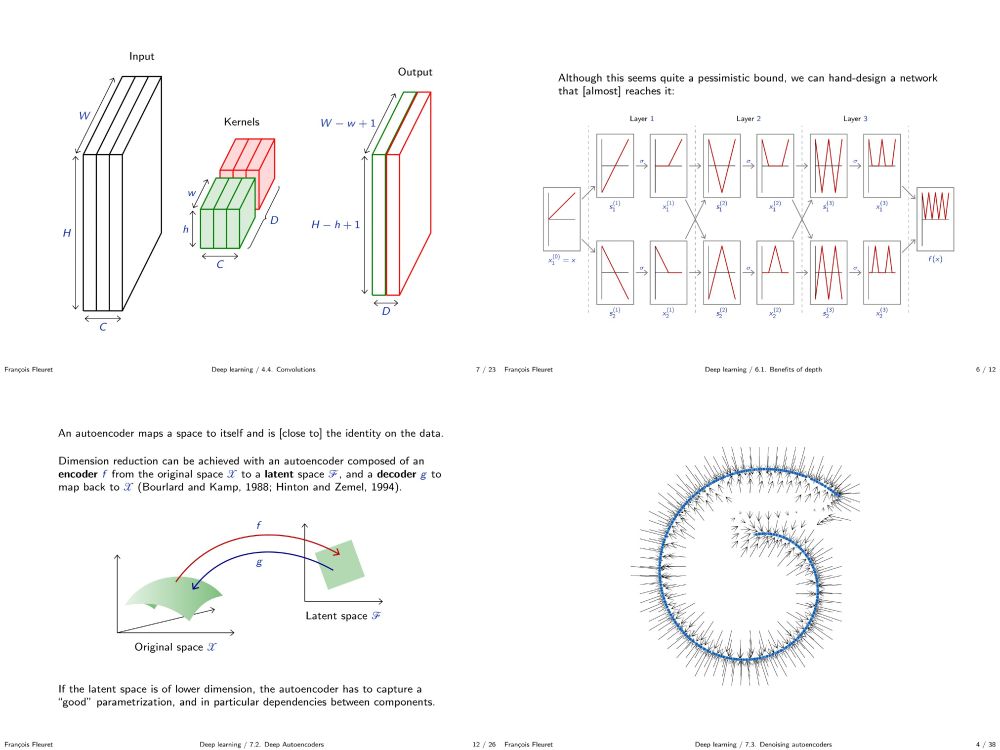

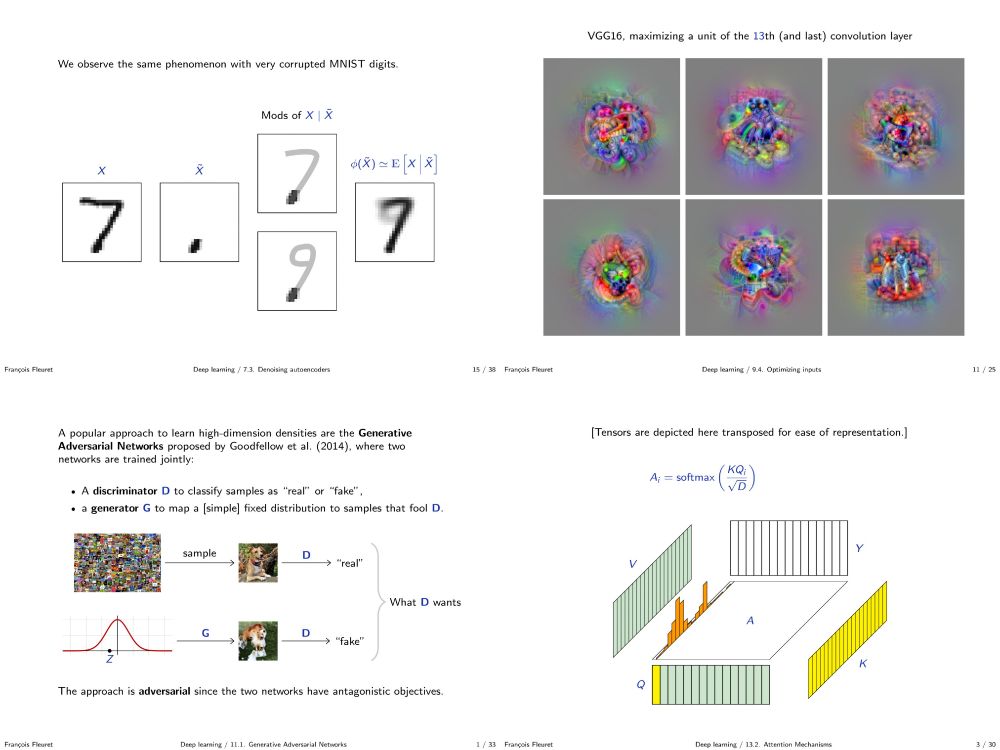

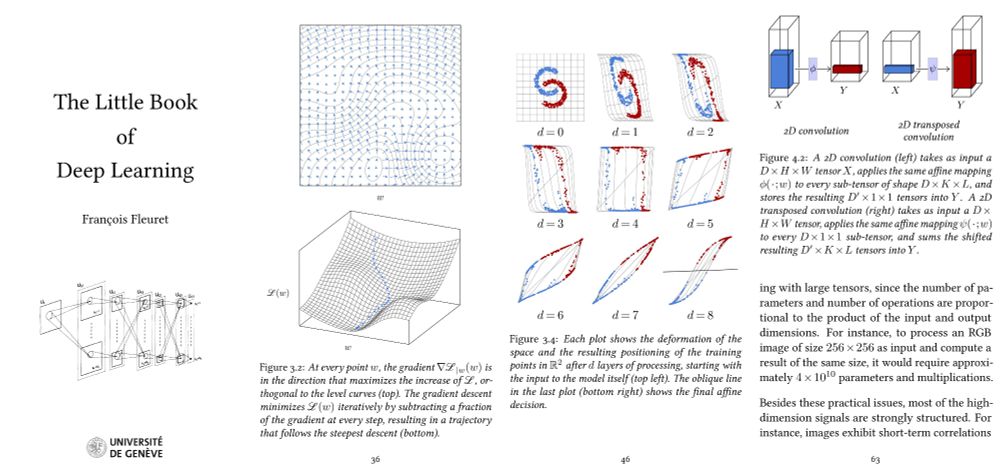

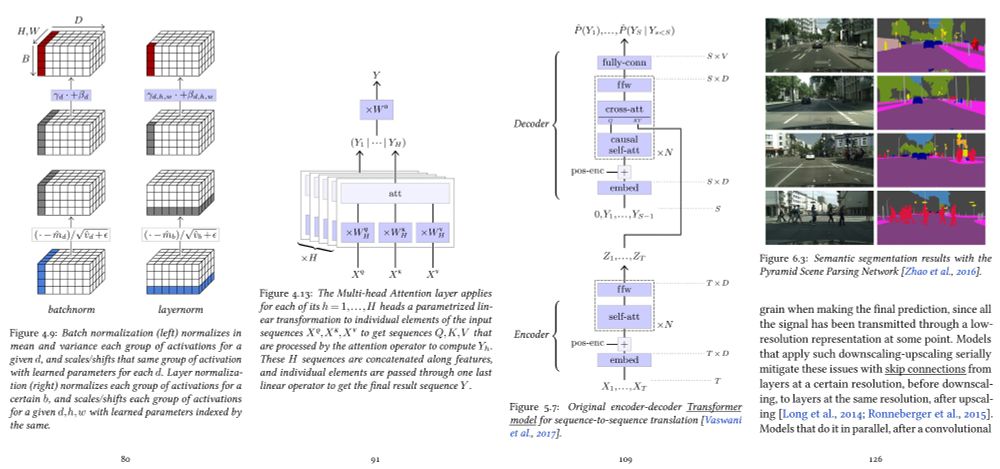

fleuret.org/dlc/

And my "Little Book of Deep Learning" is available as a phone-formatted pdf (nearing 700k downloads!)

fleuret.org/lbdl/

Consider a non-abelian group. Take two elements at random. What is the probability that they commute? 🧵

doi.org/10.1080/0002...
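
For small groups the question can be checked by brute force. The snippet below is a minimal sketch, not taken from the linked paper: it represents the symmetric groups S3 and S4 as permutation tuples and counts the ordered pairs that commute. The helper names `compose` and `commuting_probability` are made up for this illustration.

```python
from fractions import Fraction
from itertools import permutations, product


def compose(p, q):
    """Return the permutation p∘q (apply q first, then p)."""
    return tuple(p[q[i]] for i in range(len(p)))


def commuting_probability(elements, op):
    """Fraction of ordered pairs (a, b) with a*b == b*a, sampled uniformly."""
    elements = list(elements)
    commuting = sum(
        1 for a, b in product(elements, repeat=2) if op(a, b) == op(b, a)
    )
    return Fraction(commuting, len(elements) ** 2)


# Hypothetical test groups: all permutations of 3 and of 4 points.
s3 = list(permutations(range(3)))
s4 = list(permutations(range(4)))

print(commuting_probability(s3, compose))  # 1/2
print(commuting_probability(s4, compose))  # 5/24
```

Exhaustive counting is quadratic in the group order, so this only works for tiny groups, but it is enough to see that the answer depends on the group and drops well below 1 once commutativity fails.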
