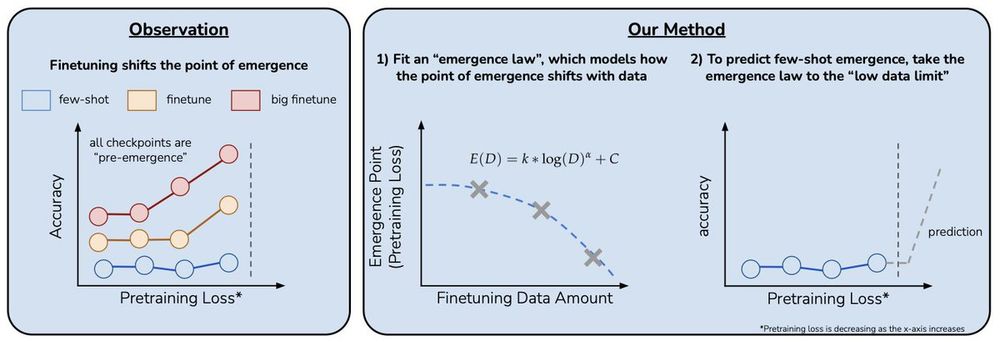

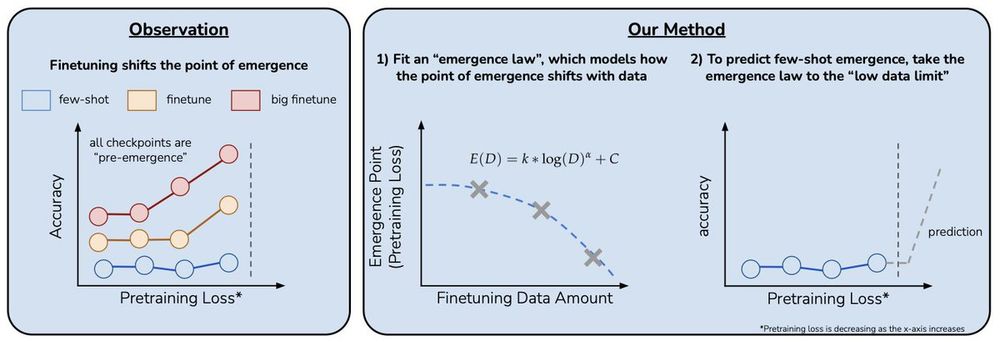

We propose a method for doing exactly this in our paper “Predicting Emergent Capabilities by Finetuning”🧵
