Blogging about data and steering AI (https://dataleverage.substack.com/)

dataleverage.substack.com/p/almost-eve...

🗣️ @b-cavello.bsky.social in our #4DCongress

✨ Workshop on Algorithmic Collective Action (ACA) ✨

acaworkshop.github.io

at NeurIPS 2025!

"This model is good for asking health questions, because 10,000 doctors attested to supporting training and/or eval". Etc.

On Thurs Aditya Karan will present on collective action dl.acm.org/doi/10.1145/... at 10:57 (New Stage A)

dataleverage.substack.com/p/on-ai-driv...

dataleverage.substack.com/p/google-and...

This will be post 1/3 in a series about viewing many AI products as all competing around the same task: ranking bundles or grains of records made by people.

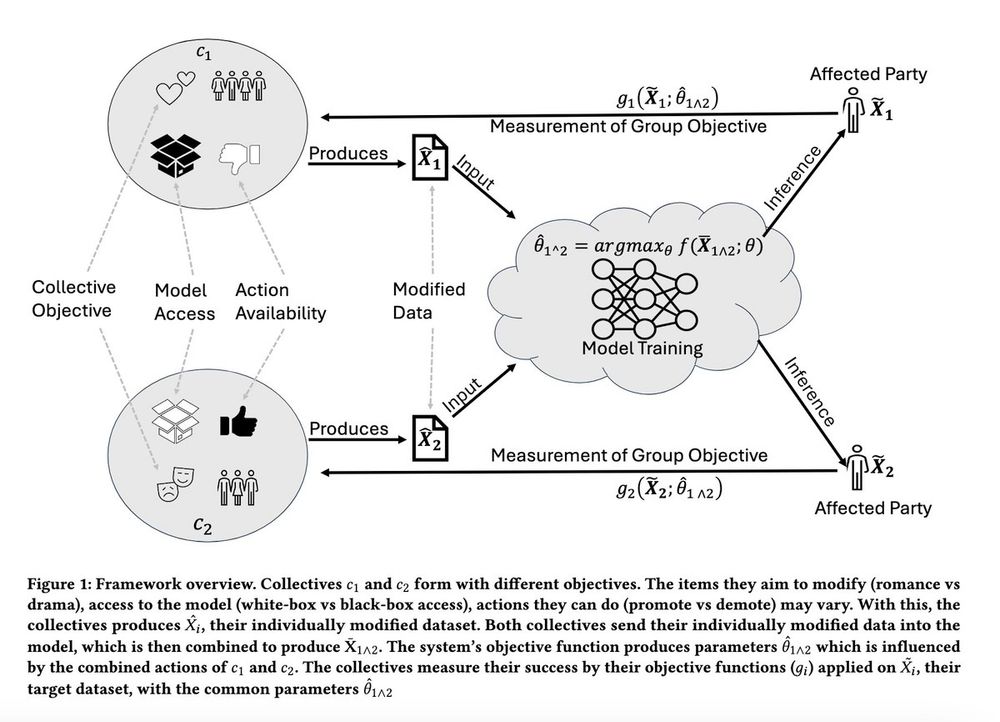

there's growing interest in algorithmic collective action, when a "collective" acts through data to impact a recommender system, classifier, or other model.

But... what happens if two collectives act at the same time?

"A consortium of Public AI labs can substantially improve data pricing, which may also help to concretize debates about the ethics and legality of training practices."

dataleverage.substack.com/p/public-ai-...

- @christinalu.bsky.social on need for plural #AI model ontologies (sounds technical, but has big consequences for human #commons)

www.combinationsmag.com/model-plural...

dataleverage.substack.com/p/evaluation...

I'm doing an experiment with microblogging directly to a GitHub repo that I can share across platforms...

Watch @audreyt.org give the announcement via @projectsyndicate.bsky.social

youtu.be/XkwqYQL6V4A?... (starts at 02:47:30)

But “open” model progress is likely to increase the visibility of the tension, for better or worse.
