Short answer: yes.

Slightly longer answer: nobody should be using it for legal research or writing.

www.abc.net.au/news/2025-11...

For the IMTFE records in Australia, plus our own Aust war crimes trials, see my finding aid at the National Archives of Australia www.naa.gov.au/help-your-re...

intersections.anu.edu.au/issue7/morri....

www.accc.gov.au/medi...

Now would be a good time to let them know what you think about AI inclusion in teaching and research.

#academic #history #JSTOR #AI

In response to a summary judgement motion, a TX plaintiff’s lawyer wanted to submit a witness affidavit that compared ... (cont.)

lnkd.in/ePra8vFB

#lawsky #law

oxfordre.com/internationa...

www.abc.net.au/news/2025-11...

www.thesaturdaypaper.com.au/share/21955/...

Claw every dollar back and ban Deloitte from govt consulting and tenders.

Better yet, sue them for breach of contract or prosecute them for fraud.

✍️ @paulkarp.bsky.social

www.theguardian.com/business/202...

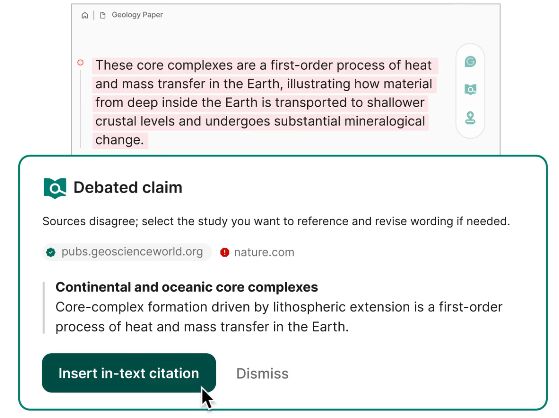

www.theverge.com/news/760508/...

How about Gen AI "hallucinations"? Is this what happens when you only read press releases from the AI boosters and don't stop to think about any of their claims?

www.smh.com.au/business/wor...
