![An example of a simple mechanism that can be modeled by a state machine is a turnstile.[4][5] A turnstile, used to control access to subways and amusement park rides, is a gate with three rotating arms at waist height, one across the entryway. Initially the arms are locked, blocking the entry, preventing patrons from passing through. Depositing a coin or token in a slot on the turnstile unlocks the arms, allowing a single customer to push through. After the customer passes through, the arms are locked again until another coin is inserted.

Considered as a state machine, the turnstile has two possible states: Locked and Unlocked.[4] There are two possible inputs that affect its state: putting a coin in the slot (coin) and pushing the arm (push). In the locked state, pushing on the arm has no effect; no matter how many times the input push is given, it stays in the locked state. Putting a coin in – that is, giving the machine a coin input – shifts the state from Locked to Unlocked. In the unlocked state, putting additional coins in has no effect; that is, giving additional coin inputs does not change the state. A customer pushing through the arms gives a push input and resets the state to Locked.

The turnstile state machine can be represented by a state-transition table, showing for each possible state, the transitions between them (based upon the inputs given to the machine) and the outputs resulting from each input:

The turnstile state machine can also be represented by a directed graph called a state diagram (above). Each state is represented by a node (circle). Edges (arrows) show the transitions from one state to another. Each arrow is labeled with the input that triggers that transition. An input that doesn't cause a change of state (such as a coin input in the Unlocked state) is represented by a circular arrow returning to the original state. The arrow into the Locked node from the black dot indicates it is the initial state.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:v2ro4utbnjevjbvwhxz3mmyb/bafkreicdj6pzxhfq7hy64myavnbs6bgm3metwjk3o74no4w4cq3cb2qygy@jpeg)
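The turnstile described in the image text above can be sketched as a small lookup table in Python. This is a minimal illustration, not a definitive implementation: the names `State`, `TRANSITIONS`, and `run` are made up for this example, and the table only encodes the four transitions the description spells out (coin and push in each of the two states).

```python
from enum import Enum


class State(Enum):
    LOCKED = "Locked"
    UNLOCKED = "Unlocked"


# (current state, input) -> next state, following the description:
# push does nothing while locked, coin unlocks,
# extra coins do nothing while unlocked, push re-locks.
TRANSITIONS = {
    (State.LOCKED, "coin"): State.UNLOCKED,
    (State.LOCKED, "push"): State.LOCKED,
    (State.UNLOCKED, "coin"): State.UNLOCKED,
    (State.UNLOCKED, "push"): State.LOCKED,
}


def run(inputs, start=State.LOCKED):
    """Feed a sequence of inputs to the turnstile and return the final state."""
    state = start  # Locked is the initial state in the description
    for symbol in inputs:
        state = TRANSITIONS[(state, symbol)]
    return state
```

For example, `run(["coin", "push"])` ends back in `State.LOCKED`, while `run(["coin", "coin"])` stays in `State.UNLOCKED`. An input other than `"coin"` or `"push"` raises `KeyError` in this sketch, since the description defines no other inputs.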

Y'all aren't thinking about technology; it's always just software. The computers have cooked brains so hard that real physical improvements get ignored if they don't involve chips and algorithms.

Goertzel is mentioned in the files nearly 800 times, including well after the Miami Herald investigation in 2018.

AI is a perverted way of taking a Library of Alexandria resource and grinding it up to allow individual people to still Behave and Interact like they are the ones driving all the activity, and "not relying on anyone".

steipete.me/posts/2026/o...

"Nature vs Nurture" is dumb because they aren't all that separable. The outside environment, which includes the nurturing, flows in and out of you all the time, and it affects gene expression regularly. You also alter the environment, which will then change you again afterwards.

You can’t trust chatbots.

jonlieffmd.com/book/the-sec...

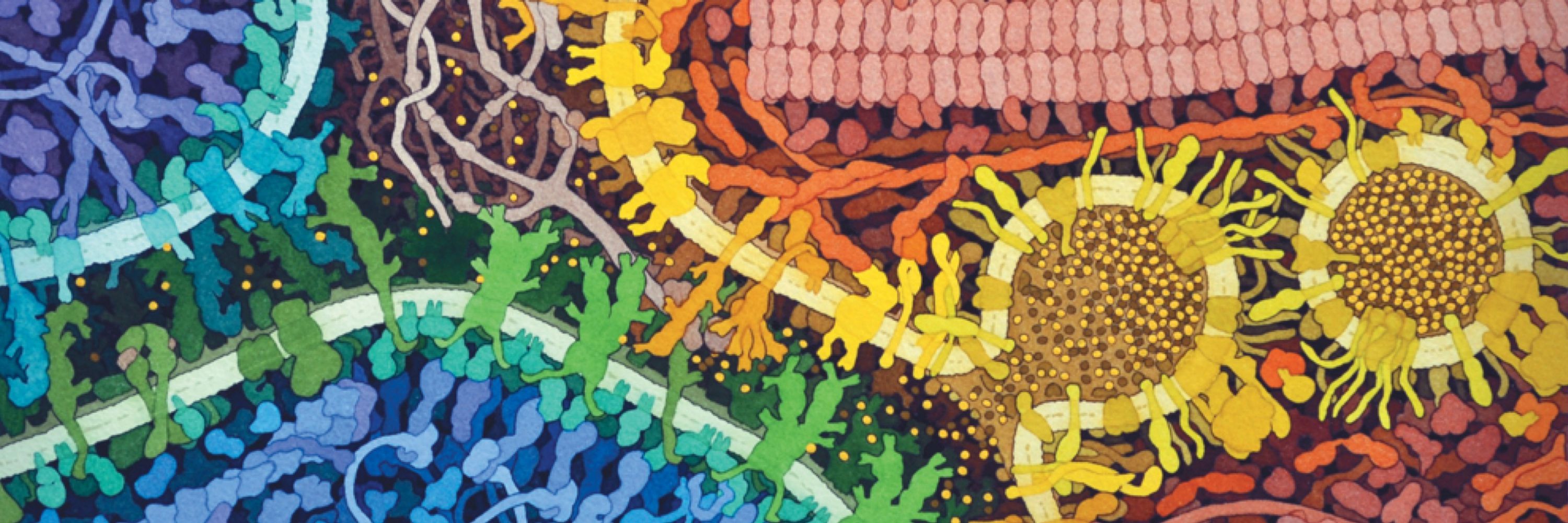

Meanwhile even lowly bacteria are making and deploying stuff like this:
