🧑💻 ML at Goog, prev. Waymo, fintech, NPR

🐺 Social media manager for @noonathehusky

https://www.jeffcarp.com

Waymo vehicle reacted safely.

Source: Dmitri Dolgov (Co-CEO at Waymo)

This repository is a curated collection of the most influential papers, code implementations, benchmarks, and resources related to Large Language Models (LLMs) Post-Training Methodologies.

github.com/mbzuai-oryx/...

Franklin St then Grove St, San Francisco

Robots après Rachmaninoff

Waymo solves the critical urban problems of not enough cars and too free flowing downtown traffic.

OP: @jeffcarp.bsky.social posted on threads

This book aims to demystify the science of scaling language models on TPUs: how TPUs work and how they communicate with each other, how LLMs run on real hardware, and how to parallelize your models during training and inference so they run efficiently at massive scale.

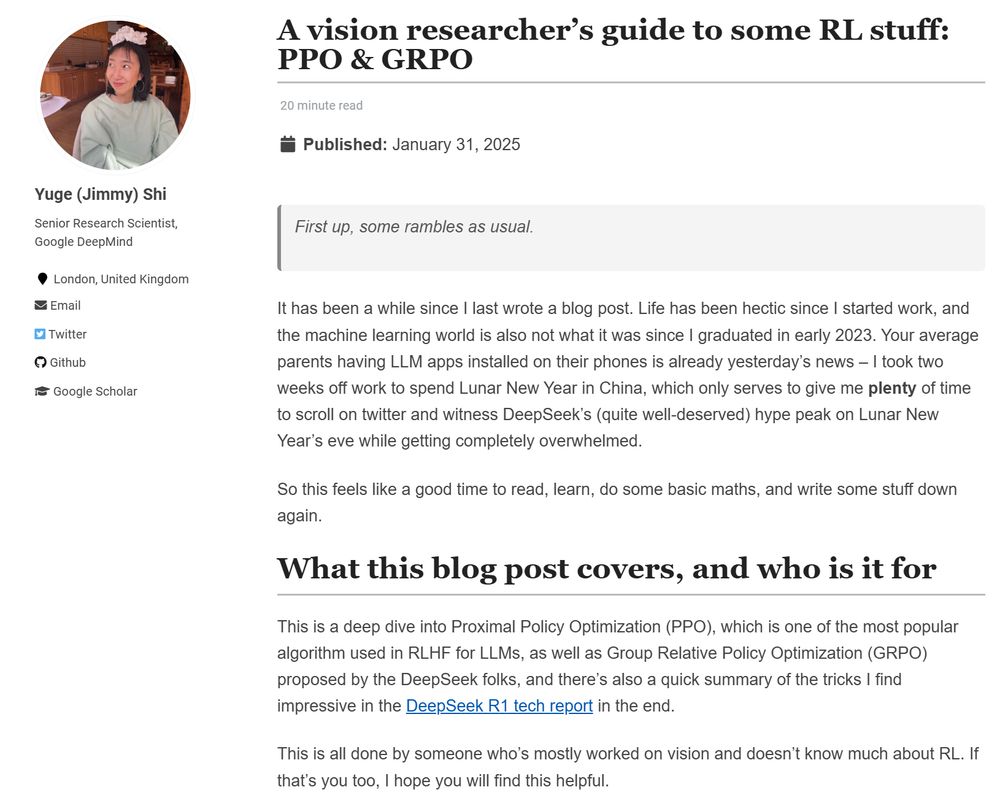

This is a deep dive into Proximal Policy Optimization (PPO), which is one of the most popular algorithms used in RLHF for LLMs, as well as Group Relative Policy Optimization (GRPO) proposed by the DeepSeek folks.

1+8+27+64+125+216+343+512+729 🥂 🎉 🎆 🎇 🧮
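For anyone checking the arithmetic, here is a minimal sketch that adds up the nine cubes in the post and compares the total with the square of 1+2+...+9. The variable names are illustrative and not from the original post.

```python
# Sum the cubes 1^3 .. 9^3, the terms listed in the post.
cubes = [n ** 3 for n in range(1, 10)]
total = sum(cubes)

# The sum of the first n cubes equals the square of the n-th triangular number.
triangular = sum(range(1, 10))

print(total, triangular ** 2)
```

Both printed values agree, which is the classic sum-of-cubes identity.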

research.google/programs-and...

Gemini 2.0 announcement: blog.google/technology/g...

youtu.be/ljbeFpOHvEA?...

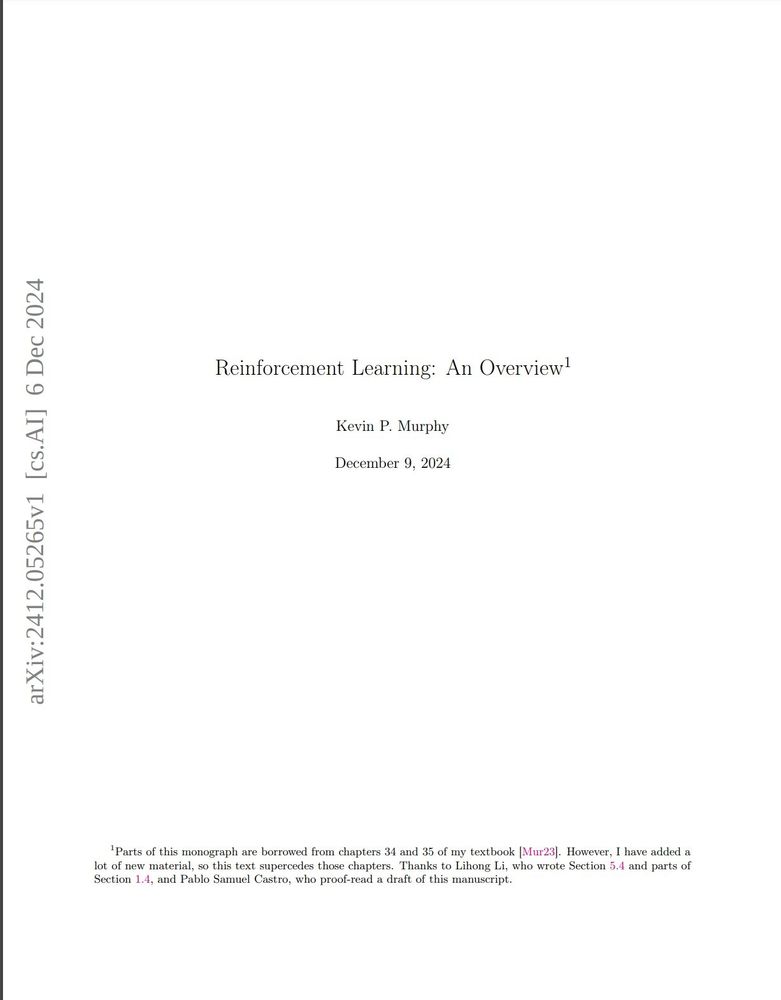

This manuscript gives a big-picture, up-to-date overview of the field of (deep) reinforcement learning and sequential decision making, covering value-based RL, policy-gradient methods, model-based methods, and various other topics.

arxiv.org/abs/2412.05265

Around this time last year, I was working on the Gemini launch, and it was exciting to have access to such models.

After one year I've learned a lot, and I'm still amazed by what can be done!

best feature: 2M context window 🤯

developers.googleblog.com/en/looking-b...

Does it still hold in the age of LLMs? 😎

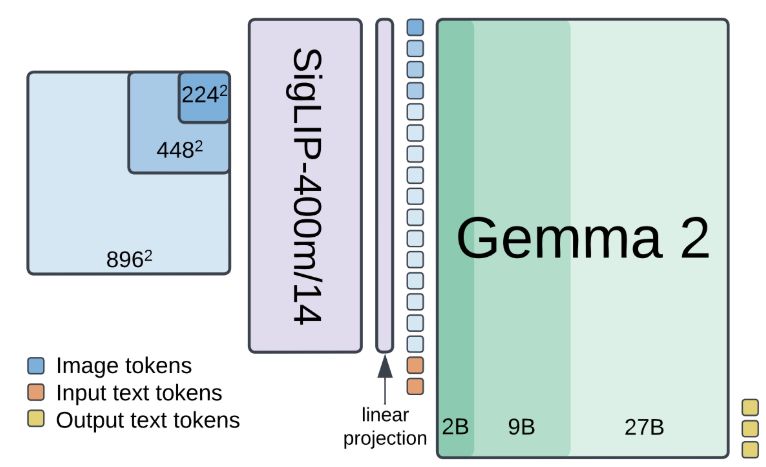

Congrats PaliGemma 2 team!

- CV and speech jump-started deep learning

- NLP innovated with transformers, which were then copied by CV and others

- Robotics and CV embraced RL early on

- NLP now discovers RL, first through RLHF and now for planning.
