![Figure 1

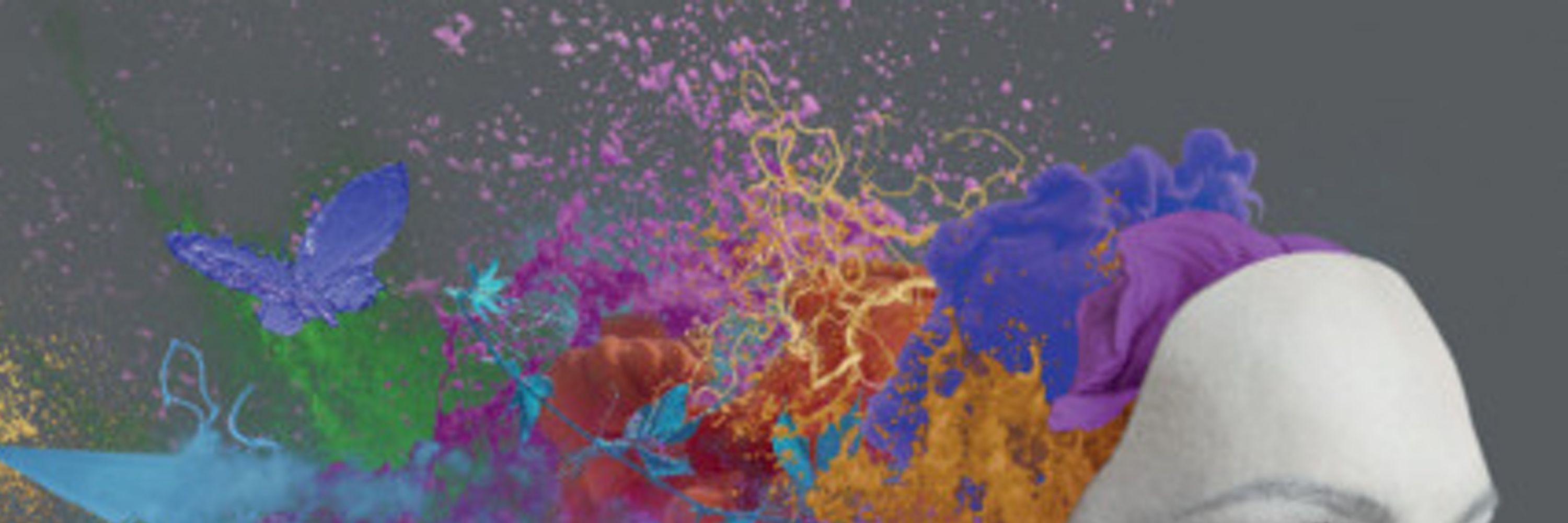

Illustration of why AI systems cannot realistically scale to human cognition within the foreseeable future: (a) Human cognitive capacities (such as reasoning, communication, problem solving, learning, concept formation, planning etc.) can handle unbounded situations across many domains, ranging from simple to complex. (b) Engineers create AI systems using machine learning from human data. (c) In an attempt to approximate human cognition a lot of data is consumed. (d) Making AI systems that approximate human cognition is intractable (van Rooij, Guest, et al., 2024), i.e., the required resources (e.g. time, data) grow prohibitively fast as input domains get more complex, leading to diminishing returns. (e) Any existing AI system is created in limited time (hours, months or years, not millennia or eons). Therefore, existing AI systems cannot realistically have the domain-general cognitive capacities that humans have. [Made with elements from freepik.com.]](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:24ngrqljhck7hpvbgrg7a23k/bafkreie5dbxbxpaszqwfbgne6pi7kf26im6chq45olvzxyyrg7eitq6uue@jpeg)

Iris van Rooij & Olivia Guest (2026). Combining Psychology with Artificial Intelligence: What Could Possibly Go Wrong? PsyArXiv osf.io/preprints/psyarxiv/aue4m_v2 @olivia.science

Our aim is to make these ideas accessible to, among others, psych students. Hope we succeeded 🙂

Negation: but this technology doesn't do the things you claim

kirby: the people writing these stories get funding to support narratives of power and inevitability, and that's what we should be scrutinizing

@olivia.science

fortune.com/2026/02/17/a...

bsky.app/profile/oliv...

"We have certainly been here before. Many many times in the past, companies — just like artificial intelligence (AI) companies now — have lied to us to sell us products."

1/

bsky.app/profile/oliv...

for me, this is a clear sign that i am not interested in engaging or working with you

The delicious one was the computer, because we ended up with folks using an abstracted version of what (mostly women) mathematicians did as a model of human behaviour. It’s all circular.

LLMs as the metaphor is maybe the worst yet.

This is not rocket science, it is solid principles from the psychology of perception.

6/

bsky.app/profile/oliv...

2/

1. rarehistoricalphotos.com/doctors-smok...

2. Academic Collaborations and Public Health: Lessons from Dutch Universities' Tobacco Industry Partnerships for Fossil Fuel Ties doi.org/10.5281/zeno...

1/n 🧵

bsky.app/profile/oliv...

2/

@marentierra.bsky.social @irisvanrooij.bsky.social

7/n

bsky.app/profile/oliv...

doi.org/10.1093/ereh...

It's not a coincidence, for example, that you've never heard of Margaret Cavendish from the 17th century. bsky.app/profile/iris...

6/n

Cavendish, Margaret (1666). Observations upon Experimental Philosophy.

(Edited by Eileen O'Neill, in 2012: www.cambridge.org/core/books/m...).

Will be compiling some quotes and thoughts over time. Pin📍or bookmark this thread if you want to follow along.

1/🧵

5/n

bsky.app/profile/oliv...

doi.org/10.1007/s421...

Let's just chill on doing it for the billionth time.

bsky.app/profile/oliv...

4/n

"although three men received the Nobel Prize for penicillin, women participated significantly in the team effort that brought the drug to medical usefulness."

www.jstor.org/stable/jj.55...

If you're reading and agreeing, get serious. There's a reason it's mostly women academics fighting AI.

3/n

bsky.app/profile/timn...
