Joint work with Abrek Er, @gozdegulsahin.bsky.social, @aykuterdem.bsky.social from KUIS AI Center.

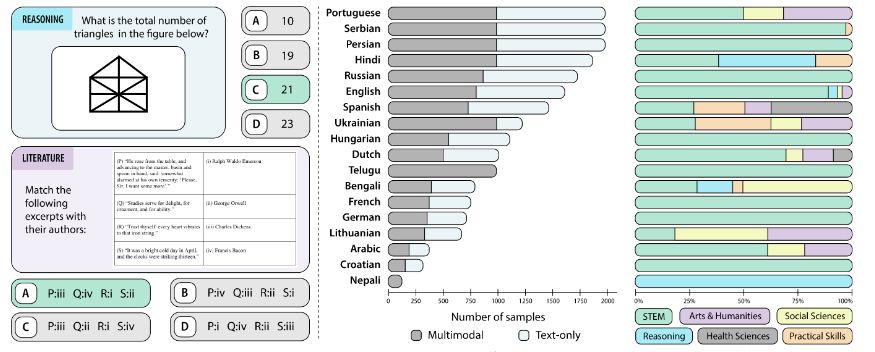

A comprehensive multimodal & multilingual benchmark for VLMs! It contains real questions from exams in different languages.

🌍 20,911 questions and 18 languages

📚 14 subjects (STEM → Humanities)

📸 55% multimodal questions
