It is based on Gemma-3-27B and runs in the administration's private cloud, on the Hércules supercomputer.

They could 'pretend' to be correctly aligned, because that is what is expected of them.

🤖🔍 Placement is hard! - objects vary in shape & placement modes (such as stacking, hanging, insertion)

AnyPlace predicts placement poses of unseen objects in the real world using only synthetic training data!

Read on👇

The results drop drastically in every case!

If you're interested in managing your followers and unfollows, send us a DM with your Gmail address, and we'll send you the instructions to join and download the app.

(Android only for now!)

I hope Claude 4 will clarify this soon.

as expected, it's not a "reasoning model", it's just a regular LLM that can reason as needed

i'm extremely excited to get my hands on this one. Anthropic has always placed usability over performance

techcrunch.com/2025/02/13/a...

There was an interpreter translating for the attendees and a stenotypist transcribing the interpreter for the TV subtitles. #Goya2025

A simultaneous (real-time) interpretation model that can even reproduce the speaker's accent, and it runs on a phone!

For now it only does French->English, but it is very promising:

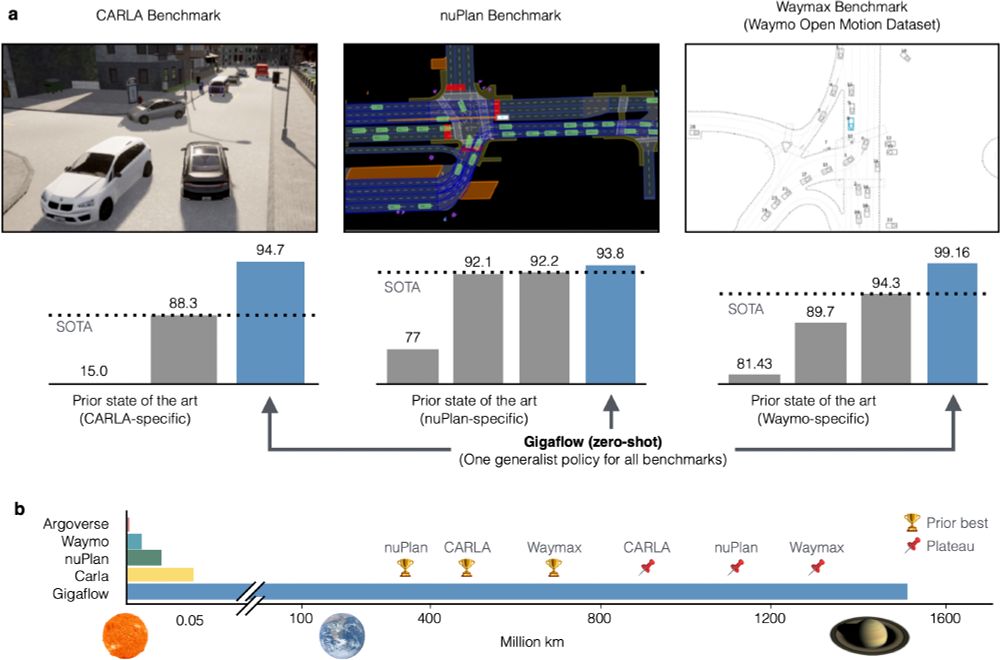

It is SOTA on every planning benchmark we tried.

In self-play, it goes 20 years between collisions.

📢 Introducing AV-Link ♾️

Bridging unimodal diffusion models in one self-contained framework to enable:

📽️ ➡️ 🔊 Video-to-Audio generation.

🔊 ➡️ 📽️ Audio-to-Video generation.

🌐: snap-research.github.io/AVLink/

⤵️ Results

@llamaindex.bsky.social released vdr-2b-multi-v1

> uses 70% fewer image tokens, yet outperforms other dse-qwen2 based models

> 3x faster inference with less VRAM 💨

> shrinkable with matryoshka 🪆

huggingface.co/collections/...

All made by me with Veo 2. The serious point is to note how impressive the "physics" of these models has become (also the consistency, like the spilled burrito across two different shots). There are some issues, obviously, but these are big advances.

There are lots of useful AI applications just waiting to be implemented, in all fields.

We release 🧙🏽♀️DRUID to facilitate studies of context usage in real-world scenarios.

arxiv.org/abs/2412.17031

w/ @saravera.bsky.social, H.Yu, @rnv.bsky.social, C.Lioma, M.Maistro, @apepa.bsky.social and @iaugenstein.bsky.social ⭐️

Sonnet: 600B

Flash: 20B

4o: 600B

4o-mini: 40B

oX: 3T

oX-mini: 600B
