Adjunct lecturer @ Australian Institute for ML. @aimlofficial.bsky.social

Occasionally cycling across continents.

https://www.damienteney.info

arxiv.org/abs/2505.20802

ReLU works well on avg, but you can find completely different activations. #cvpr2025

Do We Always Need the Simplicity Bias?

We take another step to understand why/when neural nets generalize so well. ⬇️🧵

With a standardized automated reviewer, Zochi’s papers score an average of 7.67 compared to other publicly available papers generated by AI systems that score between 3 and 4.

Any advice to get more ML and fewer bunnies/politics in my Bluesky feed?

But start it one *month* before the deadline.

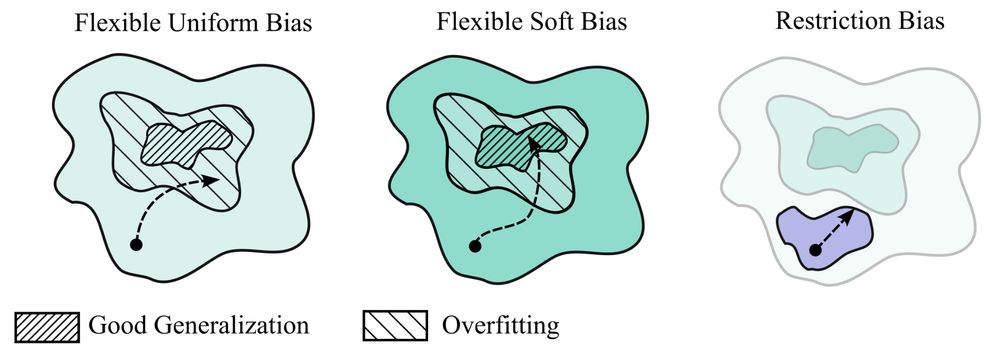

If you enjoyed our work on inductive biases (eg the Neural Redshift arxiv.org/abs/2403.02241), you'll love this paper that rigorously articulates "soft inductive biases" & how they explain supposedly-mysterious behaviors of neural nets.

What should we make of a techno-scientific innovation whose promotion in the media comes mostly from entrepreneurs, columnists, and politicians, while the scientists in the field are far more measured?

I’ll go first 👇🏻 (replying to myself like it’s normal)

If you feel that experiments are missing, ask yourself: are the additional results likely to affect the central message of the paper/nullify its main claims? If not, it's probably a nice suggestion (eg additional comparisons, datasets) but not a reason for rejection by itself.

I can't recall the last time I wanted to compare dozens of numbers in a table to two decimal places. A visualization makes it much clearer whether claimed differences are significant.
