The theme: 8 feathers for our 8 incredible Olympians. Let's cheer them on!

#IOAI2025 #TeamIndia #AI

Also, please support parakeet2. In my experience, it is genuinely better and faster than Whisper v3 large turbo.

In Word docs and LaTeX, it’s common.

For a highly technical paper in a field I know nothing about, I want a very simplified summary.

When I know I need to go deep, I use a summary to give the narrative along a specific axis.

meresophistry.substack.com/p/the-mental...

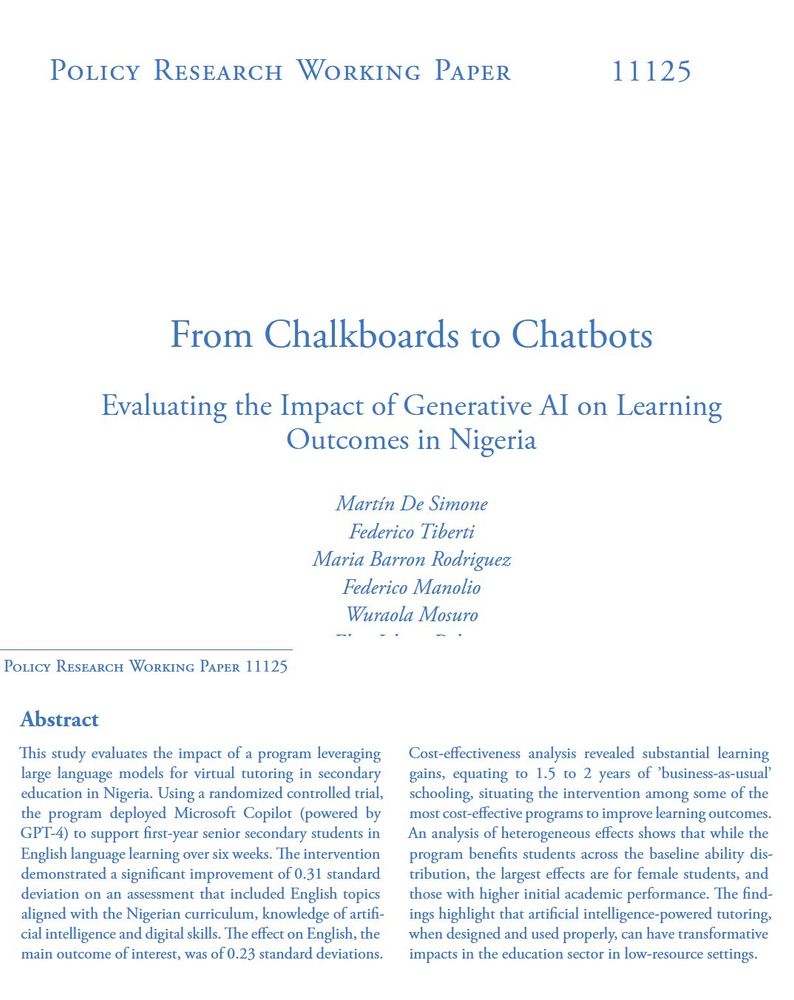

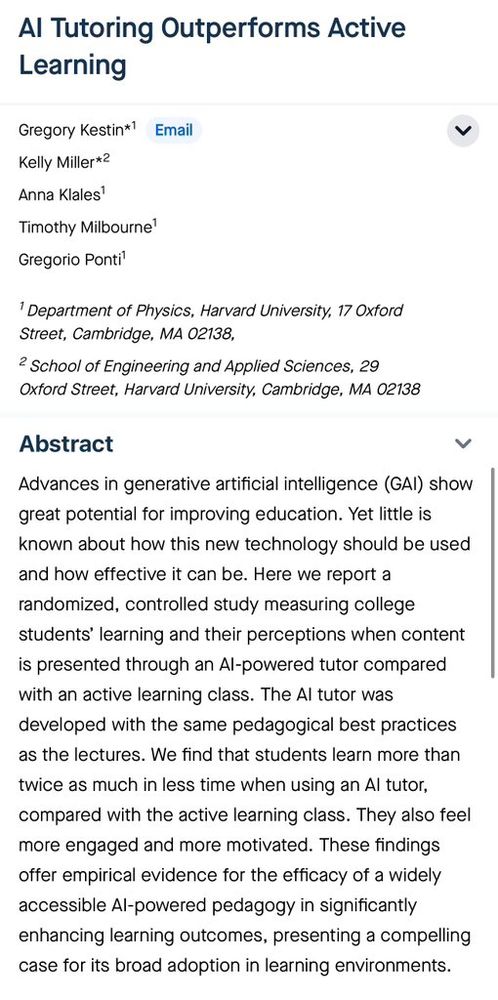

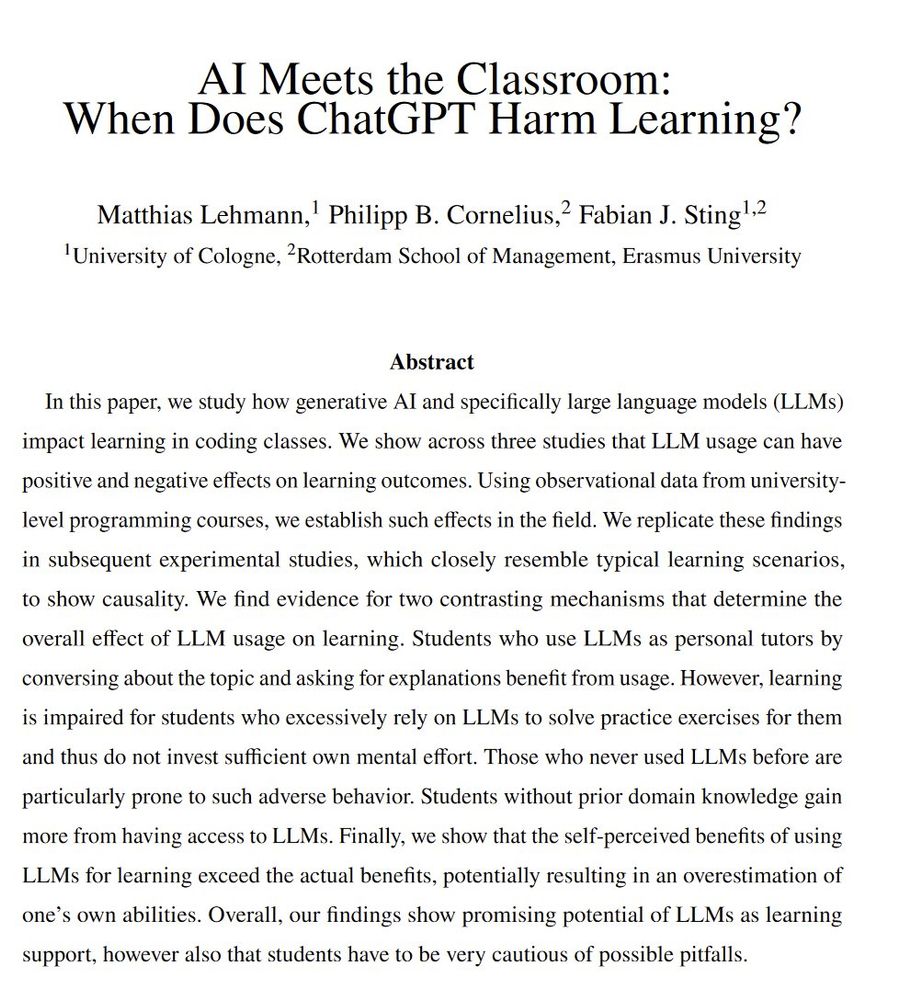

I grew up in the Indian education system with pretty poor teachers, so I taught myself a lot. I’d have loved something that made that easier.

Still early days.

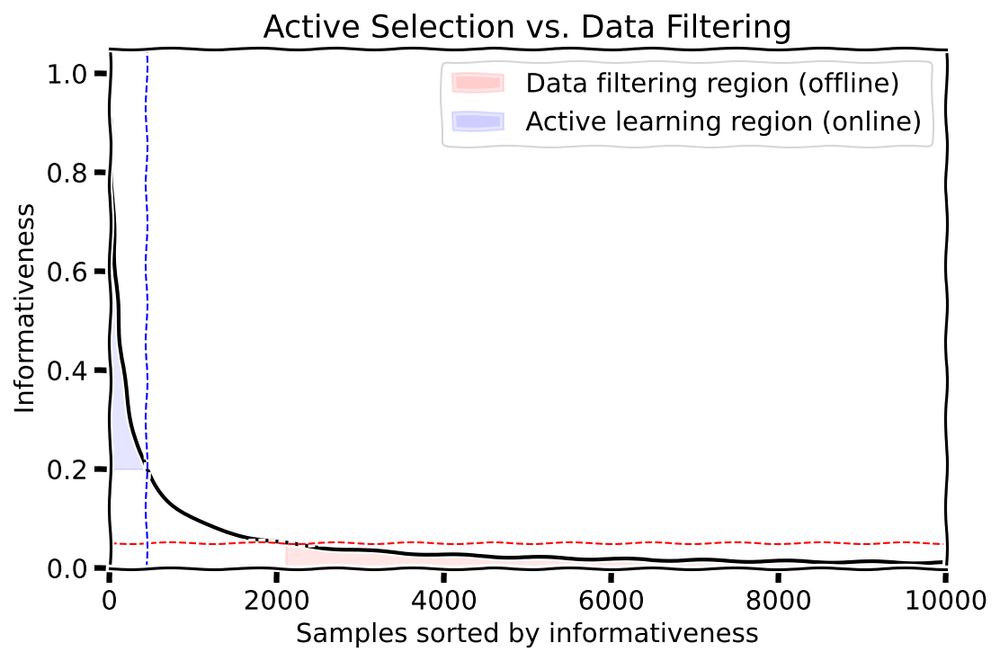

What is the fundamental difference between active learning and data filtering?

Well, obviously, the difference is that:

1/11

arxiv.org/abs/2504.12501

142-page report diving into the reasoning chains of R1. It spans 9 unique axes: safety, world modeling, faithfulness, long context, etc.
