I will be at EMNLP next week presenting this work on November the 7th! Reach out to me for any questions :))

Work done with my advisor, Mirella Lapata!

Preprint: arxiv.org/pdf/2505.14627

#EMNLP2025 #multimodallearning #scalableoversight #visionlanguagemodels #nlproc

arxiv.org

November 1, 2025 at 7:30 PM

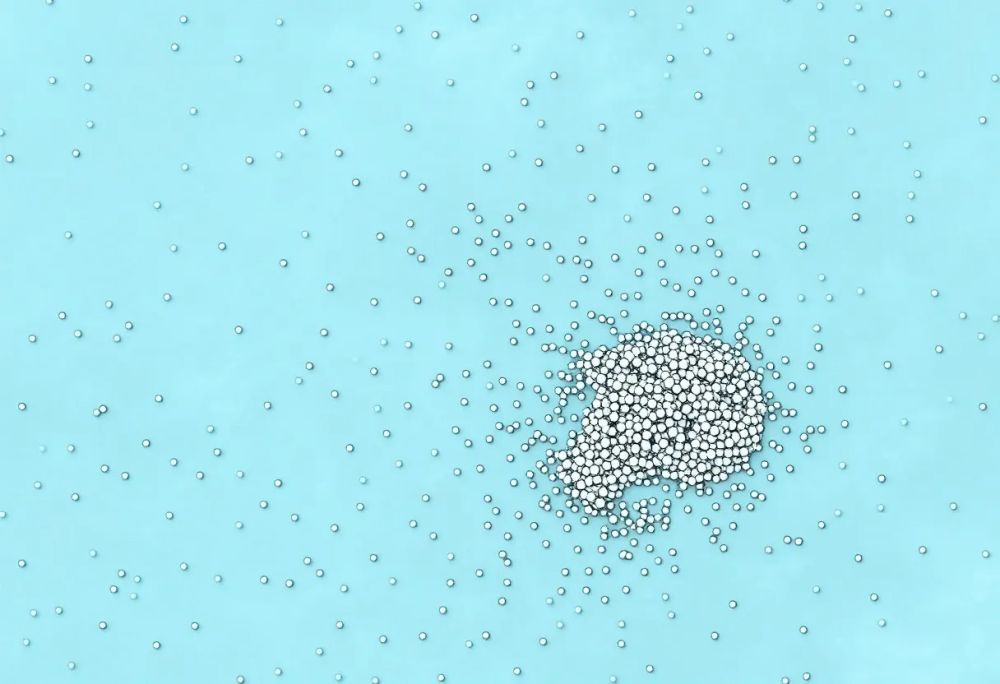

PerSense-D is a new benchmark dataset for personalized dense image segmentation, advancing AI accuracy in crowded visual environments. #visionlanguagemodels

New Dataset PerSense-D Enables Model-Agnostic Dense Object Segmentation

hackernoon.com

October 28, 2025 at 7:37 PM

PerSense's training-free, one-shot segmentation framework uses adaptive prompts, density maps, and VLMs to interpret dense images. #visionlanguagemodels

PerSense Delivers Expert-Level Instance Recognition Without Any Training

hackernoon.com

October 28, 2025 at 7:37 PM

PerSense is a model-agnostic, training-free framework for one-shot personalized instance segmentation in dense images, based on density and vision-language cues. #visionlanguagemodels

PerSense: A One-Shot Framework for Personalized Segmentation in Dense Images

hackernoon.com

October 28, 2025 at 7:37 PM

Reason-RFT improves visual reasoning in vision-language models, according to the announcement. Read more: https://getnews.me/reason-rft-improves-visual-reasoning-in-vision-language-models/ #reasonrft #visionlanguagemodels #visualreasoning

October 8, 2025 at 6:22 PM

MetaSpatial says it improves 3D spatial reasoning in vision-language models. Read more: https://getnews.me/metaspatial-improves-3d-spatial-reasoning-in-vision-language-models/ #metaspatial #3dspatial #visionlanguagemodels

October 8, 2025 at 6:17 PM

VLMCountBench shows that vision‑language models can count objects reliably when only one shape type (triangles, circles or squares) appears, but accuracy drops on scenes with multiple shapes. Read more: https://getnews.me/vision-language-models-struggle-with-compositional-counting/ #visionlanguagemodels #counting

October 8, 2025 at 1:15 AM

A new framework pairs vision‑language models with an action expert that refines sparse 3‑D waypoints into collision‑free motion plans, trained on synthetic and real point‑cloud data. https://getnews.me/vision-language-models-linked-to-action-expert-for-robot-planning/ #visionlanguagemodels #robotics

October 7, 2025 at 8:32 PM

DepthLM equips vision-language models with metric depth prediction, matching the accuracy of dedicated depth estimators, per the paper submitted on 1 Oct 2025. Read more: https://getnews.me/depthlm-achieves-accurate-metric-depth-with-vision-language-models/ #depthlm #visionlanguagemodels

October 3, 2025 at 11:54 AM

CoFFT, a training-free technique that debuted on 1 Oct 2025, lifts vision-language model accuracy by 3.1%–5.8% by iteratively sharpening visual focus during inference. https://getnews.me/cofft-boosts-vision-language-models-with-iterative-focused-reasoning/ #visionlanguagemodels #cofft

October 3, 2025 at 10:37 AM

Three new diagnostics—PSI, CMB, RoPE probe—show VLMs favor visual tokens; reducing visual token norms raised PSI and improved spatial reasoning. Read more: https://getnews.me/vision-language-models-restore-spatial-awareness-with-new-diagnostic-tools/ #visionlanguagemodels #spatialreasoning

October 3, 2025 at 7:26 AM

EDCT audits VLM explanation faithfulness on 120 OK‑VQA examples, showing many explanations are plausible but not causally linked to answers. Read more: https://getnews.me/explanation-driven-counterfactual-testing-boosts-faithfulness-of-vision-language-model-explanations/ #edct #visionlanguagemodels

October 2, 2025 at 7:25 PM

CADC reduces required training data to about 5% of the original set while still outperforming full-data models on multimodal benchmarks, the authors report. Read more: https://getnews.me/capability-attributed-data-curation-improves-vision-language-models/ #visionlanguagemodels #capabilitycuration

October 2, 2025 at 7:21 PM

This paper summarizes a comprehensive framework for typographic attacks, proving their effectiveness and transferability against Vision-LLMs like LLaVA #visionlanguagemodels

Future of AD Security: Addressing Limitations and Ethical Concerns in Typographic Attack Research

hackernoon.com

October 1, 2025 at 1:30 PM

This article presents an empirical study on the effectiveness and transferability of typographic attacks against major Vision-LLMs using AD-specific datasets. #visionlanguagemodels

Empirical Study: Evaluating Typographic Attack Effectiveness Against Vision-LLMs in AD Systems

hackernoon.com

October 1, 2025 at 1:15 PM

This article explores the physical realization of typographic attacks, categorizing their deployment into background and foreground elements #visionlanguagemodels

Foreground vs. Background: Analyzing Typographic Attack Placement in Autonomous Driving Systems

hackernoon.com

October 1, 2025 at 1:00 PM

GSM8K‑V adds a visual format to 1,319 grade‑school math problems. Gemini‑2.5‑Pro scores 95.22% on the text version but only 46.93% on the visual version, showing a gap for VLMs. https://getnews.me/gsm8k-v-shows-vision-language-models-lag-on-visual-math-problems/ #gsm8kv #visionlanguagemodels

October 1, 2025 at 3:46 AM

This article proposes a linguistic augmentation scheme for typographic attacks using explicit instructional directives. #visionlanguagemodels

Exploiting Vision-LLM Vulnerability: Enhancing Typographic Attacks with Instructional Directives

hackernoon.com

September 30, 2025 at 7:30 PM

This article details the multi-step typographic attack pipeline, including Attack Auto-Generation and Attack Augmentation. #visionlanguagemodels

Methodology for Adversarial Attack Generation: Using Directives to Mislead Vision-LLMs

hackernoon.com

September 30, 2025 at 7:00 PM

TaSe splits queries into object, attribute and relation parts, then hierarchically recombines them, delivering a significant 24% boost on the OmniLabel benchmark. Read more: https://getnews.me/disentangling-text-for-better-language-based-object-detection/ #visionlanguagemodels #objectdetection

September 30, 2025 at 6:39 PM

This article analyzes the critical safety trade-off of integrating Vision-LLMs into autonomous driving (AD) systems. #visionlanguagemodels

The Dual-Edged Sword of Vision-LLMs in AD: Reasoning Capabilities vs. Attack Vulnerabilities

hackernoon.com

September 30, 2025 at 5:00 PM

A study tested five vision‑language models on 957 color samples and found high accuracy for prototypical colors but lower performance on non‑prototypical shades across nine languages. https://getnews.me/vision-language-models-ability-to-name-colors-evaluated/ #visionlanguagemodels #colornaming

September 29, 2025 at 4:00 PM

Neural-MedBench, a compact neurology benchmark combining MRI, EHR data and clinical notes, reveals that the performance of state-of-the-art vision-language models drops sharply on reasoning tasks. Read more: https://getnews.me/neural-medbench-highlights-gaps-in-ai-clinical-reasoning/ #neuralmedbench #visionlanguagemodels

September 29, 2025 at 1:11 PM

VLM2VLA treats robot actions as language tokens and fine‑tunes vision‑language models with LoRA, keeping VQA ability while succeeding in 800 real‑world robotic trials. Read more: https://getnews.me/fine-tuning-vision-language-models-to-action-models-without-forgetting/ #visionlanguagemodels #vla

September 29, 2025 at 12:32 PM

StockGenChaR pairs high‑resolution stock chart images with expert narratives across asset classes and chart styles, providing a benchmark for vision‑language models. https://getnews.me/stockgenchar-dataset-advances-ai-captioning-of-stock-charts/ #stockgenchar #visionlanguagemodels

September 25, 2025 at 2:36 PM
