https://chaufanglin.github.io/

In contrast, AVEC shows a stronger use of visual information, with a significant negative correlation, especially in noisy conditions.

[7/8] 🧵

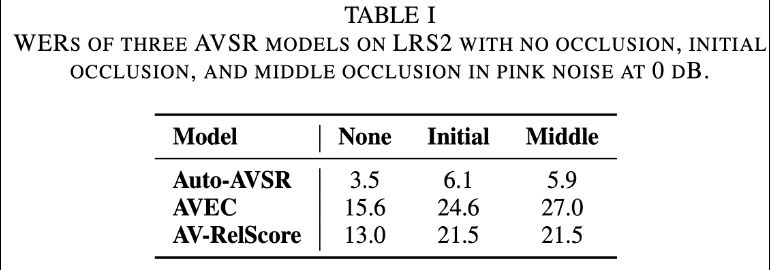

Auto-AVSR & AV-RelScore are equally affected by initial & middle occlusions, while AVEC is more impacted by middle occlusion.

Unlike humans, AVSR models do not depend on initial visual cues.

[5/8] 🧵

This metric quantifies the benefit of the visual modality in reducing WER compared to the audio-only system. [3/n] 🧵
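
The post does not spell out the formula, so as a rough illustration only: one common way to express how much a visual stream helps is the relative WER reduction against the audio-only system. The function name and the numbers below are hypothetical, and this may not be the exact metric the thread refers to.

```python
def relative_wer_reduction(wer_audio: float, wer_av: float) -> float:
    """Fraction of the audio-only WER that is removed by adding video.

    Assumed definition (not taken from the thread):
        (WER_audio - WER_av) / WER_audio
    Positive values mean the visual stream helped; negative values mean
    the audio-visual system did worse than audio alone.
    """
    if wer_audio <= 0:
        raise ValueError("audio-only WER must be positive")
    return (wer_audio - wer_av) / wer_audio


# Hypothetical WERs at one noise level: 20% audio-only, 15% audio-visual.
gain = relative_wer_reduction(0.20, 0.15)
print(f"relative WER reduction: {gain:.2%}")
```

Normalizing by the audio-only WER keeps the score comparable across noise levels, where absolute WERs differ widely.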
