@expsyanz.bsky.social #EPC2025

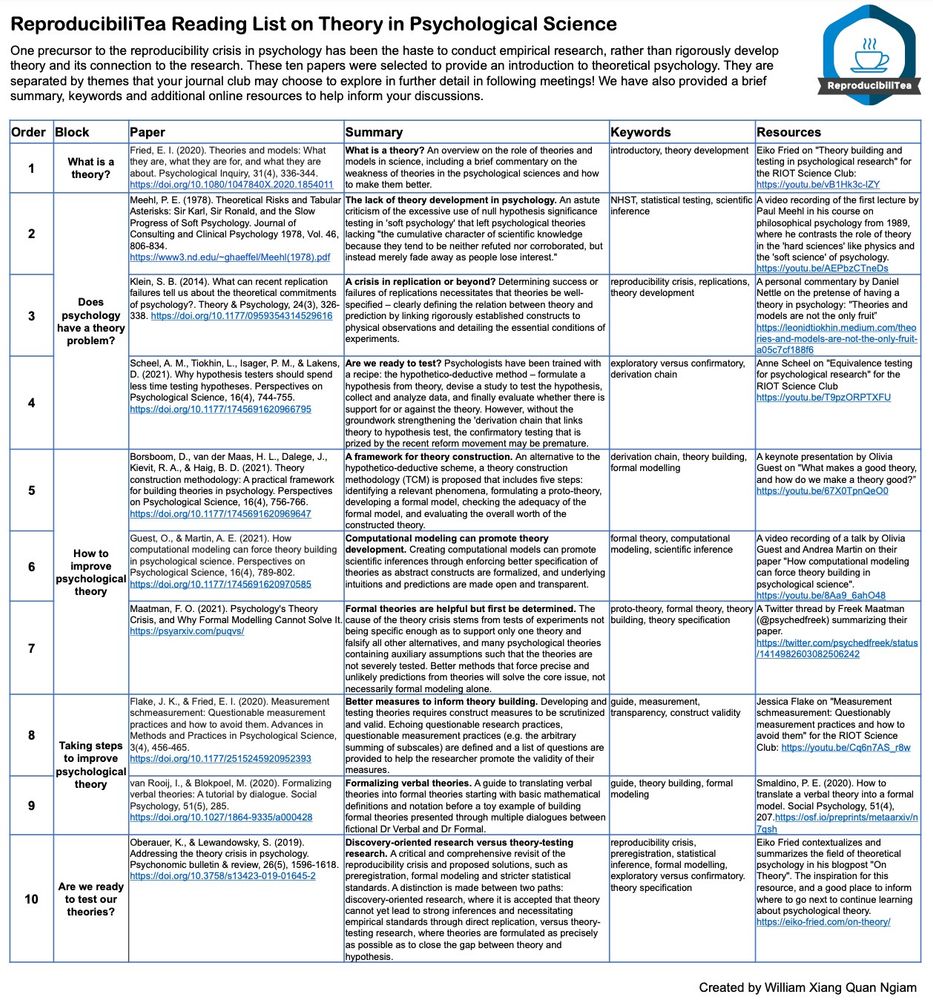

PDF of this reading list here: williamngiam.github.io/reading_list...

Check out my Veggie ID! You can create yours at sophie006liu.github.io/vegetal/
