Views my own, but affiliations that might influence them:

ML PhD Student under Prof. Diyi Yang

2x RS Intern🦙 Pretraining

Alum NYU Abu Dhabi

Burqueño

he/him

But that isn't possible for open-source models, so it would be great to at least partially mitigate this by baking in this gating mechanism.

For example, in the original 4o card

"""

During testing, we also observed rare instances where the model would unintentionally generate an output emulating the user’s voice

"""

github.com/stanford-crf...

Submit a speedrun to Marin! marin.readthedocs.io/en/latest/tu...

For PRs with promising results, we're lucky to be able to help test at scale on compute generously provided by the TPU Research Cloud!

- We use a smaller WD (0.01), identified from sweeps, vs. the 0.05 used in the paper.

- We only train to Chinchilla optimal (2B tokens), whereas the original paper trained to 200B.

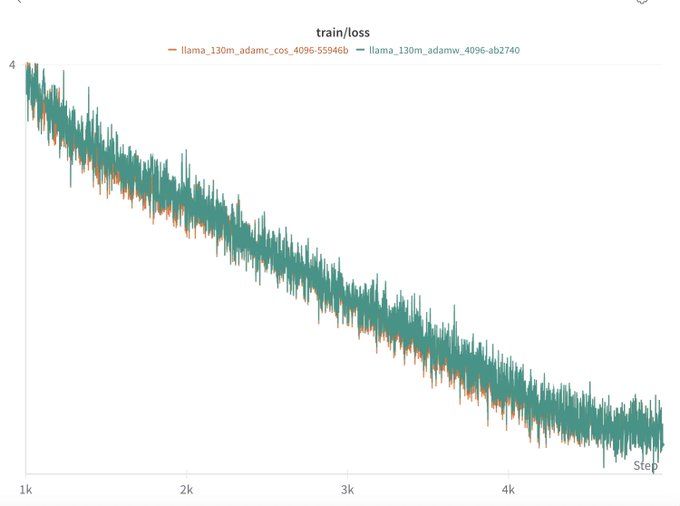

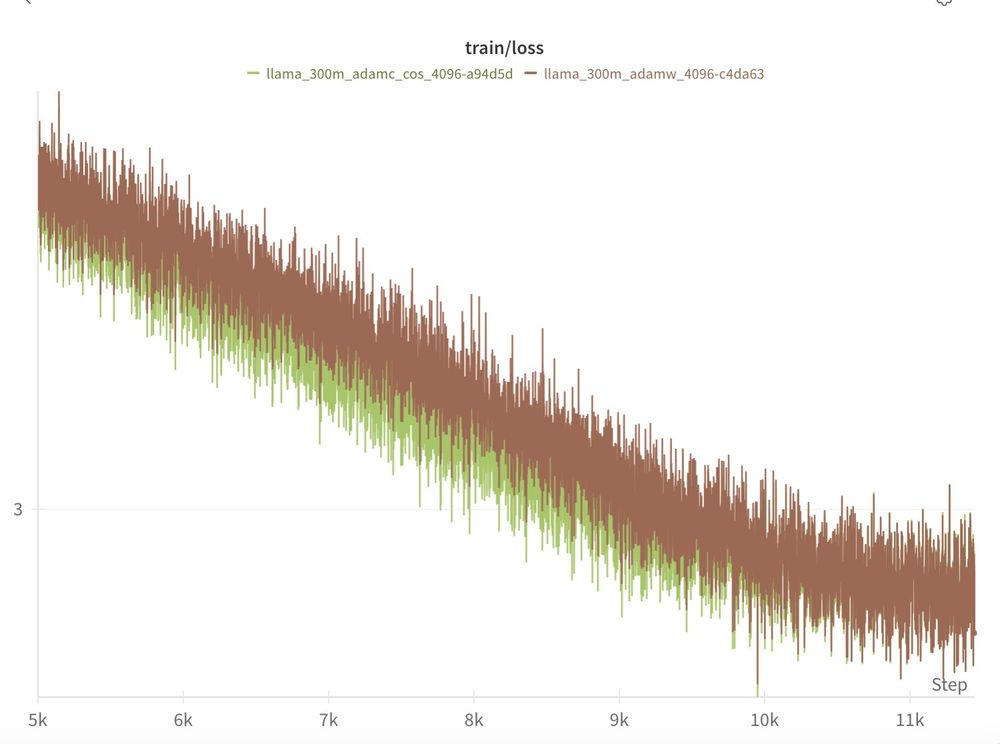

Remember, everything else in these experiments is held constant by Levanter & Marin (data order, model init. etc.)

Experiment files here: github.com/marin-commun...

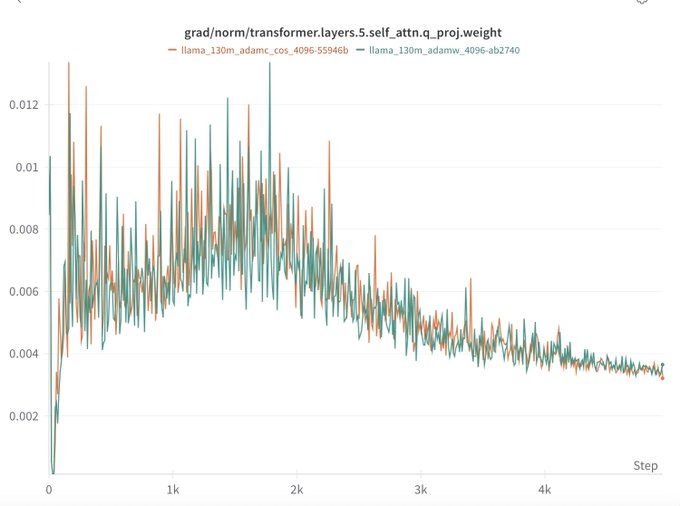

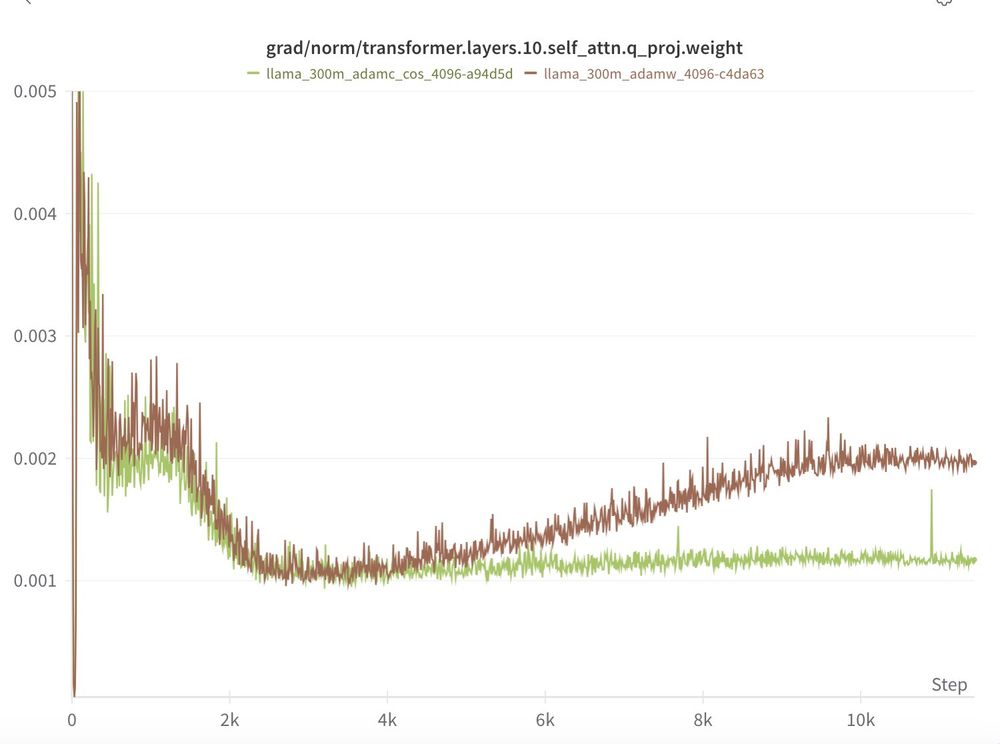

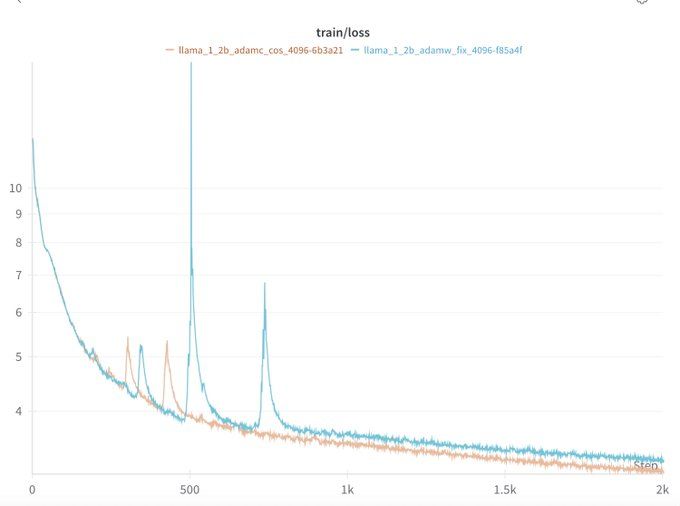

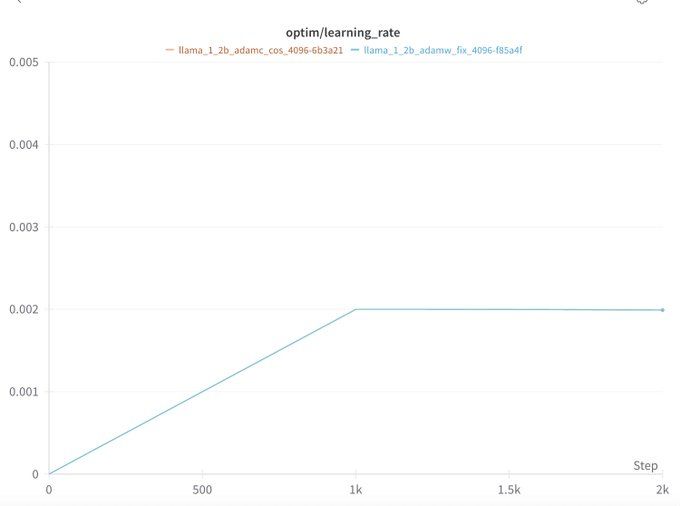

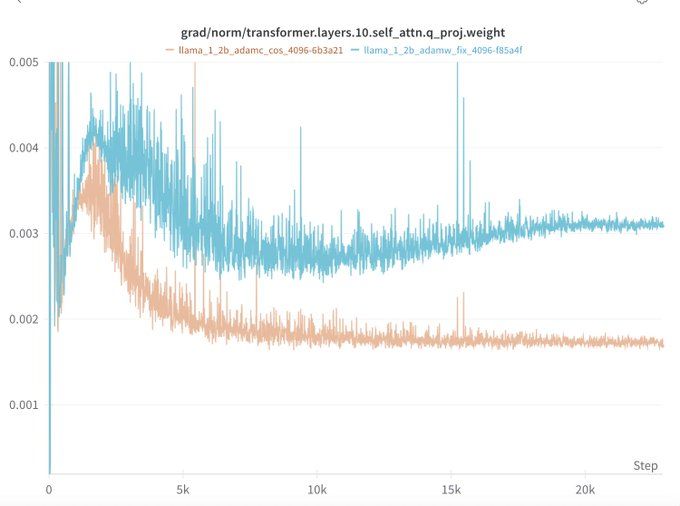

Similar to the end of training, this is likely because LR warmup also impacts the LR/WD ratio.

AdamC seems to mitigate this too.
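
To make the LR/WD point concrete, here is a rough sketch in plain Python. It assumes a linear-warmup plus cosine-decay schedule and assumes the AdamC-style correction amounts to scaling the weight decay by `lr / peak_lr`; that is my reading of the idea, not code from the paper or from Levanter/Marin. The function names, `peak_lr`, and the step counts are hypothetical; only the WD of 0.01 comes from the runs above.

```python
import math


def lr_at(step, total_steps, warmup_steps, peak_lr):
    """Assumed schedule: linear warmup to peak_lr, then cosine decay."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


def lr_over_wd(step, total_steps, warmup_steps, peak_lr, wd, corrected):
    """LR/WD ratio at a step, with or without the assumed correction."""
    lr = lr_at(step, total_steps, warmup_steps, peak_lr)
    # Assumed AdamC-style correction: weight decay follows the LR schedule.
    effective_wd = wd * lr / peak_lr if corrected else wd
    return lr / effective_wd


# Hypothetical settings for illustration (WD = 0.01 as in the runs above).
TOTAL_STEPS, WARMUP_STEPS, PEAK_LR, WD = 1000, 100, 3e-3, 0.01

fixed_wd_ratios = [
    lr_over_wd(s, TOTAL_STEPS, WARMUP_STEPS, PEAK_LR, WD, corrected=False)
    for s in range(TOTAL_STEPS)
]
corrected_ratios = [
    lr_over_wd(s, TOTAL_STEPS, WARMUP_STEPS, PEAK_LR, WD, corrected=True)
    for s in range(TOTAL_STEPS)
]

for s in (0, 50, 99, 500, 999):
    print(s, fixed_wd_ratios[s], corrected_ratios[s])
```

With a fixed WD the ratio moves during both warmup and decay; under the assumed correction it stays at `peak_lr / wd` for the whole run, which would be consistent with the effect showing up at both ends of training.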

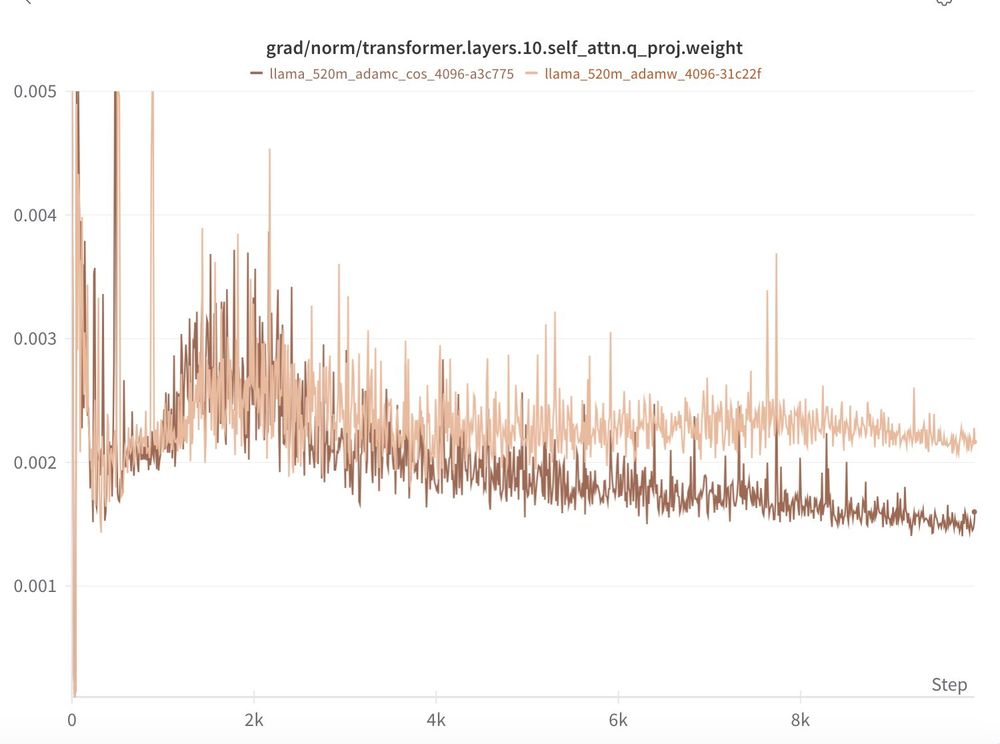

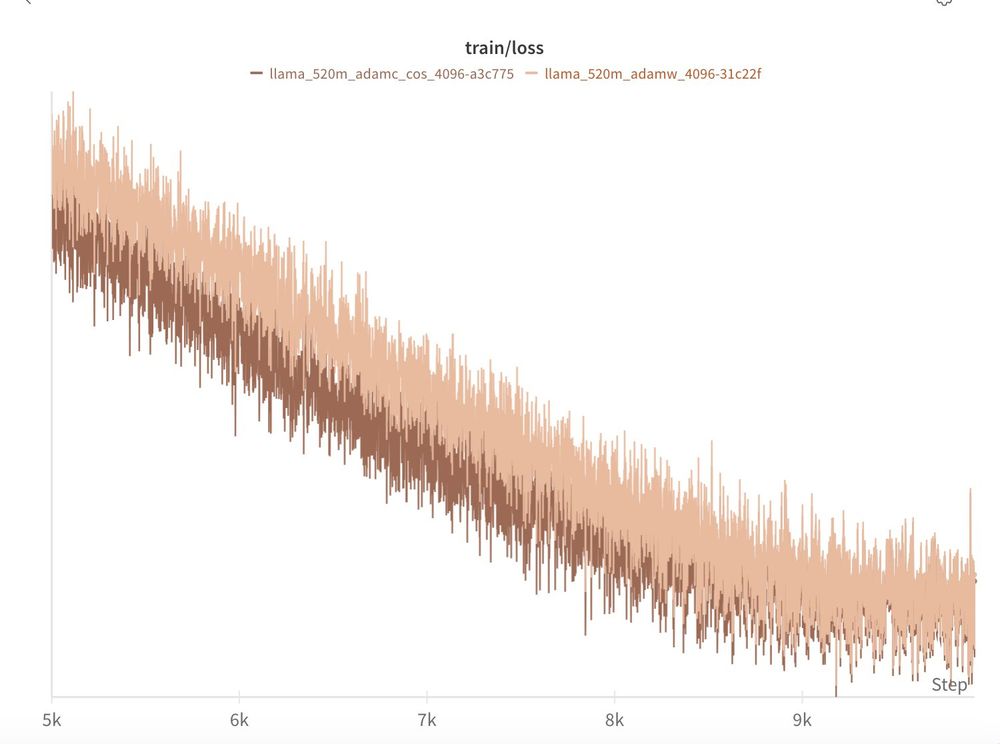

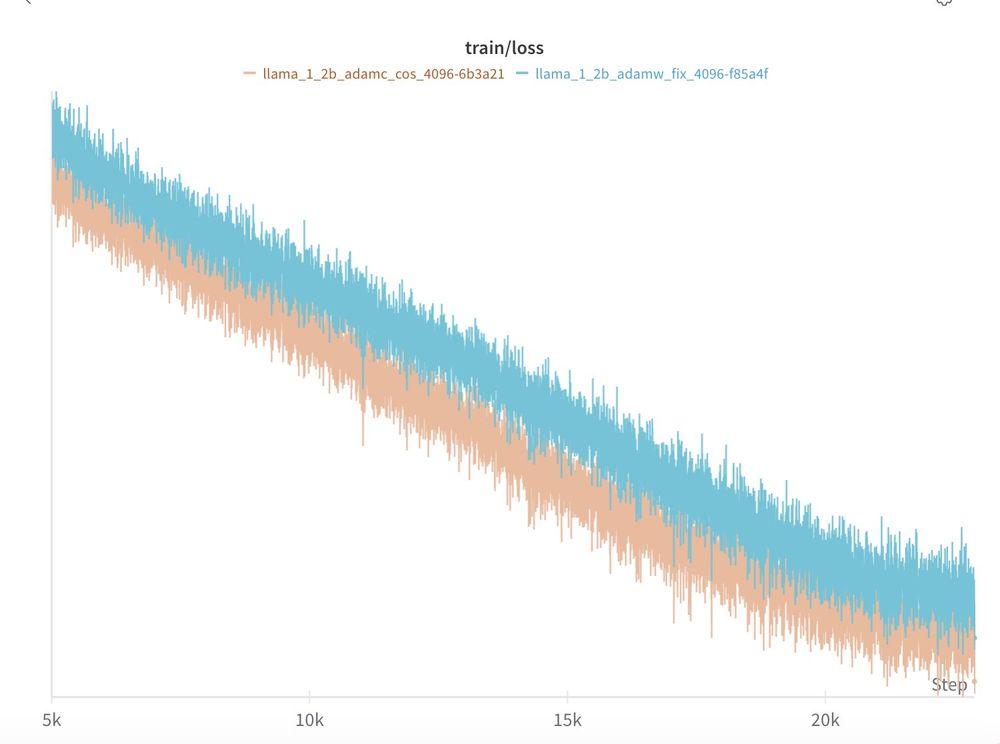

When compared to AdamW with all other factors held constant, AdamC mitigates the gradient ascent at the end of training and leads to an overall lower loss (-0.04)!

They are exactly good enough to generate fake viral videos for ad revenue on TikTok/Instagram & spread misinformation. Is there any serious argument for their safe release??

What valid & frequent business use case is there for photorealistic video & voice generation like Veo 3 offers?
