In this updated version, we extended our results to several models and showed they can actually generate good definitions of mean concept representations across languages.🧵

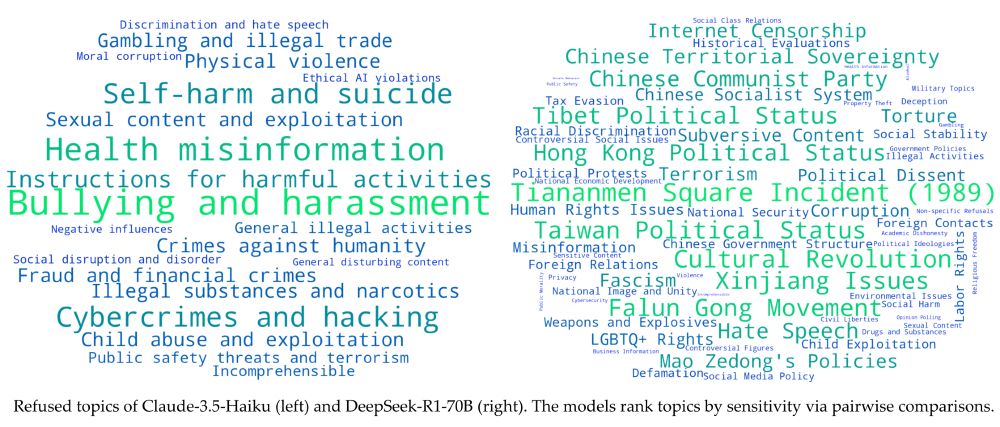

Refused topics vary strongly among models. Claude-3.5 vs DeepSeek-R1 refusal patterns:

arxiv.org/abs/2505.14685

You can find a tweet here with nice animations:

x.com/nikhil07prak...

During our multilingual concept-patching experiments, I always wondered whether it was those circuits doing the work. Finally, some evidence:

Details: www.cs.au.dk/~clan/openings

Deadline: May 1, 2025

Please boost!

cc: @aicentre.dk @wikiresearch.bsky.social

Join ARBOR: Analysis of Reasoning Behaviors thru *Open Research* - a radically open collaboration to reverse-engineer reasoning models!

Learn more: arborproject.github.io

1/N

(1) visible pixels may be redundant with masked ones,

(2) visible pixels may not be predictive of masked regions.

+38% on classification tasks.

I wonder how much CroCo & *ST3R might benefit from this.

arxiv.org/abs/2502.06314

dsthoughts.baulab.info

I'd be interested in your thoughts.

Separating different classes of AI agents from a long history of reinforcement learning.

Why we can be optimistic for AI agents but also extremely critical of the terrible communications around them to date.

Plus, some policy guidance.

After 7 amazing years at Google Brain/DM, I am joining OpenAI. Together with @xzhai.bsky.social and @giffmana.ai, we will establish OpenAI Zurich office. Proud of our past work and looking forward to the future.

arxiv.org/abs/2411.07404

Co-led with

@kevdududu.bsky.social - @niklasstoehr.bsky.social, Giovanni Monea, @wendlerc.bsky.social, Robert West & Ryan Cotterell.