vatniksoup.com/en/support-o...

Yes, our future’s in good hands.

24/24

23/24

bsky.app/profile/vatn...

22/24

21/24

20/24

19/24

18/24

17/24

16/24

15/24

14/24

bsky.app/profile/vatn...

13/24

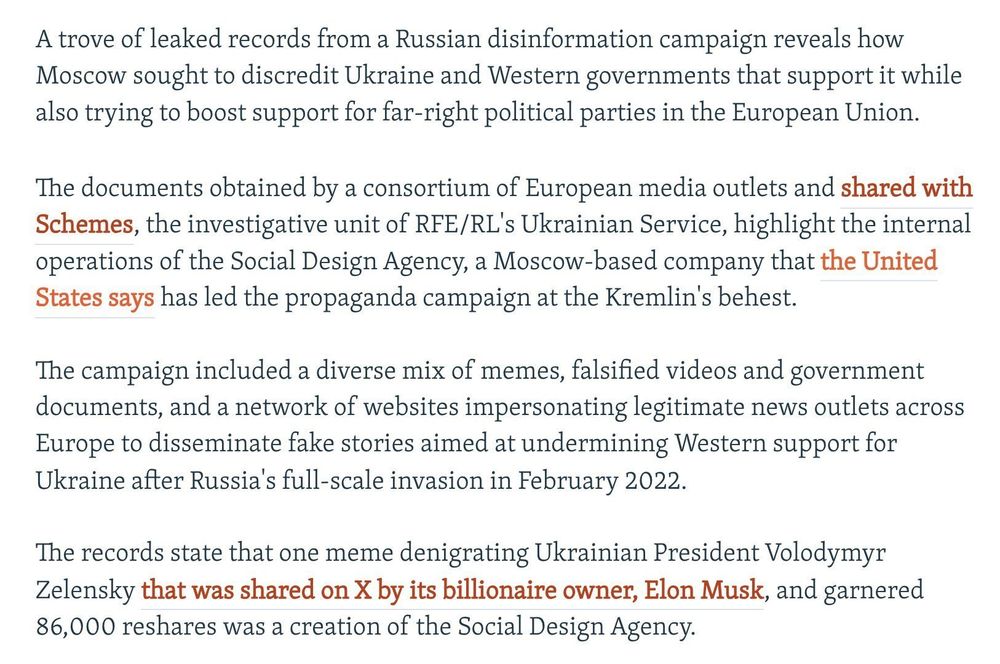

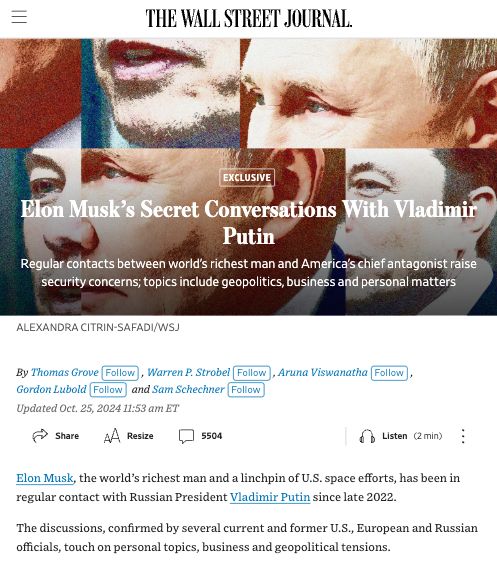

Even if he had good intentions, all of this would still be highly problematic.

12/24

11/24

10/24

9/24

8/24

vatniksoup.com/en/soups/279/

7/24

bsky.app/profile/vatn...

6/24

5/24

4/24

3/24

2/24

youtu.be/LPZh9BOjkQs
