Personal website: tomeveritt.se

In a new paper, we use subtasks to assess capabilities. Perhaps surprisingly, LLMs often fail to fully employ their capabilities, i.e. they are not fully *goal-directed* 🧵

arxiv.org/abs/2504.118...

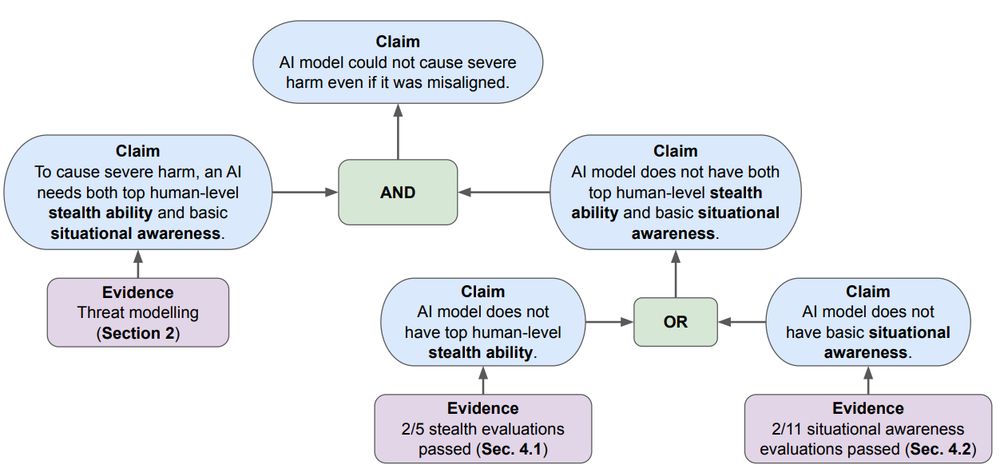

Sadly, NO. We show that training on *just the output* can still cause models to hide unwanted behavior in their chain-of-thought. MATS 8.0 Team Shard presents: a 🧵

🧵

My latest paper tries to solve a longstanding problem afflicting fields such as decision theory, economics, and ethics — the problem of infinities.

Let me explain a bit about what causes the problem and how my solution avoids it.

1/N

arxiv.org/abs/2509.19389

2/3

We find early signs of steganographic capabilities in current frontier models, including Claude, GPT, and Gemini. 🧵

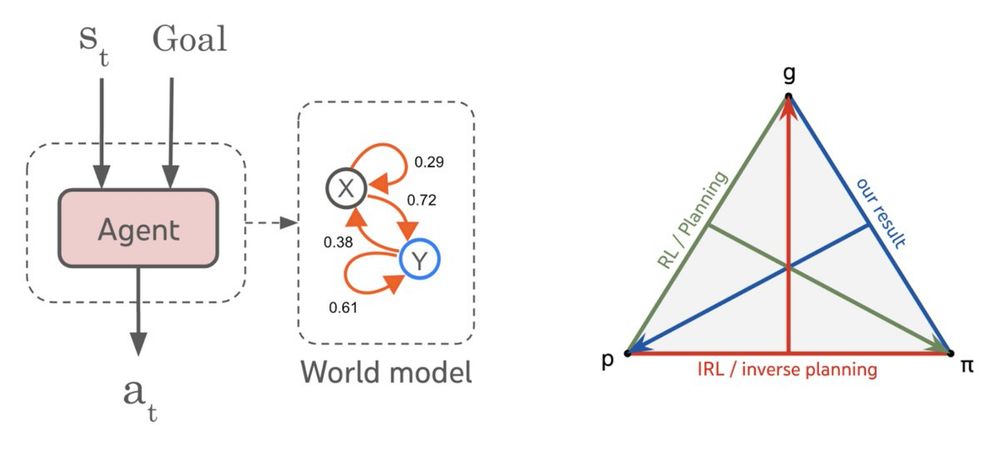

Our new #ICML2025 paper tackles this question from first principles and finds a surprising answer: agents _are_ world models… 🧵

arxiv.org/abs/2506.01622

LLMs show it's perfectly possible

www.ted.com/talks/yoshua...

www.youtube.com/watch?app=de...

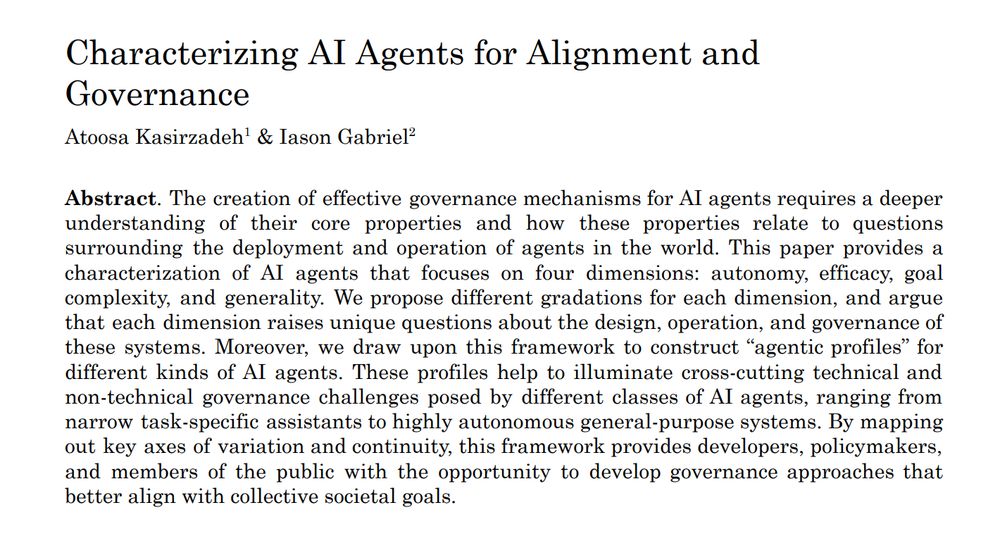

Great paper helping us understand the different possibilities

"the 4-minute mark for GPT-4 is completely arbitrary; you could probably put together one reasonable collection of word counting ... tasks with average human time of 30 seconds and another ... of 20 minutes where GPT-4 would hit 50% accuracy on each"

Ernest Davis and I deconstruct it at my newsletter: garymarcus.substack.com/p/the-latest...

dl.acm.org/doi/full/10....

Perhaps the same is true for AI agents more generally?

Expert forecasters filter for the events that actually matter (not just noise) and forecast how likely they are to affect, e.g., war, pandemics, and frontier AI.

Highly recommended!

Our paper in FAccT '25: How geopolitical rivals can cooperate on AI safety research.

arxiv.org/abs/2504.12914

We identified a common root cause to many safety vulnerabilities and pointed out some paths forward to address it!

arxiv.org/abs/2504.05259

No time to read 145 pages? Check out the 10-page extended abstract at the beginning of the paper.
