@tlmnhut.bsky.social show: supervised pruning of a DNN’s feature space better aligns with human category representations, selects distinct subspaces for different categories, and more accurately predicts people’s preferences for GenAI images.

doi.org/10.1145/3768...

See you there!

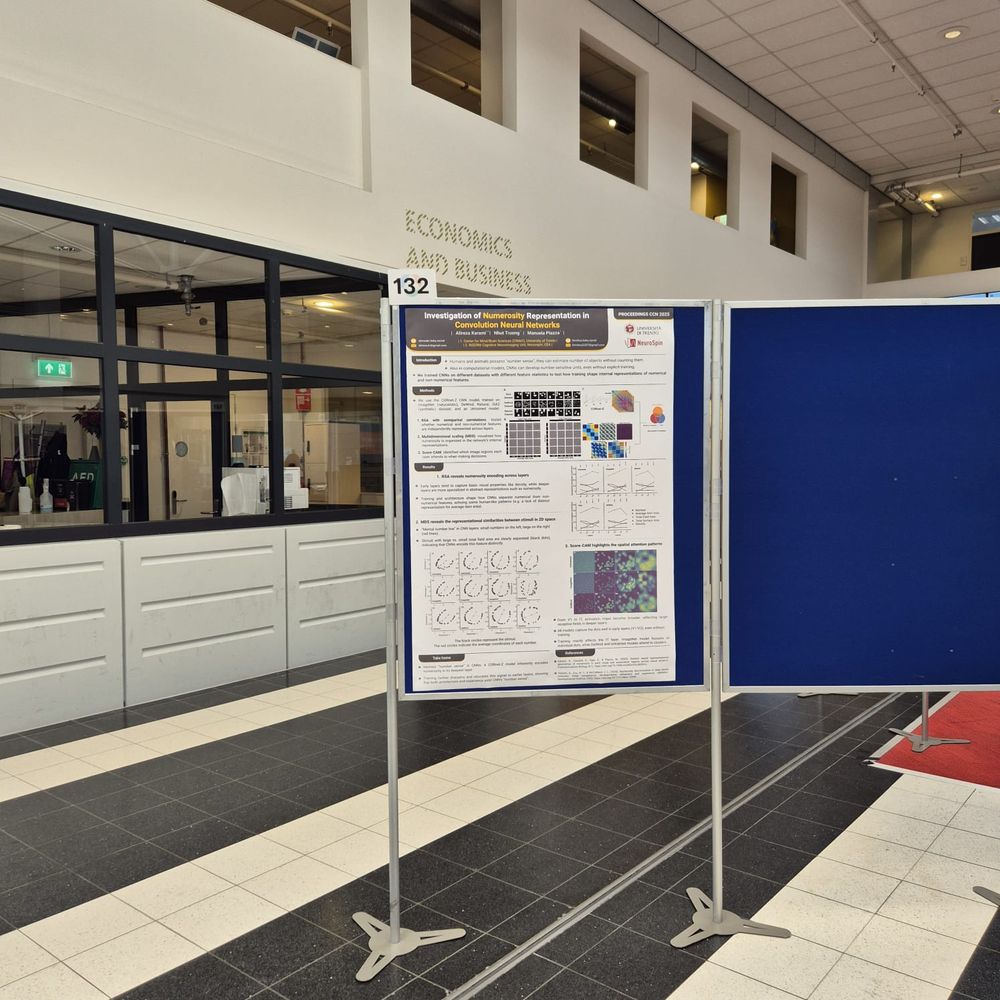

1️⃣ From my PhD with @manpiazza.bsky.social — accepted in the CCN proceedings. My young collaborator @tlmnhut.bsky.social will be presenting it. It’s about numerosity representation in CNNs.

📄 tinyurl.com/yc2dyhm3

arxiv.org/abs/2508.00043

www.biorxiv.org/content/10.1...

🧵 1/n

Come visit our poster!

#NeurIPS2024 #BehavioralML #Numerosity #DeepLearning

arxiv.org/abs/2409.16292
