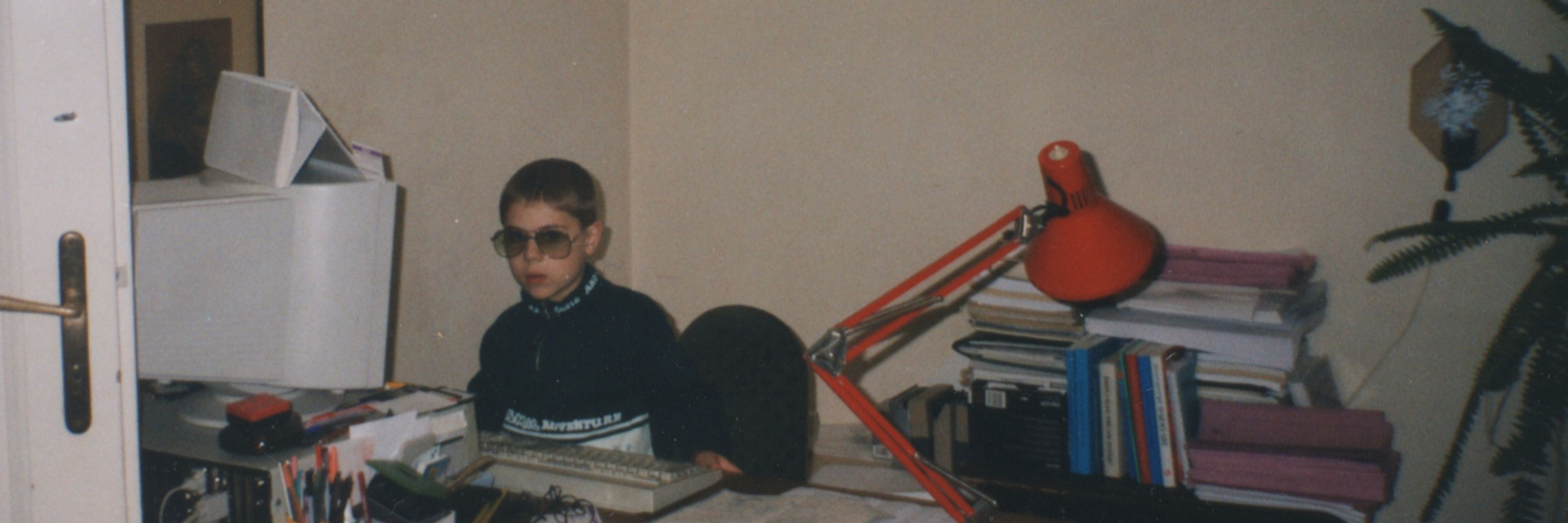

My neural networks series is dedicated to explaining exactly what's going on in the picture above.

Here's everything you need to know: thepalindrome.org/p/introduct...

Join 35,000+ curious readers here: thepalindrome.org/

No. It's just different. In certain situations, frequentist estimates are perfectly adequate. In others, Bayesian methods have the advantage. Use the right tool for the task, and don't worry about the rest.

(The symbol ∝ is read as “proportional to”. We write it instead of an equals sign because we omitted the denominator.)
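
For reference, here is Bayes' theorem in its parameter-estimation form. The notation is our own choice: θ stands for the model parameter (for example, the coin's probability of heads) and x for the observation. The evidence p(x) does not depend on θ, so dropping it leaves a proportionality:

```latex
p(\theta \mid x) = \frac{p(x \mid \theta)\, p(\theta)}{p(x)} \;\propto\; p(x \mid \theta)\, p(\theta)
```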

• the likelihood describes the probability of the observation given the model parameter,

• the prior describes our assumptions about the parameter before the observation,

• and the evidence is the total probability of our observation.
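
The Python sketch below makes the three terms concrete on a grid. It assumes a hypothetical experiment of 7 heads and 3 tails. It also discretizes the coin's heads-probability p into evenly spaced candidate values and uses a uniform prior. The sum of likelihood × prior over the grid then stands in for the evidence. The function name `grid_posterior` and the data are illustrations, not taken from the thread.

```python
def grid_posterior(heads: int, tails: int, n_points: int = 101):
    """Approximate the posterior of a coin's heads-probability p on a grid.

    Returns the grid and the posterior weights (which sum to 1).
    """
    # Candidate values of the parameter p, evenly spaced on [0, 1].
    grid = [i / (n_points - 1) for i in range(n_points)]

    # Prior: uniform, so every candidate value is equally plausible.
    prior = [1.0] * n_points

    # Likelihood of the observed tosses for each candidate p.
    # The binomial coefficient does not depend on p, so it is omitted.
    likelihood = [p**heads * (1 - p) ** tails for p in grid]

    # Likelihood times prior, divided by their total, which plays the
    # role of the evidence on this grid.
    unnormalized = [lik * pri for lik, pri in zip(likelihood, prior)]
    evidence = sum(unnormalized)
    posterior = [u / evidence for u in unnormalized]
    return grid, posterior


grid, posterior = grid_posterior(heads=7, tails=3)
best = max(range(len(grid)), key=lambda i: posterior[i])
print(f"most probable p on the grid: {grid[best]:.2f}")  # 0.70
```

Because the prior is uniform, the most probable grid value coincides with the observed relative frequency, 7/10.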

There are three terms on the right side: the likelihood, the prior, and the evidence.

This is called posterior estimation.

For instance, if we know absolutely nothing about our coin, we assume the prior to be uniform.
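
A uniform prior on the heads-probability has a well-known closed-form consequence. After observing h heads and t tails, the posterior is the Beta(h + 1, t + 1) distribution, whose mean is (h + 1)/(h + t + 2). The Python sketch below evaluates that density directly and checks numerically that it integrates to 1. The function names and the 7-heads/3-tails data are hypothetical illustrations.

```python
from math import comb


def uniform_prior_posterior_pdf(p: float, heads: int, tails: int) -> float:
    """Posterior density of p under a uniform prior: Beta(heads + 1, tails + 1)."""
    n = heads + tails
    # 1 / B(heads + 1, tails + 1) simplifies to (n + 1) * C(n, heads).
    return (n + 1) * comb(n, heads) * p**heads * (1 - p) ** tails


def uniform_prior_posterior_mean(heads: int, tails: int) -> float:
    """Mean of Beta(heads + 1, tails + 1), also known as Laplace's rule of succession."""
    return (heads + 1) / (heads + tails + 2)


# Midpoint-rule sanity check: the posterior density should integrate to 1.
steps = 10_000
area = sum(uniform_prior_posterior_pdf((i + 0.5) / steps, 7, 3) for i in range(steps)) / steps
print(round(area, 6))                      # 1.0
print(uniform_prior_posterior_mean(7, 3))  # 0.6666666666666666
```

The posterior mean 8/12 sits slightly closer to 1/2 than the observed frequency 7/10. That is the uniform prior pulling the estimate toward the middle.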

Yes, I know. The probability of probability. It’s kind of an Inception moment, but you’ll get used to it.

Is the coin biased, or were we just lucky? How can we tell?
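
One way to put a number on "just lucky" is to ask how often a fair coin would produce a result at least this extreme. The Python sketch below computes the probability of getting at least k heads in n independent tosses, straight from the binomial distribution. The 7-out-of-10 figures are a hypothetical example, not data from the thread.

```python
from math import comb


def prob_at_least_k_heads(k: int, n: int, p: float = 0.5) -> float:
    """P(at least k heads in n independent tosses) for a coin with heads-probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# How surprising are 7 or more heads out of 10 from a fair coin?
print(prob_at_least_k_heads(7, 10))  # 0.171875, i.e. 176 / 1024
```

A fair coin does at least this well about 17% of the time, so 7 heads out of 10 is hardly conclusive on its own.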

Again, this is a mathematically provable fact, not an interpretation.

This is expressed in terms of conditional probabilities.
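
For reference, here is the standard definition of conditional probability and how Bayes' theorem follows from it. It holds for events A and B with P(B) > 0 (and P(A) > 0 for the middle step):

```latex
P(A \mid B) = \frac{P(A \cap B)}{P(B)}, \qquad
P(A \cap B) = P(B \mid A)\, P(A)
\quad\Longrightarrow\quad
P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}
```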

This is way too abstract, so let's elaborate.

This is not an interpretation of probability. This is a mathematically provable fact, independent of interpretations. (A special case of the famous Law of Large Numbers.)

The ratio of the number of heads to the number of tosses is called “the relative frequency of heads”.
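
The Law of Large Numbers can be watched in action with a short simulation. This Python sketch assumes a coin with a known heads-probability (0.5 by default). The function name and the seed are arbitrary illustrative choices. The sketch prints the relative frequency of heads for increasing numbers of tosses, and as the number of tosses grows, the relative frequency tends to settle near the true probability.

```python
import random


def relative_frequency_of_heads(n_tosses: int, p_heads: float = 0.5, seed: int = 0) -> float:
    """Simulate n_tosses coin flips and return (number of heads) / (number of tosses)."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    heads = sum(1 for _ in range(n_tosses) if rng.random() < p_heads)
    return heads / n_tosses


for n in (10, 100, 1_000, 10_000, 100_000):
    print(f"{n:>7} tosses: relative frequency of heads = {relative_frequency_of_heads(n):.4f}")
```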

How can we assign probabilities? There are (at least) two schools of thought, constantly in conflict with each other.

Let's start with the frequentist school.

Probability is a well-defined mathematical object. This concept is separate from the question of how probabilities are assigned.

If we throw the dart randomly, the probability of hitting a certain shape is proportional to the shape's area.
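
Here is a quick Monte Carlo sketch of the dart picture in Python. It assumes the board is the unit square and the target shape is a disk of radius 0.5 centered in it. Darts land uniformly at random. Because the square has area 1, the fraction of darts landing in the disk should approach the disk's area, π/4 ≈ 0.785. The board, the shape, and the function name are illustrative assumptions.

```python
import math
import random


def fraction_inside_disk(n_darts: int, radius: float = 0.5, seed: int = 0) -> float:
    """Throw darts uniformly at the unit square; return the fraction landing in a centered disk."""
    rng = random.Random(seed)
    cx = cy = 0.5  # disk centered in the square; it fits inside for radius <= 0.5
    hits = 0
    for _ in range(n_darts):
        x, y = rng.random(), rng.random()
        if (x - cx) ** 2 + (y - cy) ** 2 <= radius**2:
            hits += 1
    return hits / n_darts


estimate = fraction_inside_disk(200_000)
print(f"fraction of hits: {estimate:.4f}, disk area: {math.pi / 4:.4f}")
```

Shrinking the radius to 0.25 cuts the area, and with it the hit rate, by a factor of four. That is the proportionality the sentence describes.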
