tl;dr: you can now chat with a brain scan 🧠💬

1/n

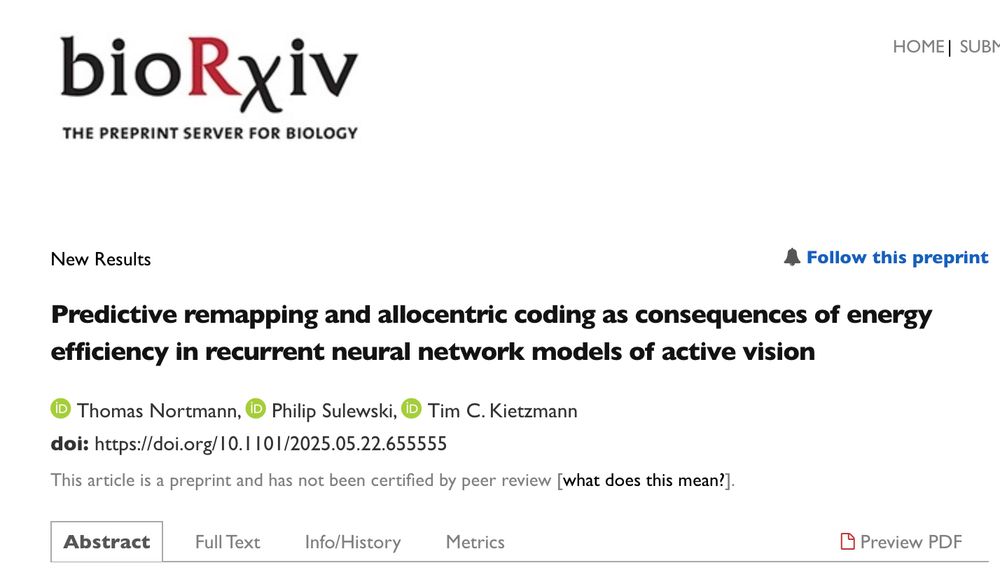

It learns predictive remapping and path integration into allocentric scene coordinates.

Now out in Patterns: www.cell.com/patterns/ful...

We asked ourselves whether complex neural dynamics like predictive remapping and allocentric coding can emerge from simple physical principles, in this case energy efficiency. Turns out they can!

More information in the 🧵 below.

I am super excited to see this one out in the wild.

The outcome: a self-supervised training objective based on active vision that beats the state of the art (SOTA) on representational alignment with the Natural Scenes Dataset (NSD). 👇

How can we model natural scene representations in visual cortex? One solution lies in active vision: predict the features of the next glimpse! arxiv.org/abs/2511.12715

+ @adriendoerig.bsky.social, @alexanderkroner.bsky.social, @carmenamme.bsky.social, @timkietzmann.bsky.social

🧵 1/14

#neuroskyence

www.thetransmitter.org/the-big-pict...

Reach out if you are interested in any of the above, I'll be at CCN next week!

nouvelles.umontreal.ca/en/article/2...

Check it out (and come chat with us about it at CCN).

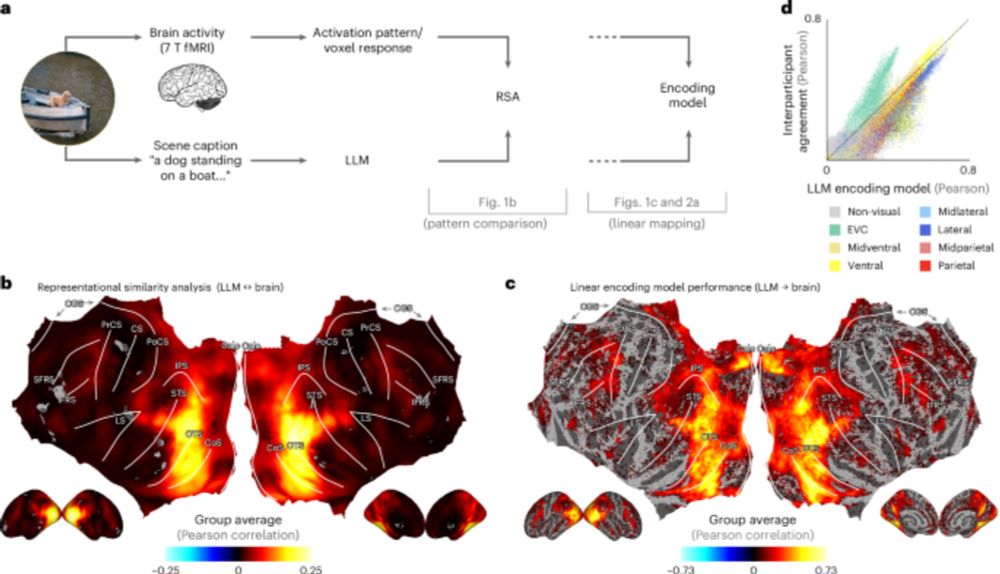

"Visual representations in the human brain are aligned with large language models"

🔗 www.nature.com/articles/s42...

Work with @zejinlu.bsky.social @sushrutthorat.bsky.social and Radek Cichy

arxiv.org/abs/2507.03168

Let us take you for a spin. 1/6 www.biorxiv.org/content/10.1...

@tyrellturing.bsky.social's Deep learning framework

www.nature.com/articles/s41...

@tonyzador.bsky.social's Next-gen AI through neuroAI

www.nature.com/articles/s41...

@adriendoerig.bsky.social's Neuroconnectionist framework

www.nature.com/articles/s41...

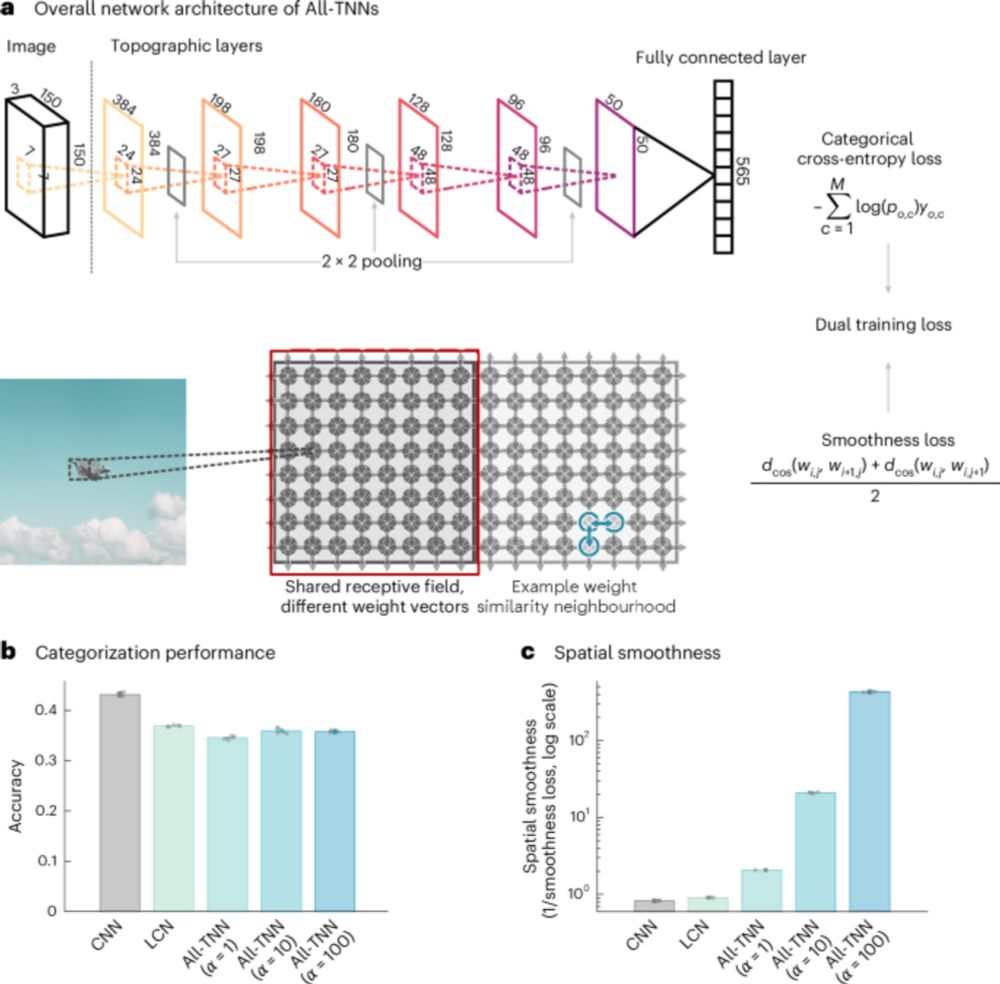

Our latest study, led by @DrewLinsley, examines how deep neural networks (DNNs) optimized for image categorization align with primate vision, using neural and behavioral benchmarks.

In addition, the latents of a model trained only on neural activity capture information about brain regions and cell types.

Step by step, we're gonna scale up, folks!

🧠📈 🧪 #NeuroAI

Excited to share our #ICLR2025 Spotlight paper introducing POYO+ 🧠

poyo-plus.github.io

🧵

I'll post a summary of each of our projects closer to the conference.

Looking forward to seeing you all in Amsterdam!

Here we administered 51 tests from 6 clinical and experimental batteries to assess vision in commercial AI models.

Very proud to share this first work from @genetang.bsky.social's PhD!

arxiv.org/abs/2504.10786

Our new paper explores this: elifesciences.org/reviewed-pre...