Tim Kellogg

@timkellogg.me

AI Architect | North Carolina | AI/ML, IoT, science

WARNING: I talk about kids sometimes

sent this to my brother asking, “does this count as wealth redistribution?”

(fun fact: my bro voted for Trump and is also undergoing collapse of the company he’s CEO of due to tariffs)

November 9, 2025 at 9:36 PM

The town of German, NY elected 2 positions on write-in ballots alone

1. Superintendent of Highways

2. Town Justice

apparently no one ran

November 9, 2025 at 9:06 PM

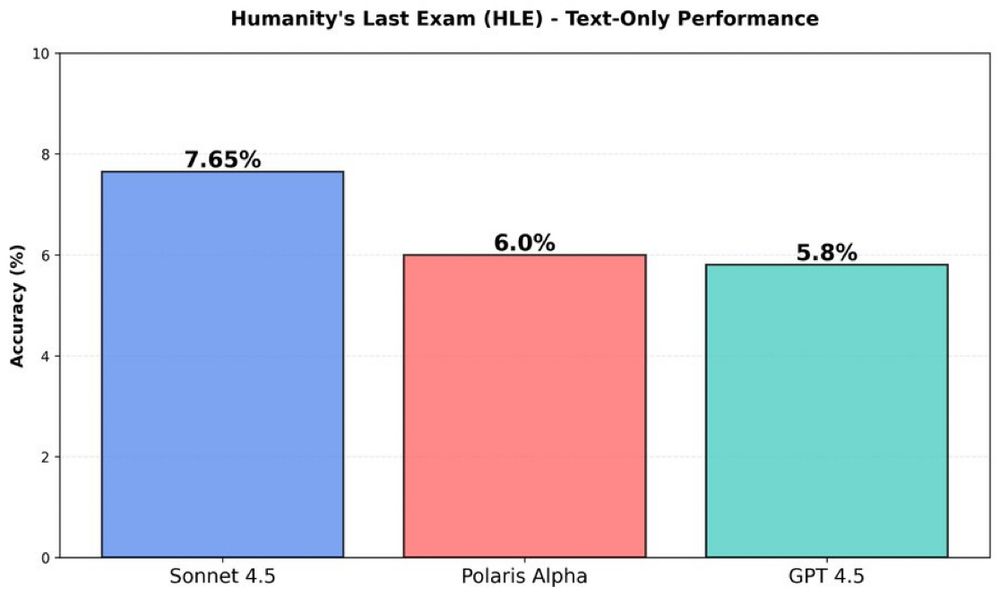

Polaris Alpha, believed to be GPT-5.1 non-reasoning, scores just below Sonnet 4.5 on HLE (unofficial run)

There will be a reasoning version too, and OpenAI excels at RL & post training, so I have high expectations for it

also leaked: Nov 24 release date

November 9, 2025 at 2:18 PM

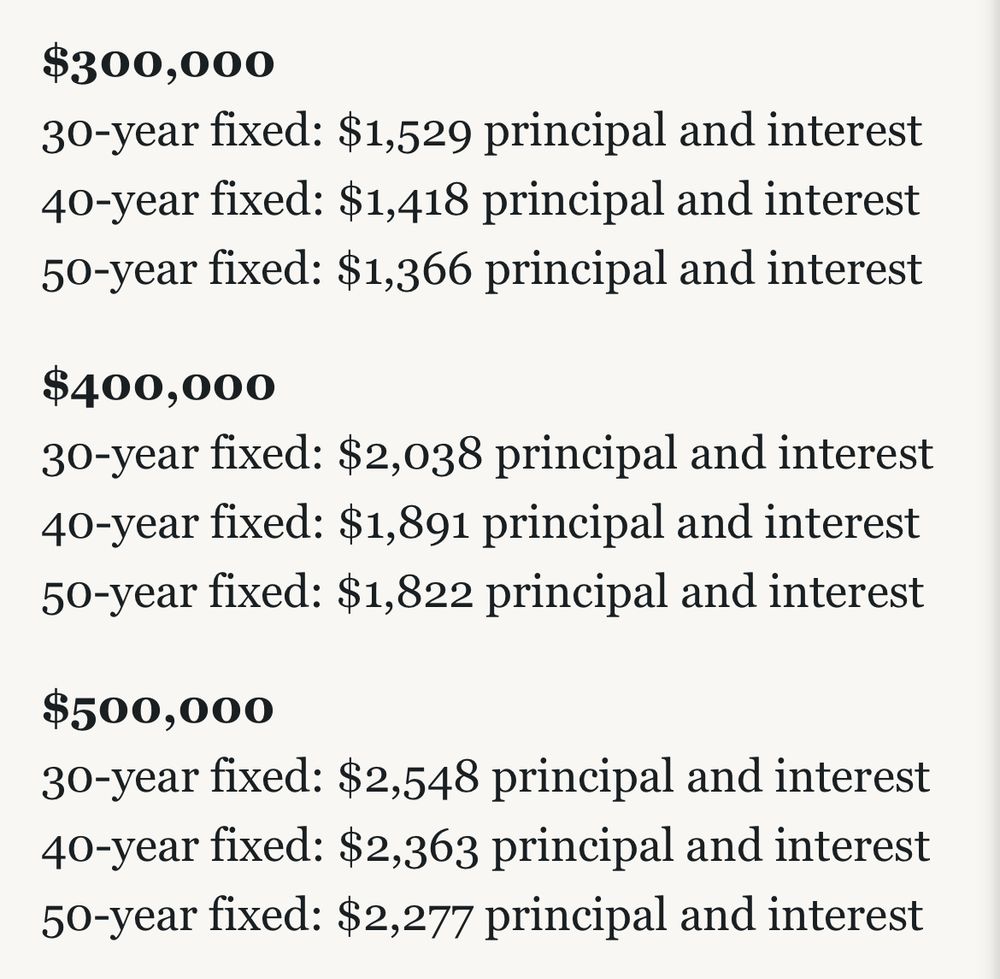

idk is a 50 year mortgage even worth it?
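A rough way to put numbers on the question is the standard fixed-rate amortization formula. This is only a sketch: the principal and interest rate below are hypothetical, and it assumes the 50-year loan gets the same rate as the 30-year one, which a real lender may not offer.

```python
def monthly_payment(principal: float, annual_rate: float, years: int) -> float:
    """Fixed monthly payment for a fully amortizing loan."""
    n = years * 12            # number of monthly payments
    r = annual_rate / 12      # monthly interest rate
    if r == 0:
        return principal / n
    return principal * r / (1 - (1 + r) ** -n)


def total_interest(principal: float, annual_rate: float, years: int) -> float:
    """Interest paid over the full term if every payment is made on schedule."""
    return monthly_payment(principal, annual_rate, years) * years * 12 - principal


if __name__ == "__main__":
    # Hypothetical loan: the amount and rate are illustrative assumptions only.
    principal, rate = 400_000, 0.065
    for years in (30, 50):
        pay = monthly_payment(principal, rate, years)
        interest = total_interest(principal, rate, years)
        print(f"{years}y: payment {pay:,.0f}/mo, total interest {interest:,.0f}")
```

At the same rate, the longer term lowers the monthly payment but raises the total interest paid, so the answer depends on which of the two you care about.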

November 8, 2025 at 10:45 PM

GPT-5-codex-mini

Almost same performance as GPT-5-codex on high, but 4x faster and without pesky things like warm personality

www.neowin.net/amp/openai-i...

November 8, 2025 at 4:46 PM

“nah, we don’t do 996”

November 8, 2025 at 12:49 PM

this morning, X is saturated with people from the US claiming that their favorite unknown benchmark (that happens to show K2 trailing US models) is actually the best single benchmark to watch

lol notice how they clipped off the top 12

November 8, 2025 at 12:10 PM

K2-Thinking is available in the Kimi app now

November 7, 2025 at 7:29 PM

November 7, 2025 at 6:35 PM

GPT-5.1 is live on OpenRouter via stealth preview

November 7, 2025 at 4:15 PM

i haven’t figured out how to use it, but apparently Kimi K2-Thinking has a Heavy mode with 8 parallel trajectories that are reflectively aggregated

it does better than GPT-5-pro on HLE
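Conceptually, "parallel trajectories that are reflectively aggregated" could look like the sketch below. This is not Kimi's API or implementation: `heavy_mode`, `run_trajectory`, and `aggregate` are hypothetical names, and the stand-in functions only mimic model calls so the example runs on its own.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def heavy_mode(
    prompt: str,
    run_trajectory: Callable[[str, int], str],
    aggregate: Callable[[str, List[str]], str],
    n_trajectories: int = 8,
) -> str:
    """Run n independent attempts in parallel, then pass all drafts to an aggregator."""
    with ThreadPoolExecutor(max_workers=n_trajectories) as pool:
        # Each attempt gets its own seed so the trajectories can diverge.
        futures = [pool.submit(run_trajectory, prompt, seed) for seed in range(n_trajectories)]
        drafts = [f.result() for f in futures]
    # Aggregation step: one more pass that sees every draft and produces the final answer.
    return aggregate(prompt, drafts)


def fake_trajectory(prompt: str, seed: int) -> str:
    # Stand-in for a model call: seed 3 gets it wrong, the rest agree.
    return "41" if seed == 3 else "42"


def fake_aggregate(prompt: str, drafts: List[str]) -> str:
    # Stand-in for the reflection pass: here it simply keeps the most common draft.
    return max(set(drafts), key=drafts.count)


if __name__ == "__main__":
    print(heavy_mode("what is 6 * 7?", fake_trajectory, fake_aggregate))
```

In a real system the aggregator would presumably be another model call that reads and critiques the drafts, rather than a majority vote.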

November 7, 2025 at 4:04 PM

K2-Thinking is SOTA, top model in agentic tool calling

November 7, 2025 at 10:40 AM

this really highlights how LLMs do math

math is a string of many operations, so one small error (e.g. a misremembered shortcut) causes cascading calculation errors downstream
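A back-of-the-envelope model of the compounding: if each step is right with probability p, and steps fail independently, an n-step calculation is fully right with probability p^n. The independence assumption and the example accuracies are mine, chosen only for illustration.

```python
def chain_success_probability(per_step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain is correct, assuming independent steps."""
    if not 0.0 <= per_step_accuracy <= 1.0:
        raise ValueError("per_step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_accuracy ** steps


if __name__ == "__main__":
    # Hypothetical per-step accuracies, not measurements of any model.
    for p in (0.999, 0.99, 0.95):
        for n in (10, 100):
            print(f"p={p}, steps={n}: {chain_success_probability(p, n):.3f}")
```

Even at 99% per step, a 100-step chain comes out fully correct less than half the time under this model.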

November 7, 2025 at 1:02 AM

Surprising: Math requires a lot of memorization

Goodfire is at it again!

They developed a method similar to PCA that measures how much of an LLM’s weights are dedicated to memorization

www.goodfire.ai/research/und...

November 7, 2025 at 1:02 AM

notable: they ripped out the silicon that supports training

they say: “it’s the age of inference”

which, yeah, RL is mostly inference. Continual learning is almost all inference. Then there are ambient agents and fast-growing inference demand from general audiences

kartik343.wixstudio.com/blogorithm/p...

November 7, 2025 at 12:43 AM

![A dark-themed bar chart titled “Agentic Reasoning.”

It compares model performance on “Humanity’s Last Exam (Text-only) w/ tools [3.b]”, described as “Expert-level questions across subjects.”

Three vertical bars are shown:

• Left (bright blue, K icon): 44.9

• Middle (light blue, knot logo): 41.7

• Right (tan, AI logo): 32.0

The chart visually emphasizes that the first model (K icon) leads slightly over the middle model, with both outperforming the third. The y-axis is unlabeled, but values likely represent performance scores or percentages.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:ckaz32jwl6t2cno6fmuw2nhn/bafkreigbmc5sapicc3zgca3jpvmcgiuzfxdornumceuwbnspuknezigiru@jpeg)

November 6, 2025 at 6:00 PM

OpenAI has been getting ready to release GPT-5.1 (this is from their iOS code)

pretty sure i’ve A/B tested it, and it was a big step up, at least for the search-type queries i typically do

November 6, 2025 at 1:32 PM

lol this part was cute

like, you realize we have space probes still functioning beyond pluto, right? there are answers for this stuff..

November 5, 2025 at 2:11 AM

Windsurf Codemaps

actually this makes a ton of sense — if vibe coding only works on small/non-complex projects, then the answer is to tackle complexity directly

Codemaps uses LLMs to create an “index” over your code, a map of where things are

cognition.ai/blog/codemaps

November 5, 2025 at 1:47 AM

how did you come to those numbers? these are theirs

November 5, 2025 at 12:53 AM

Starcloud: GPUs in space

This company finally launched their first H100 into high Earth orbit. A solar array for power, uninterrupted by weather or nighttime, and a black plate in the back to radiate heat away into -270°C space

starcloudinc.github.io/wp.pdf
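For a sense of scale on the radiator, here is a minimal sketch using the Stefan-Boltzmann law. Every number in it is an assumption for illustration, not a figure from the Starcloud paper: about 700 W of heat for one H100, a 300 K radiator surface, emissivity 0.9, a plate radiating from one side only, and no incoming sunlight or Earth infrared.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def radiator_area_m2(
    heat_watts: float,
    radiator_temp_k: float,
    emissivity: float = 0.9,    # assumed surface emissivity
    sink_temp_k: float = 3.15,  # about -270 C, the background temperature from the post
) -> float:
    """One-sided radiator area needed to reject heat_watts purely by radiation."""
    net_flux = emissivity * STEFAN_BOLTZMANN * (radiator_temp_k**4 - sink_temp_k**4)
    if net_flux <= 0:
        raise ValueError("radiator must be warmer than the sink")
    return heat_watts / net_flux


if __name__ == "__main__":
    # Assumed inputs: ~700 W of heat, radiator surface at 300 K.
    print(f"{radiator_area_m2(700, 300):.2f} m^2")
```

Because radiated power scales with the fourth power of temperature, running the plate hotter shrinks the required area quickly, while the cold background itself barely changes the result.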

November 5, 2025 at 12:34 AM
