The benefits of merging, both the accuracy boost and the stability of the "sweet spot", become even more pronounced in larger, more capable models. This echoes prior work showing that merging bigger models is more effective and more stable. (6/8)

We find a "sweet spot" merge that is Pareto-superior: it has HIGHER accuracy than both parents while substantially restoring the calibration lost during alignment. (4/8)
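
"Pareto-superior" can be made concrete by summarising each checkpoint as an (accuracy, calibration error) pair: one point dominates another if it is no worse on either axis and strictly better on at least one. The sketch below assumes expected calibration error (ECE) as the calibration axis; `Point`, `dominates` and `pareto_front` are hypothetical helpers, and the numbers in the usage lines are toy values, not results from the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Point:
    lam: float       # interpolation coefficient: 0 = base, 1 = instruct
    accuracy: float  # higher is better
    ece: float       # calibration error (assumed ECE), lower is better


def dominates(a: Point, b: Point) -> bool:
    """True if a is no worse than b on both axes and strictly better on one."""
    no_worse = a.accuracy >= b.accuracy and a.ece <= b.ece
    strictly_better = a.accuracy > b.accuracy or a.ece < b.ece
    return no_worse and strictly_better


def pareto_front(points: List[Point]) -> List[Point]:
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    # Toy values for illustration only, not results from the paper.
    base = Point(lam=0.0, accuracy=0.60, ece=0.05)
    merge = Point(lam=0.6, accuracy=0.72, ece=0.08)
    instruct = Point(lam=1.0, accuracy=0.70, ece=0.20)
    print(dominates(merge, instruct))  # True
    print(dominates(merge, base))      # False
    print([p.lam for p in pareto_front([base, merge, instruct])])  # [0.0, 0.6]
```

In this toy case the merge beats both parents on accuracy and strictly dominates the instruct parent, while trading a little calibration against the base model, so both the base model and the merge remain on the front.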

By interpolating between the well-calibrated base model and its capable but overconfident instruct counterpart, we create a continuous spectrum to navigate this trade-off. No retraining needed.

(3/8)
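
The thread does not spell out the exact merge recipe; a minimal sketch, assuming plain linear interpolation of matching parameters with a single coefficient λ, θ(λ) = (1 − λ)·θ_base + λ·θ_instruct. Here λ = 0 returns the base model and λ = 1 the instruct model, and sweeping λ traces the spectrum without any retraining. Checkpoints are modelled as hypothetical dicts of flat float lists so the example runs without a deep-learning framework; with real checkpoints the same rule would be applied tensor by tensor.

```python
from typing import Dict, List

# Hypothetical checkpoint format: parameter name -> flattened weights.
Params = Dict[str, List[float]]


def interpolate(base: Params, instruct: Params, lam: float) -> Params:
    """Linearly interpolate two checkpoints that share one architecture.

    lam = 0.0 gives the base weights, lam = 1.0 gives the instruct weights.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be in [0, 1]")
    if base.keys() != instruct.keys():
        raise ValueError("checkpoints must have the same parameter names")
    merged: Params = {}
    for name, w_base in base.items():
        w_inst = instruct[name]
        if len(w_base) != len(w_inst):
            raise ValueError(f"shape mismatch for {name}")
        # Same convex combination for every scalar in the tensor.
        merged[name] = [(1.0 - lam) * b + lam * i for b, i in zip(w_base, w_inst)]
    return merged


if __name__ == "__main__":
    base = {"layer.weight": [0.0, 2.0]}
    instruct = {"layer.weight": [1.0, 4.0]}
    print(interpolate(base, instruct, 0.5))  # {'layer.weight': [0.5, 3.0]}
    # A sweep over eleven evenly spaced merges between the two parents.
    sweep = {k / 10: interpolate(base, instruct, k / 10) for k in range(11)}
```

Each point of such a sweep can then be evaluated for accuracy and calibration to locate a "sweet spot".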

Instruction tuning doesn't just nudge performance; it wrecks calibration, causing a huge spike in overconfidence. (2/8)
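
The thread does not name its calibration metric here; expected calibration error (ECE) is one common choice, so the sketch below assumes it. ECE groups predictions into confidence bins and averages the gap between confidence and accuracy in each bin, weighted by bin size, so a model that says "90%" but is right half the time scores badly. The function name and the 10-bin default are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def expected_calibration_error(
    confidences: Sequence[float], correct: Sequence[bool], n_bins: int = 10
) -> float:
    """ECE with equal-width confidence bins [k/n_bins, (k+1)/n_bins)."""
    if not confidences or len(confidences) != len(correct):
        raise ValueError("need equally long, non-empty inputs")
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        if not 0.0 <= conf <= 1.0:
            raise ValueError("confidences must lie in [0, 1]")
        # Confidence 1.0 would index past the end, so clamp it into the last bin.
        bins[min(int(conf * n_bins), n_bins - 1)].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        # Weight each bin's |accuracy - confidence| gap by its share of samples.
        ece += len(bucket) / total * abs(accuracy - avg_conf)
    return ece


if __name__ == "__main__":
    # Says 90% but is right half the time: a gap of about 0.4 (overconfident).
    print(expected_calibration_error([0.9] * 4, [True, True, False, False]))
    # Says 50% and is right half the time: perfectly calibrated.
    print(expected_calibration_error([0.5, 0.5], [True, False]))  # 0.0
```

Binning conventions vary (some implementations use right-closed bins), which can shift ECE slightly, so comparisons are only meaningful under one fixed convention.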

You face a choice: a well-calibrated base model or a capable but unreliable instruct model.

What if you didn't have to choose? What if you could navigate the trade-off?

(1/8)

Paper: arxiv.org/abs/2510.17516

Data: huggingface.co/datasets/pit...

Website: simbench.tiancheng.hu (9/9)

Instruction-tuning (the process that makes LLMs helpful and safe) improves their ability to predict consensus opinions.

BUT, it actively harms their ability to predict diverse, pluralistic opinions where humans disagree. (5/9)

Across the model families we could test, bigger models are consistently better simulators. Performance reliably increases with model size. This suggests that future, larger models hold the potential to become highly accurate simulators. (4/9)

The promise of LLM-based social simulation is revolutionary for science & policy. But there’s a huge "IF": Do these simulations actually reflect reality?

To find out, we introduce SimBench: The first large-scale benchmark for group-level social simulation. (1/9)

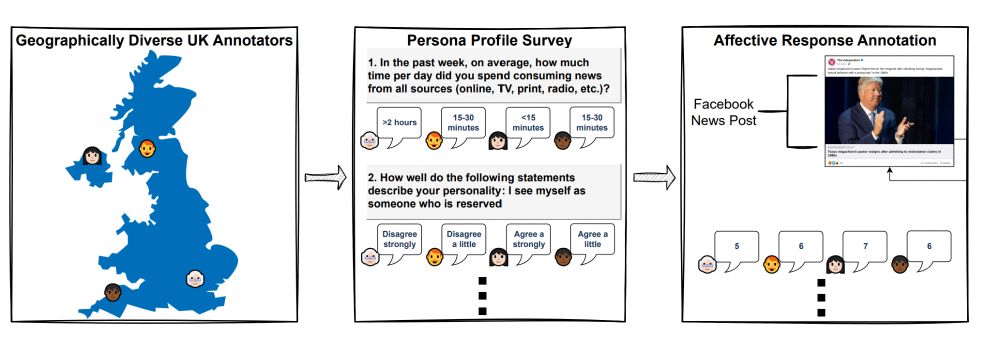

Excited to announce our iNews paper is accepted to #ACL2025! 🥳 It's a large-scale dataset for predicting individualized affective responses to real-world, multimodal news.

Paper: arxiv.org/abs/2503.03335

Data: huggingface.co/datasets/pit...

• "Early ascent phenomenon": performance dips with only a few examples, then improves.

• Persona info consistently helps, even at 32-shot (reaching 44.4% accuracy).

• Image few-shot prompting scales worse than text, despite its zero-shot advantage. (7/8)

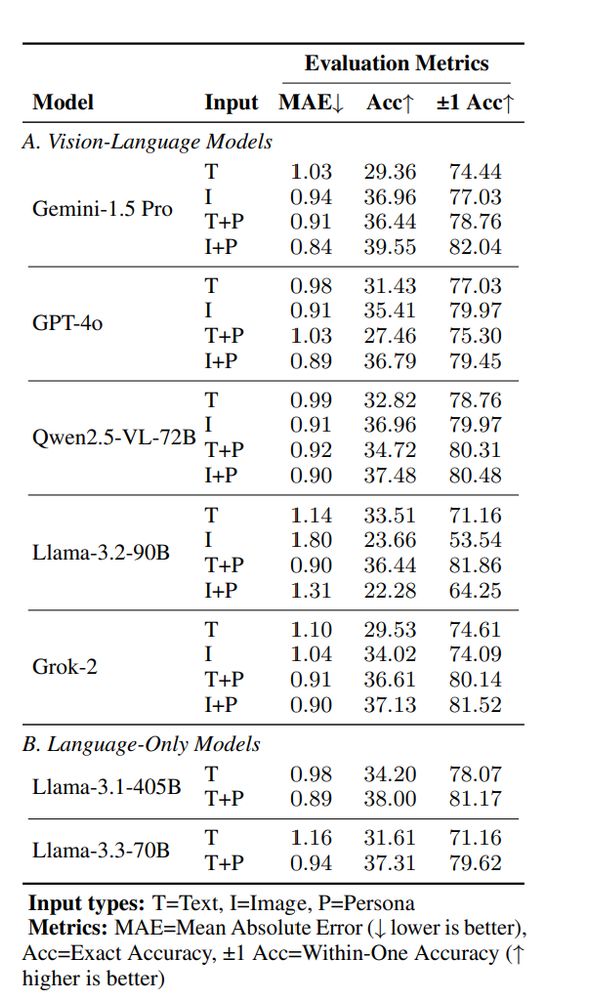

• Persona info boosts accuracy across models (up to 7% gain!).

• Image inputs generally outperform text inputs in zero-shot.

• Gemini 1.5 Pro + image + persona = best zero-shot performance (still only 40% accuracy though). (6/8)

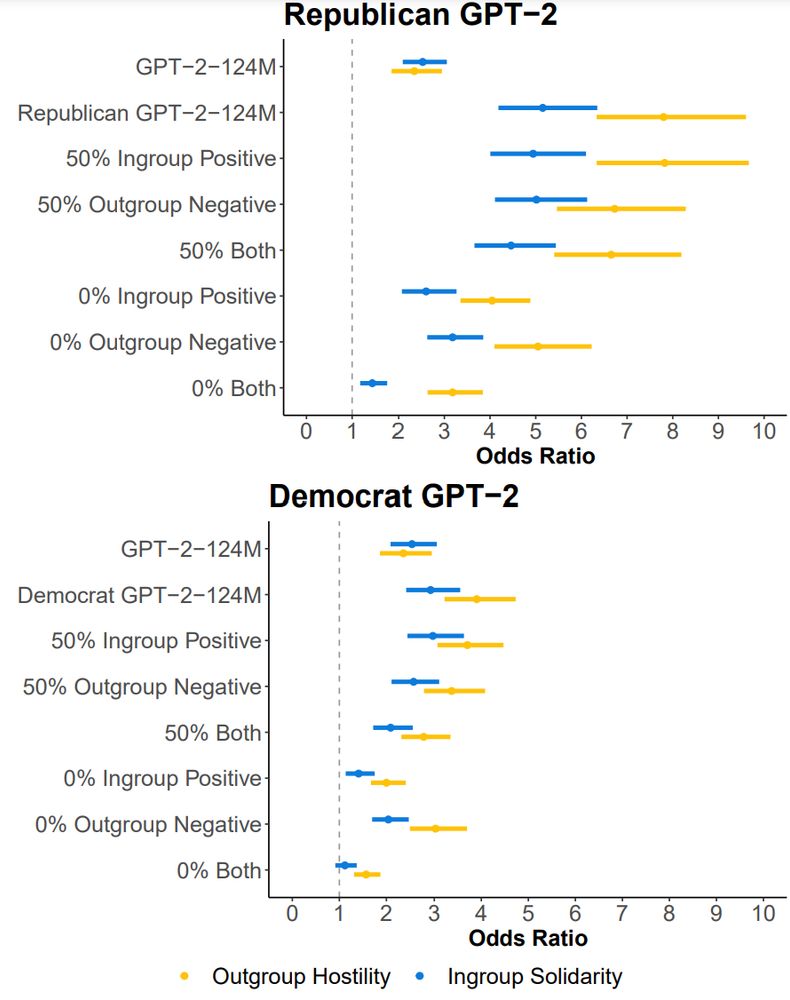

Is there a scaling law for simulation based on how detailed the persona is?

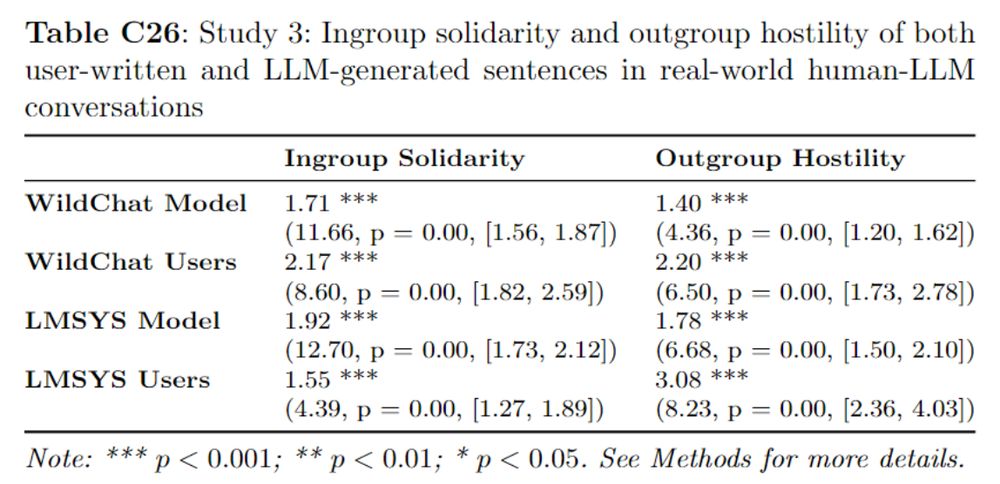

@profsanderlinden.bsky.social @steverathje.bsky.social

📄 arxiv.org/abs/2310.15819
