PhD student @ Vision Lab Caltech

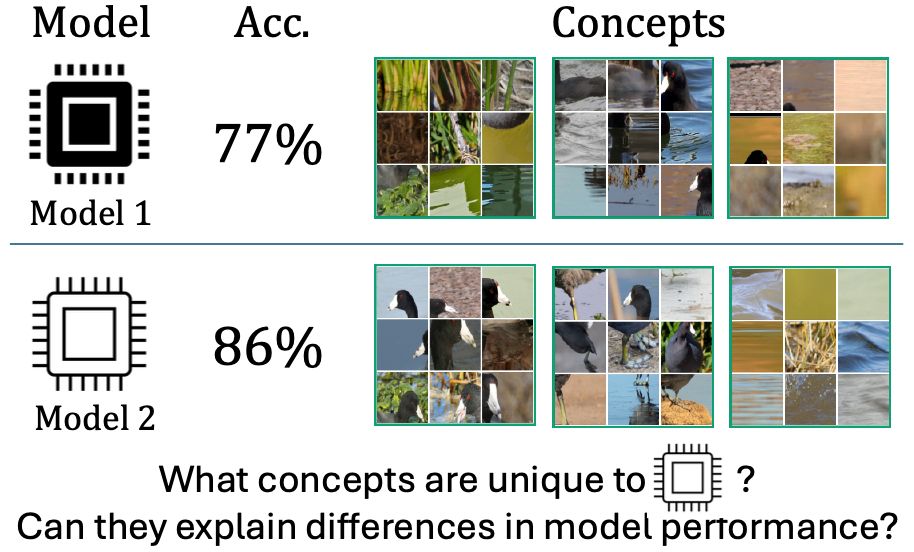

We all know that ViT-Large performs better than ResNet-50, but what visual concepts drive this difference? Our new ICLR 2025 paper addresses this question! nkondapa.github.io/rsvc-page/
