(*avg orientation, aspect-ratio, etc, may still vary. ask me about this!)

In @currentbiology.bsky.social, @chazfirestone.bsky.social & I show how these images—known as “visual anagrams”—can help solve a longstanding problem in cognitive science. bit.ly/45BVnCZ

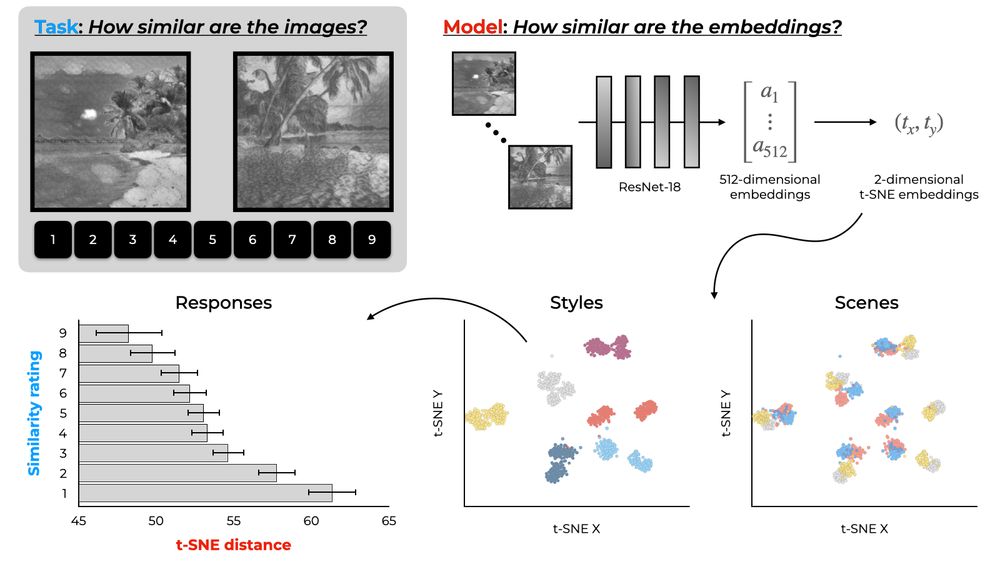

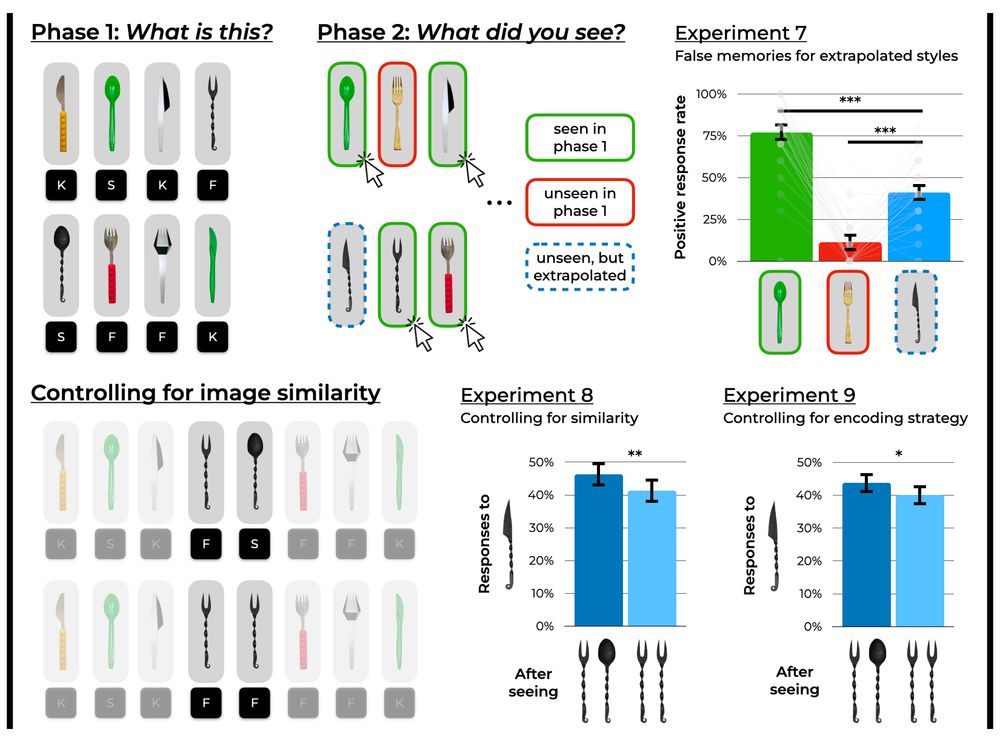

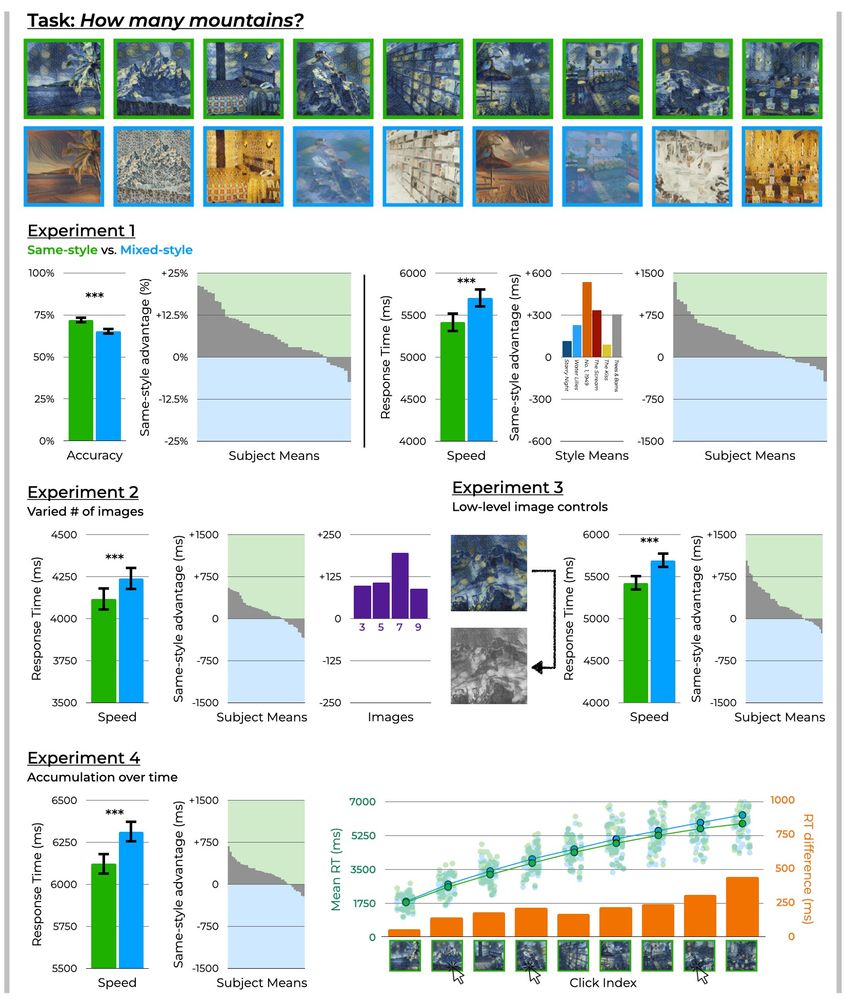

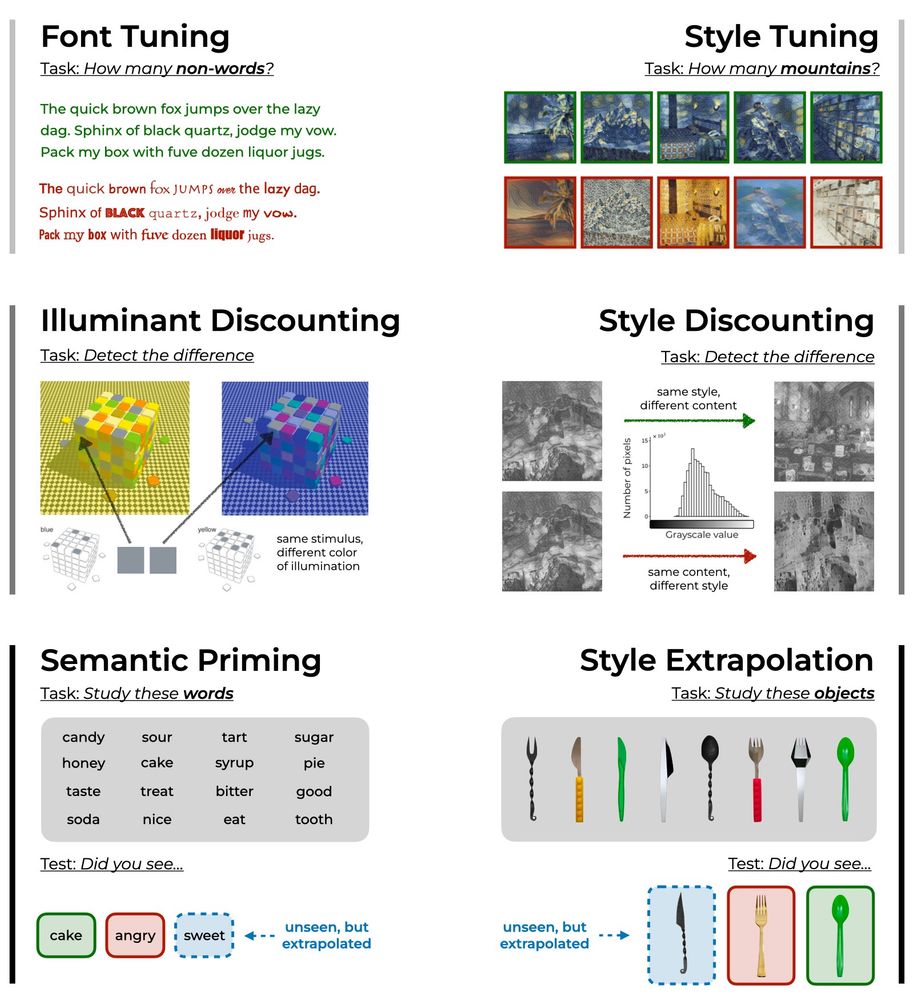

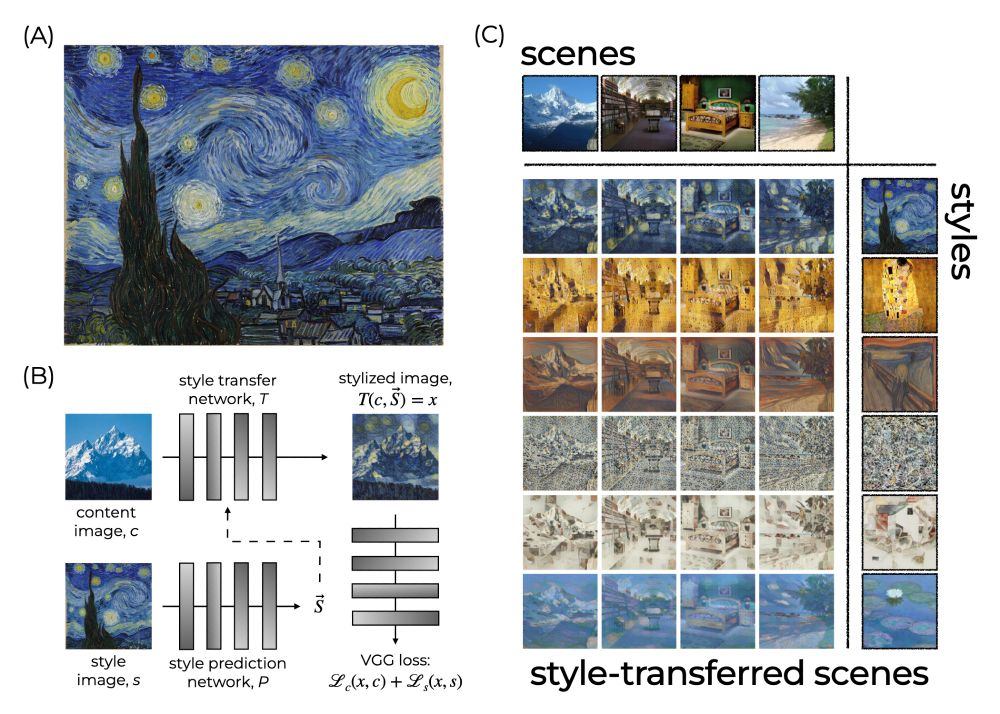

Using style transfer algorithms, we generated stimuli in various styles to use in psychophysics studies.

In @nathumbehav.nature.com, @chazfirestone.bsky.social & I take an experimental approach to style perception! osf.io/preprints/ps...

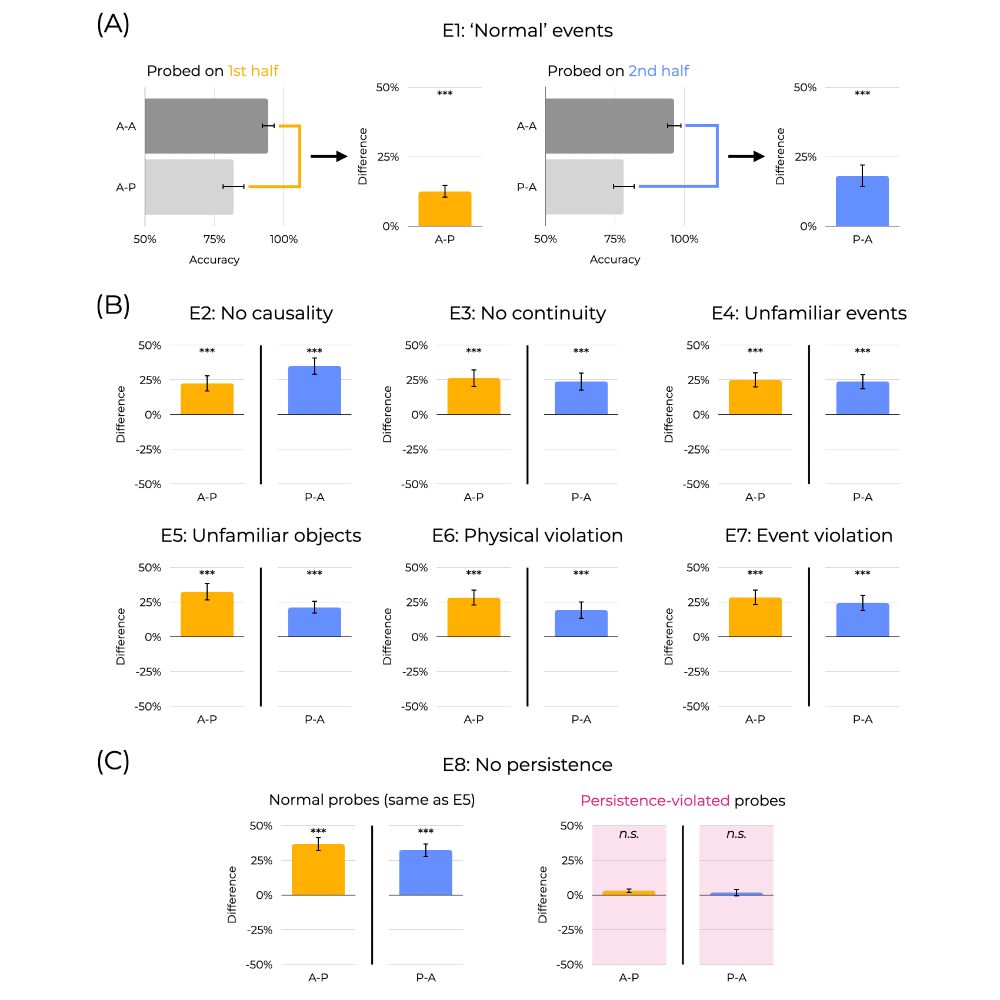

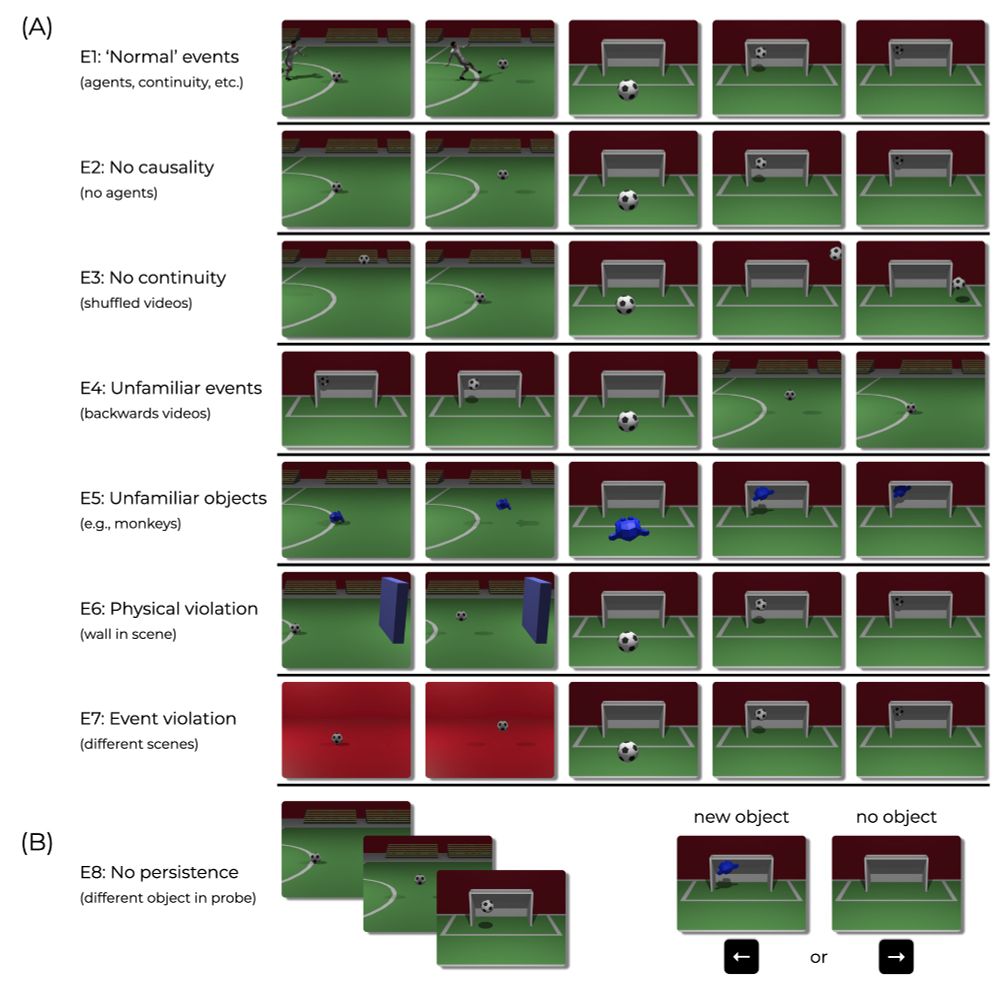

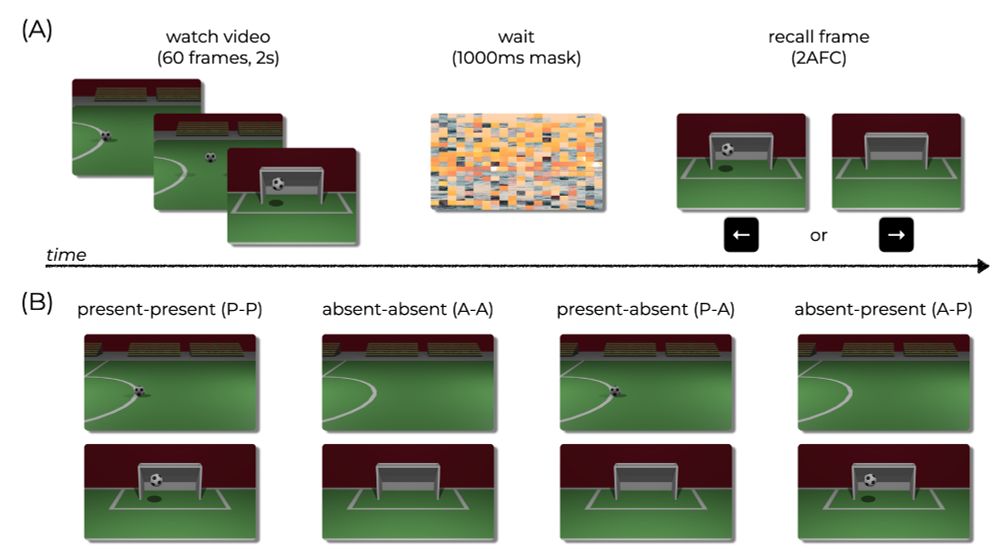

Do you remember seeing a ball in the second half of the video? Up to 37% of our participants reported seeing a ball, even though it wasn’t there. Why?

In a new paper in press @ Cognition, Brent Strickland and I ask what causes event completion. osf.io/preprints/ps...
