An abundance of thanks to all my mentors and friends who helped make this possible!!

We’re truly delighted to welcome @yejinchoinka.bsky.social as a new @stanfordnlp.bsky.social faculty member, starting full-time in September. ❤️

nlp.stanford.edu/people/

To limit-test this, I made a "Realtime Voice" MCP using free STT, VAD, and TTS systems. The result is janky, but it makes me excited about the ecosystem to come!

@chrmanning.bsky.social @shikharmurty.bsky.social

Our new @stanfordnlp.bsky.social paper introduces CTC-DRO, a training method that reduces worst-language errors by up to 47.1%.

Work w/ Ananjan, Moussa, @jurafsky.bsky.social, Tatsu Hashimoto and Karen Livescu.

Here’s how it works 🧵

Preprint: arxiv.org/abs/2405.05966

Video: youtu.be/5Aer7MUSuSU

With EgoNormia, a 1.8k ego-centric video 🥽 QA benchmark, we show that this is surprisingly challenging!

But humans are naturally quite good at this (>90% acc.)

Check it out!

➡️ arxiv.org/abs/2502.20490

🔮 Nevertheless, the talk was quite prophetic!

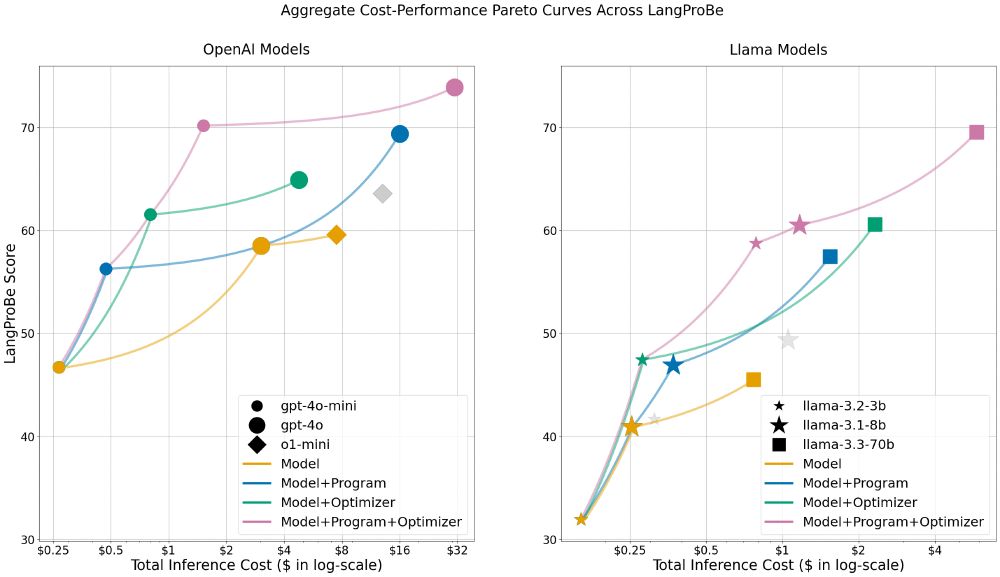

We find that, on avg across diverse tasks, smaller models within optimized programs beat calls to larger models at a fraction of the cost.

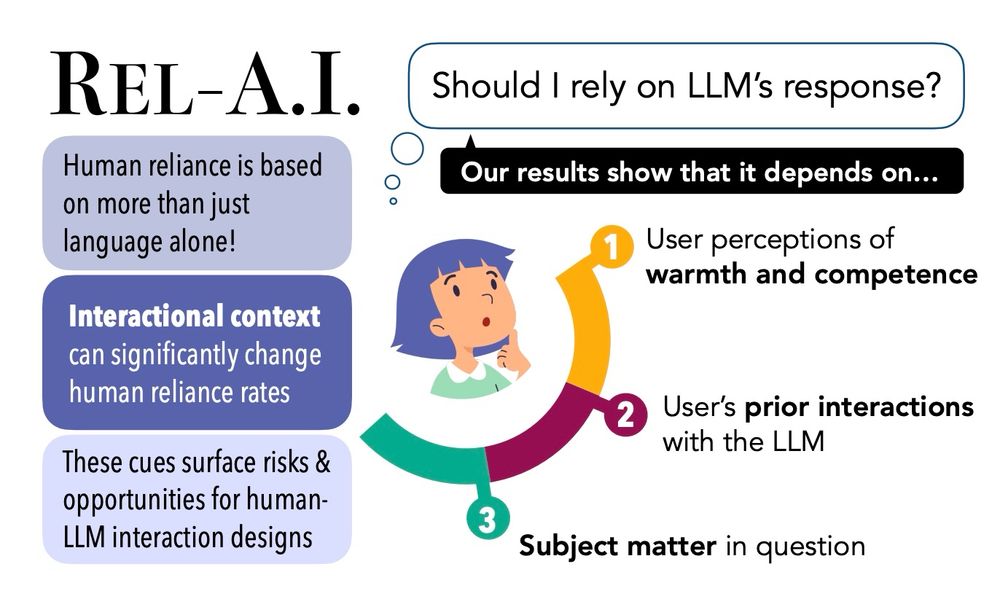

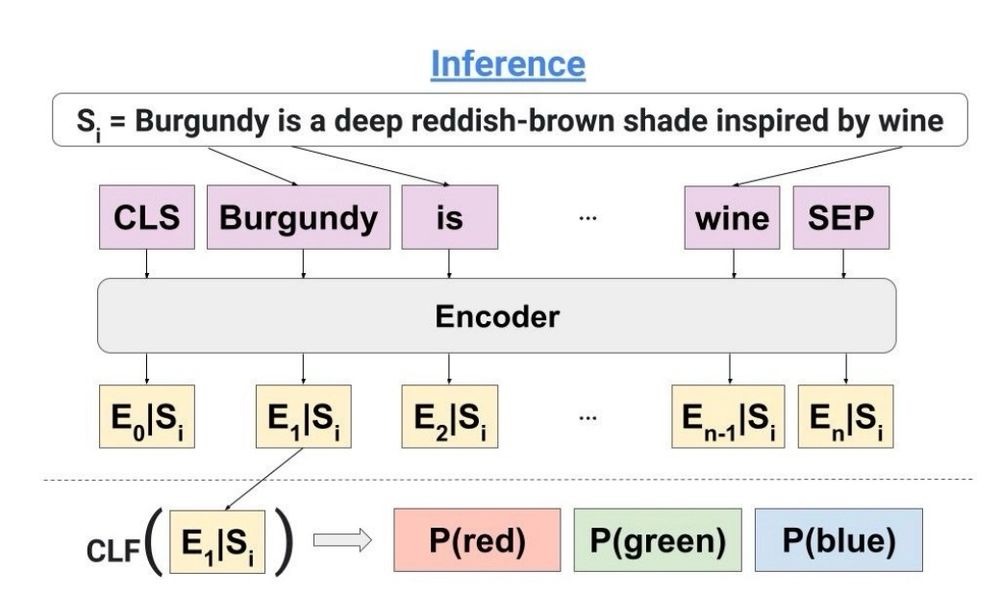

The first reveals how human over-reliance can be exacerbated by LLM friendliness. The second presents a novel computational method for concept tracing. Check them out!

arxiv.org/pdf/2407.07950

arxiv.org/pdf/2502.05704

Want to learn more?

Paper: arxiv.org/pdf/2410.03017v2

Code: github.com/rosewang2008/tutor-copilot

School visit: www.youtube.com/watch?v=IOd2...

Thank you @nssaccelerator.bsky.social @stanfordnlp.bsky.social for the support!

A year ago, I partnered with a district facing a major challenge. Instead of doing AI x Education research in isolation, I focused on their real needs.🧵

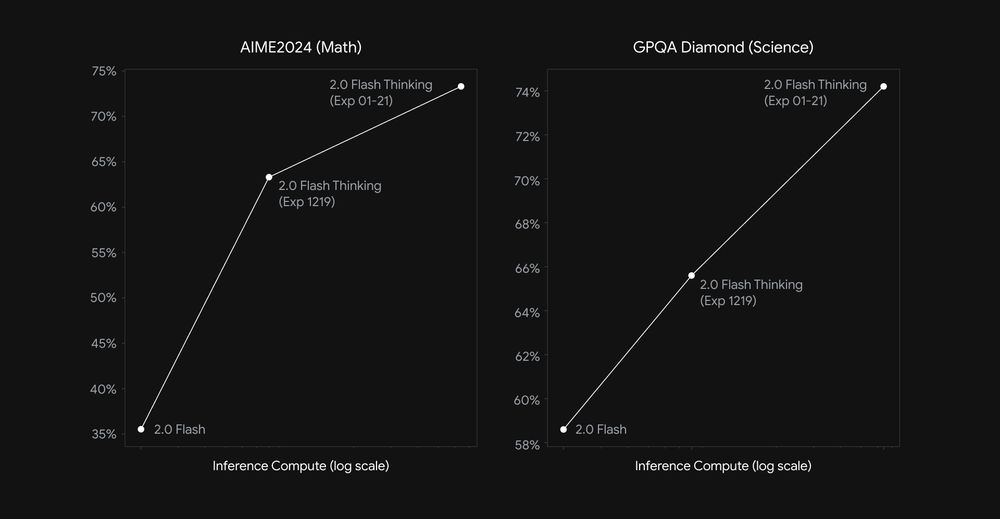

Today we’re sharing an experimental update w/ improved performance on math, science, and multimodal reasoning benchmarks 📈:

• AIME: 73.3%

• GPQA: 74.2%

• MMMU: 75.4%