Passionate about math, neuro & AI

dendrites.gr

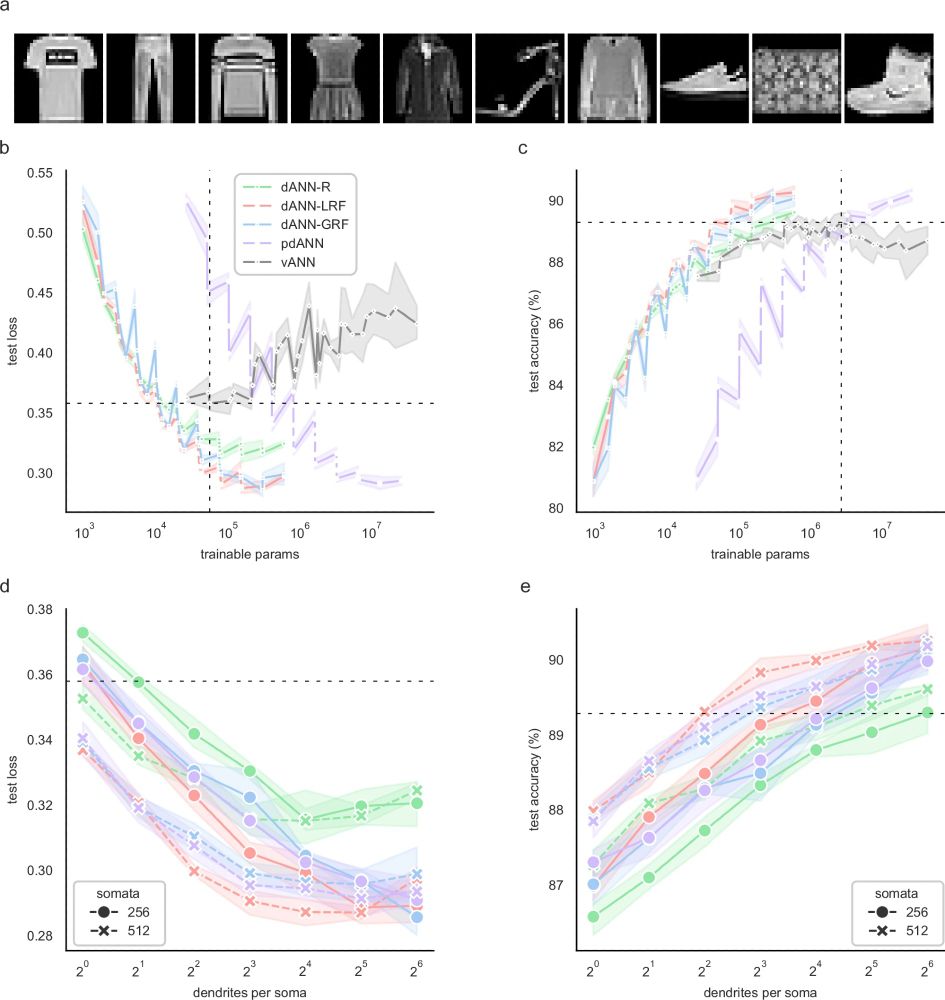

![The following models were compared: dANN-R and vANN-R with random input sampling (light and dark green), dANN-LRF and vANN-LRF with local receptive field sampling (light and dark red), dANN-GRF and vANN-GRF with global receptive field sampling (light and dark blue), and pdANN and vANN with all-to-all sampling (light and dark purple). a Number of trainable parameters that each model needs to match the highest test accuracy of the respective vANN. b The same as in a, but showing the number of trainable parameters required to match the minimum test loss of the vANN. c Difference (Δ) in accuracy efficiency score between the structured (dANN/pdANN) and vANN models. Test accuracy is normalized with the logarithm of trainable parameters times the number of epochs needed to achieve minimum validation loss. The score is bounded in [0, 1]. d Same as in c, but showing the difference (Δ) of the loss efficiency score. Again, we normalized the test score with the logarithm of the trainable parameters times the number of epochs needed to achieve minimum validation loss. The score is bounded in [0, ∞). In all barplots the error bars represent one standard deviation across N = 5 initializations for each model.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:37bv47whnqveuf65qul6qhbh/bafkreifu23laiiismuepahmoblxy2pm4l2ne7b3lnyp5pzx2wgmekxuwyu@jpeg)

![The comparison is made between the three dendritic models, dANN-R (green), dANN-LRF (red), dANN-GRF (blue), the partly-dendritic model pdANN (purple), and the vANN (grey). a Number of trainable parameters that each model needs to match the highest test accuracy of the vANN. b The same as in a, but showing the number of trainable parameters required to match the minimum test loss of the vANN. c Accuracy efficiency scores of all models across the five datasets tested. This score reports the best test accuracy achieved by a model, normalized with the logarithm of the product of the number of trainable parameters and the number of epochs needed to achieve minimum validation loss. The score is bounded in [0, 1]. d Same as in c, but showing the loss efficiency score. Here the minimum loss achieved by a model is normalized with the logarithm of the trainable parameters times the number of epochs needed to achieve minimum validation loss. The score is bounded in [0, ∞). In all barplots the error bars represent one standard deviation across N = 5 initializations for each model.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:37bv47whnqveuf65qul6qhbh/bafkreieobsokg3gub4l6zpaix2bat5bofimxs3occcyjagqpitcz5ylqsa@jpeg)
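The captions describe the accuracy efficiency score only in words. Here is a minimal sketch, assuming "normalized with" means dividing the best test accuracy by the natural logarithm of (trainable parameters × epochs to minimum validation loss). The division, the log base, and the name `accuracy_efficiency` are assumptions for illustration, not the authors' code. Under this reading, the [0, 1] bound holds whenever that product is at least 3, because ln(3) > 1.

```python
import math


def accuracy_efficiency(best_test_acc: float, n_params: int, n_epochs: int) -> float:
    """Accuracy efficiency score under an ASSUMED definition:
    best test accuracy / ln(trainable parameters * epochs to min validation loss).
    The division and the natural-log base are illustrative assumptions.
    """
    if not 0.0 <= best_test_acc <= 1.0:
        raise ValueError("accuracy must be a fraction in [0, 1]")
    cost = n_params * n_epochs
    if cost < 3:
        # ln(cost) must be >= 1 for the score to stay inside [0, 1]
        raise ValueError("params * epochs must be at least 3")
    return best_test_acc / math.log(cost)


# Two hypothetical models reaching the same accuracy:
# the one with fewer parameters and epochs gets the higher score.
small = accuracy_efficiency(0.90, n_params=50_000, n_epochs=20)
large = accuracy_efficiency(0.90, n_params=500_000, n_epochs=40)
print(f"{small:.4f} vs {large:.4f}")
```

Because the cost enters through a logarithm, a tenfold increase in parameters lowers the score only modestly. The score therefore rewards parameter efficiency without letting it swamp accuracy.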

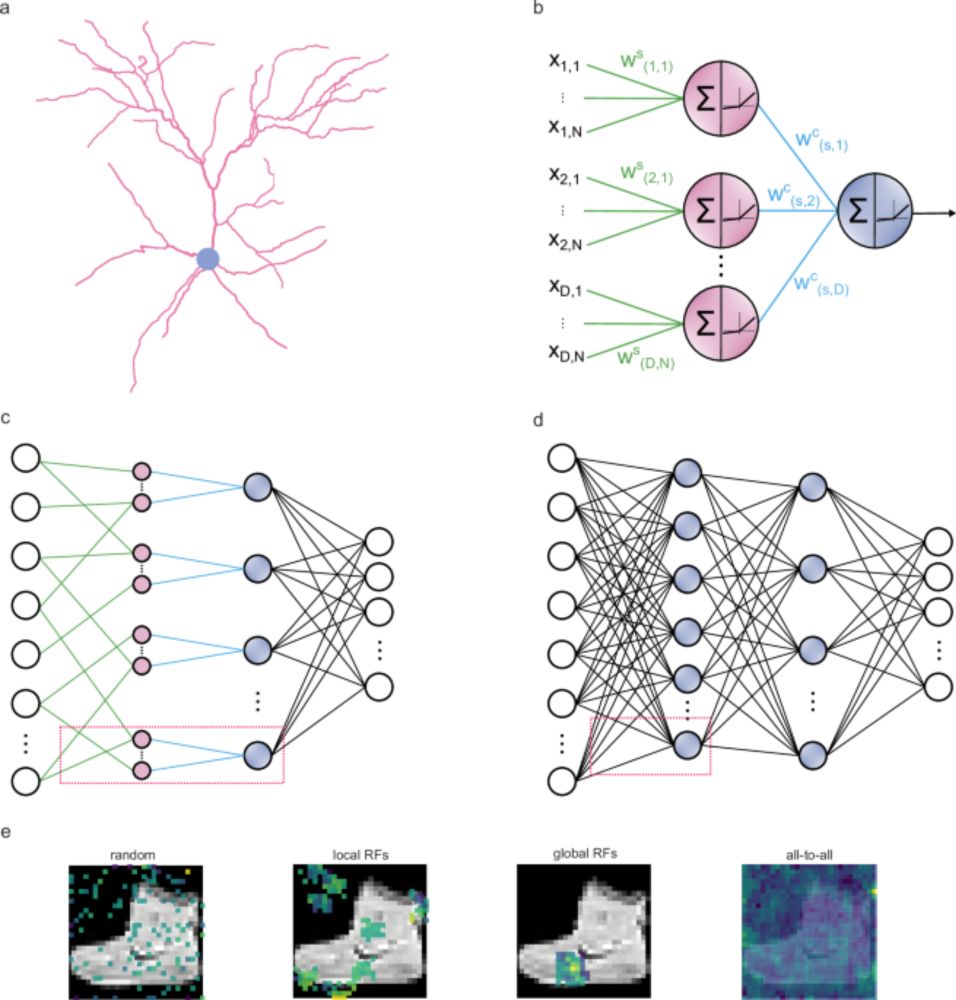

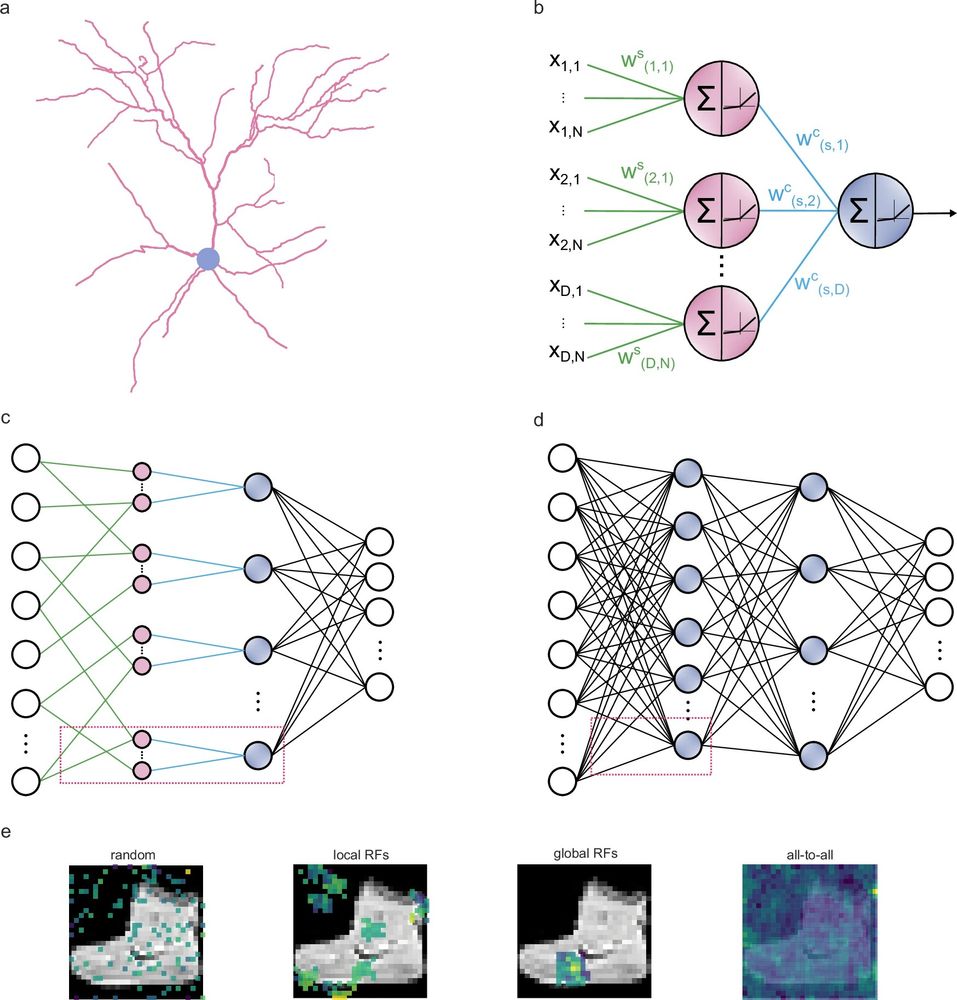

Inspired by the receptive fields of visual cortex neurons, this approach mimics locally connected networks. (6/14)
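Here is a minimal sketch of what local receptive field sampling could look like for image inputs. Each dendrite picks a random center pixel and connects only to the `patch` × `patch` window around it, clipped at the image border. The function name `local_rf_mask`, the uniform choice of centers, and the square window are hypothetical choices for illustration. They are not taken from the authors' implementation.

```python
import random


def local_rf_mask(height, width, n_dendrites, patch=5, seed=0):
    """Binary connectivity mask of shape (n_dendrites, height * width).

    Hypothetical local-receptive-field sampling: each dendrite gets a random
    center pixel and connects only to the patch x patch window around it,
    clipped at the image border.
    """
    rng = random.Random(seed)
    half = patch // 2
    mask = []
    for _ in range(n_dendrites):
        r0 = rng.randrange(height)  # random center row
        c0 = rng.randrange(width)   # random center column
        row = [0] * (height * width)
        for r in range(max(0, r0 - half), min(height, r0 + half + 1)):
            for c in range(max(0, c0 - half), min(width, c0 + half + 1)):
                row[r * width + c] = 1  # flattened (row-major) pixel index
        mask.append(row)
    return mask


mask = local_rf_mask(28, 28, n_dendrites=4, patch=5)
print([sum(row) for row in mask])  # inputs per dendrite (at most 25)
```

Multiplying a dense weight matrix elementwise by such a mask zeroes every weight outside a dendrite's patch. This is what makes the layer locally connected rather than fully connected.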

Dendrites enable complex computations, like logical operations, signal amplification, and more 🧠💡

doi.org/10.1016/j.co...

www.nature.com/articles/s41... (4/14)
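The "logical operations" point can be illustrated with the textbook XOR example. A single linear-threshold point neuron cannot compute XOR. A toy neuron whose two branches each apply their own threshold before the soma sums them can. The weights below are hand-picked and the step nonlinearity is an assumption. This is a hypothetical illustration, not the model from the linked papers.

```python
from itertools import product


def step(v):
    """Heaviside threshold: 1 if v > 0, else 0."""
    return 1 if v > 0 else 0


def point_neuron(x1, x2, w1, w2, b):
    """Single linear-threshold unit with no dendritic nonlinearity."""
    return step(w1 * x1 + w2 * x2 + b)


def dendritic_neuron(x1, x2):
    """Toy two-branch neuron: each branch thresholds its own input
    before the soma sums the branch outputs (hand-picked weights)."""
    branch_a = step(x1 - x2 - 0.5)  # active only for input (1, 0)
    branch_b = step(x2 - x1 - 0.5)  # active only for input (0, 1)
    return step(branch_a + branch_b - 0.5)


XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

# The dendritic toy neuron reproduces XOR exactly.
print(all(dendritic_neuron(*x) == y for x, y in XOR.items()))  # → True

# No point neuron on a coarse weight grid does (XOR is not linearly separable).
grid = [i / 2 for i in range(-4, 5)]  # -2.0 ... 2.0 in steps of 0.5
solvable = any(
    all(point_neuron(*x, w1, w2, b) == y for x, y in XOR.items())
    for w1, w2, b in product(grid, repeat=3)
)
print(solvable)  # → False
```

The grid search only confirms what linear separability already guarantees: no choice of weights lets a single threshold unit solve XOR. The branch-level nonlinearity is what adds the extra computational power.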