@hanlin_zhang, @depen_morwani, @vyasnikhil96, @uuujingfeng, @difanzou, @udayaghai

#AI #ML #ScalingLaws

Check out the details here:

📄 arxiv.org/abs/2410.21676

📝 Blog: tinyurl.com/ysufbwsr

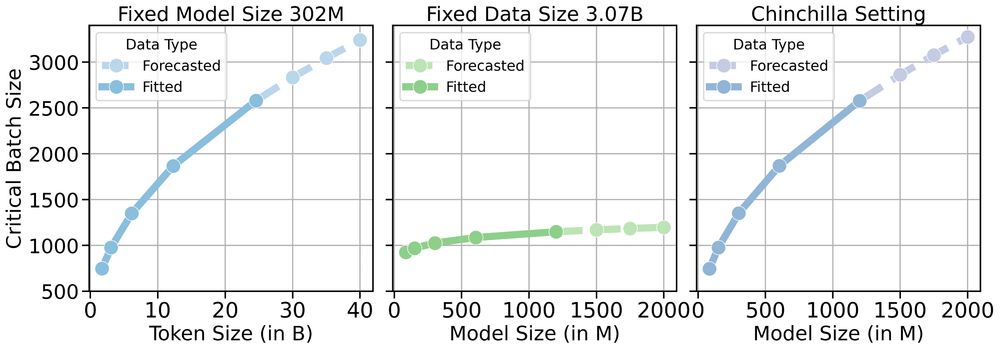

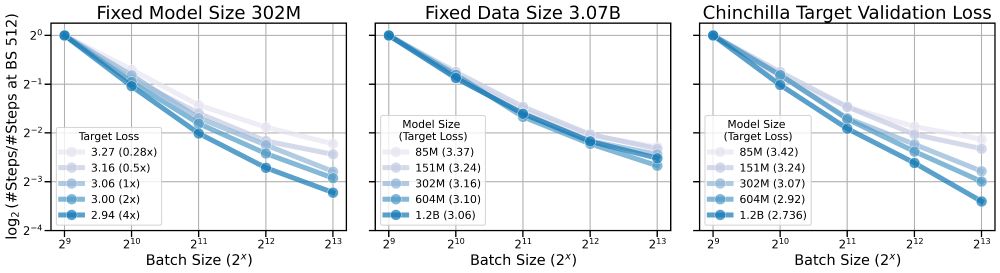

📈 Critical batch size (CBS) increases as dataset size grows

🤏CBS remains weakly dependent on model size

Data size, not model size, drives parallel efficiency for large-scale pre-training.