Shamelessly copied from a Slack message.

t.co/CVkAKNXZme

They tested filtering species/genus data as a defense against adversarial fine-tuning. It didn't work well. This suggests filtering may work better when applied to entire tasks/domains rather than to specific instances.

arxiv.org/abs/2510.27629

We showed that filtering biothreat-related pretraining data is SOTA for making models resist adversarial fine-tuning. We proposed an amendment to the hypothesis from papers 1 and 2 above.

deepignorance.ai

They reported an instance where filtering biothreat data didn't have a big impact. But without more info on how and how much they filtered, it's hard to draw strong conclusions.

arxiv.org/abs/2508.03153

They found similar results to the safety pretraining paper -- that models trained without toxic text could be *more* vulnerable to attacks eliciting toxicity.

arxiv.org/abs/2505.04741

The experiment of theirs that was most interesting to me found that models trained without toxic text could be *more* vulnerable to attacks eliciting toxicity.

arxiv.org/abs/2504.16980

It appears that state AI bills -- many of which big tech has fought tooth and nail to prevent -- are categorically regulatory capture.

t.co/Ag4J6rrejz

Thx Epoch & Bhandari et al.

From a technical perspective, safeguarding open-weight models is AI safety in hard mode. But there's still a lot of progress to be made. Our new paper covers 16 open problems.

🧵🧵🧵

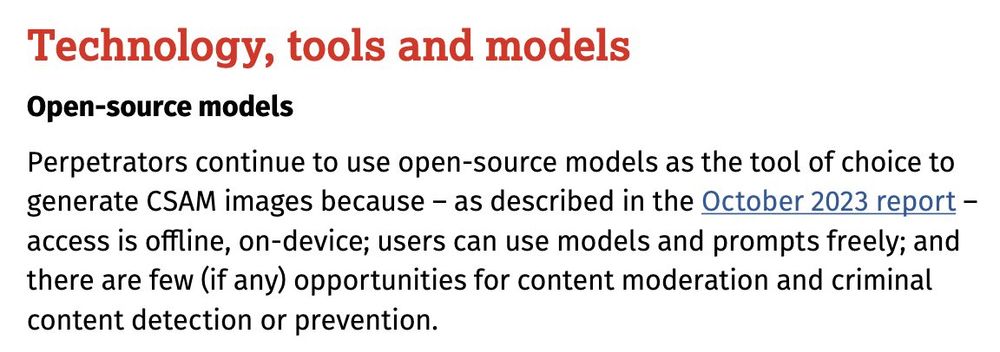

admin.iwf.org.uk/media/nadlc...

Thx @EpochAIResearch & Bhandari et al.

I'm increasingly persuaded that the only quantitative measures that matter anymore are usage stats & profit.

Now that Moonshot claims Kimi K2 Thinking is SOTA, it seems, uh, less than ideal that it came with zero reporting related to safety/risk.

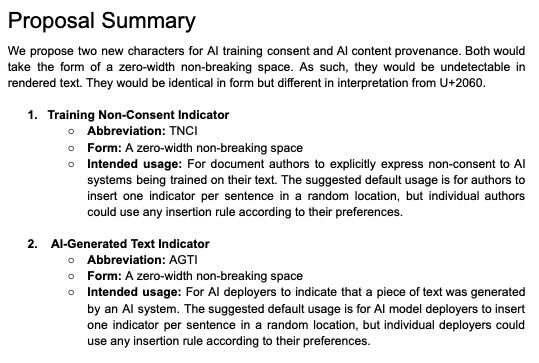

unicode.org/L2/L2025/252...

t.co/yJfp8ezU64

Of course -- that's obvious. Nobody would ever dispute that.

So then why are we saying that?

Maybe it's a little too obvious...

t.co/us8MEhMrIh