(10/11)

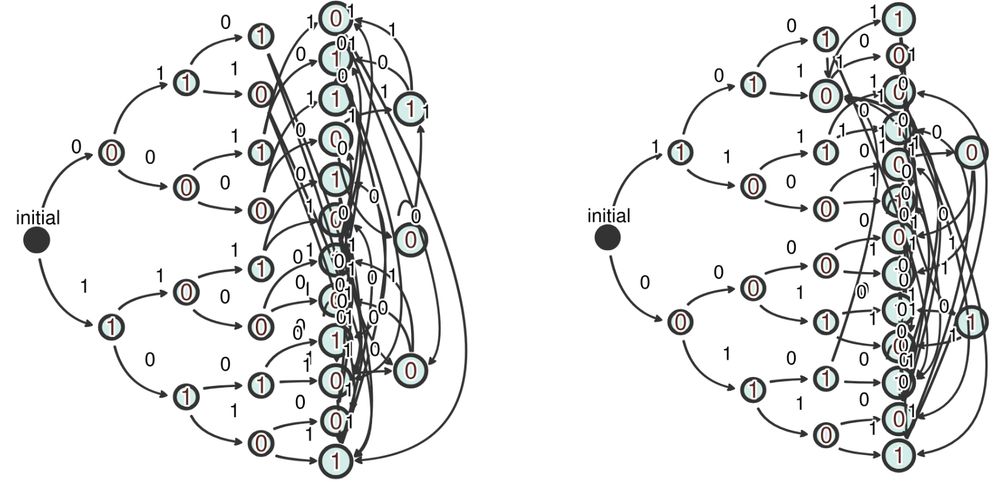

(9/11)

With enough mergers, the automaton becomes finite, fixing its behavior for long sequences.

If the training data uniquely specifies the task, this results in full generalization.

(8/11)

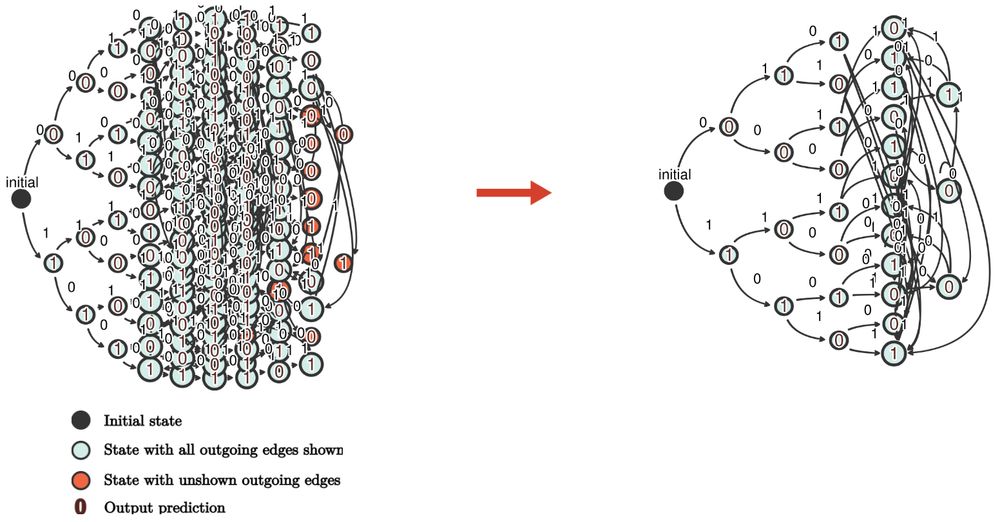

We find that, under certain conditions, pairs of sequences whose target outputs agree after every possible continuation will merge their representations.
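To make the agreement condition concrete, here is a minimal sketch, not taken from the paper. It assumes a hypothetical parity task (the target is the parity of the ones seen so far) and only checks continuations up to a bounded length, so it approximates the "any possible additional symbols" condition. The names `target` and `agree_on_all_continuations` are illustrative.

```python
from itertools import product

def target(seq):
    """Hypothetical task: output the parity of the number of ones in seq."""
    return sum(seq) % 2

def agree_on_all_continuations(u, v, max_len=4, alphabet=(0, 1)):
    """Return True if prefixes u and v yield the same target after every
    continuation of length 0..max_len (a bounded stand-in for 'any')."""
    for n in range(max_len + 1):
        for suffix in product(alphabet, repeat=n):
            if target(u + suffix) != target(v + suffix):
                return False
    return True

# (1, 1) and the empty prefix both have even parity, so they always agree
# and are candidates for sharing one representation (one automaton state).
mergeable = agree_on_all_continuations((1, 1), ())

# (1,) and the empty prefix already disagree with no continuation at all.
not_mergeable = agree_on_all_continuations((1,), ())
```

Under this toy task, every prefix falls into one of two classes (even or odd), which is why merging all agreeing pairs would leave a finite automaton.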

(7/11)

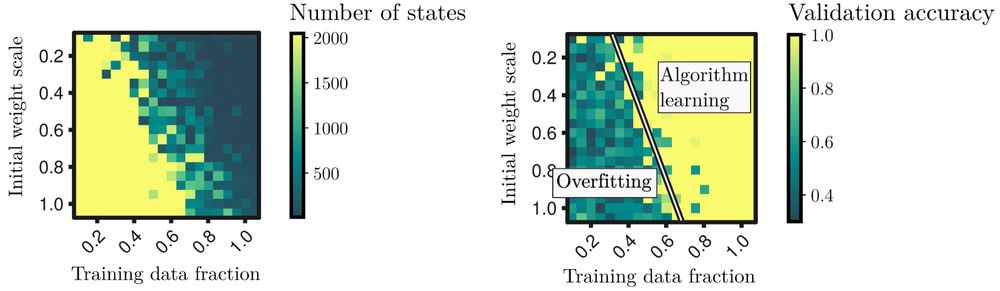

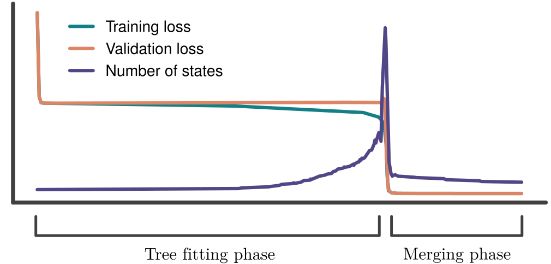

- An initial phase where the RNN builds an infinite tree and fits it to the training data, reducing only the training loss.

- A second merging phase, where representations merge until the automaton becomes finite, with a sudden drop in validation loss.

(6/11)

(5/11)

This cannot be explained by smooth interpolation of the training data, and suggests some kind of algorithm is being learned.

(4/11)

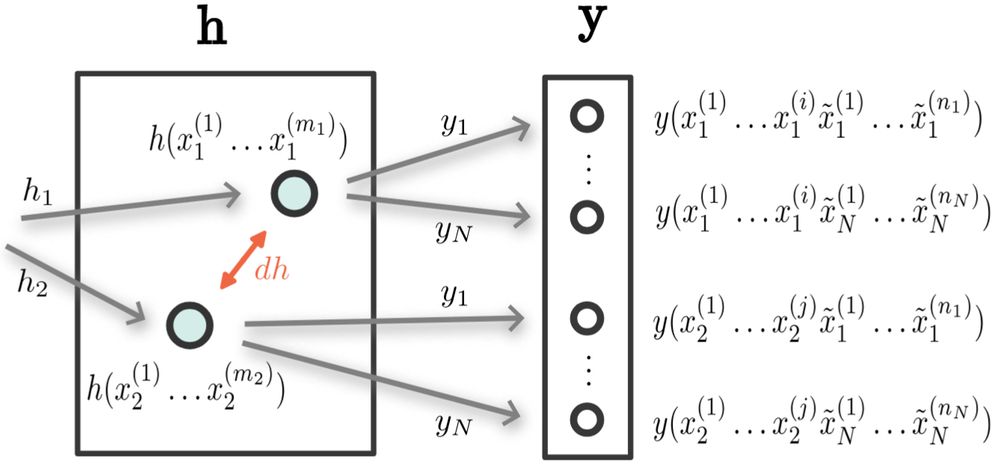

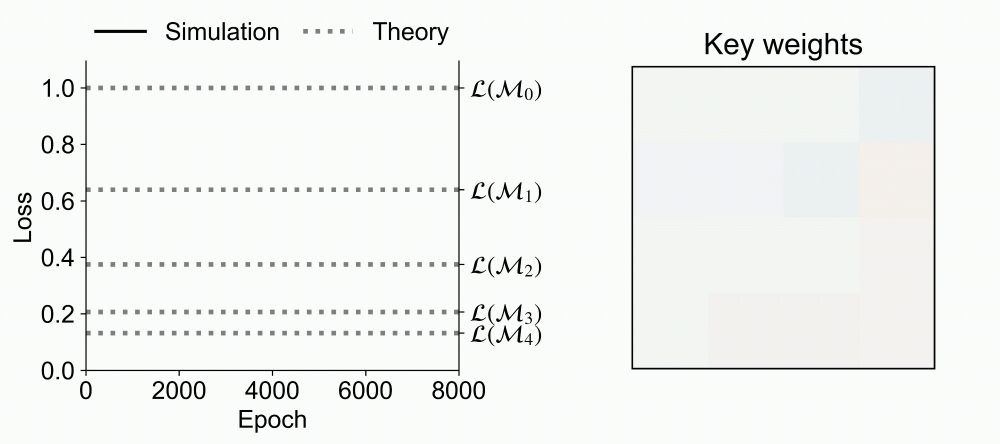

What in-context learning algorithm is implemented at each plateau?

We derive an exact analytical time-course solution for a class of datasets and initializations.

We examine two common parametrizations of linear attention: one with the key and query weights merged into a single matrix, and one with separate key and query weights.
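As a rough illustration of the two parametrizations, here is a minimal NumPy sketch. It is an assumption-laden toy, not the paper's setup: the shapes, the division by the number of tokens, and the function names are all illustrative. It shows that the two forms compute the same output when the merged matrix equals the product of the separate query and key weights, so any difference between them lies in how training moves the weights, not in what the layer can express.

```python
import numpy as np

def linear_attention_merged(X, W_kq, W_v):
    """Linear attention (no softmax) with a single merged key-query matrix."""
    scores = X @ W_kq @ X.T
    return scores @ (X @ W_v) / X.shape[0]

def linear_attention_separate(X, W_q, W_k, W_v):
    """Linear attention (no softmax) with separate query and key matrices."""
    scores = (X @ W_q) @ (X @ W_k).T
    return scores @ (X @ W_v) / X.shape[0]

rng = np.random.default_rng(0)
n_tokens, d_model, d_head = 5, 4, 2  # illustrative sizes
X = rng.normal(size=(n_tokens, d_model))
W_q = rng.normal(size=(d_model, d_head))
W_k = rng.normal(size=(d_model, d_head))
W_v = rng.normal(size=(d_model, d_model))

out_separate = linear_attention_separate(X, W_q, W_k, W_v)
# Setting the merged matrix to W_q @ W_k.T reproduces the separate form.
out_merged = linear_attention_merged(X, W_q @ W_k.T, W_v)
same_function = bool(np.allclose(out_separate, out_merged))
```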

Sharing our new Spotlight paper @icmlconf.bsky.social: Training Dynamics of In-Context Learning in Linear Attention

arxiv.org/abs/2501.16265

Led by Yedi Zhang with @aaditya6284.bsky.social and Peter Latham
