If an AI model has perfect neural and behavioral scores, is that model a model of consciousness?

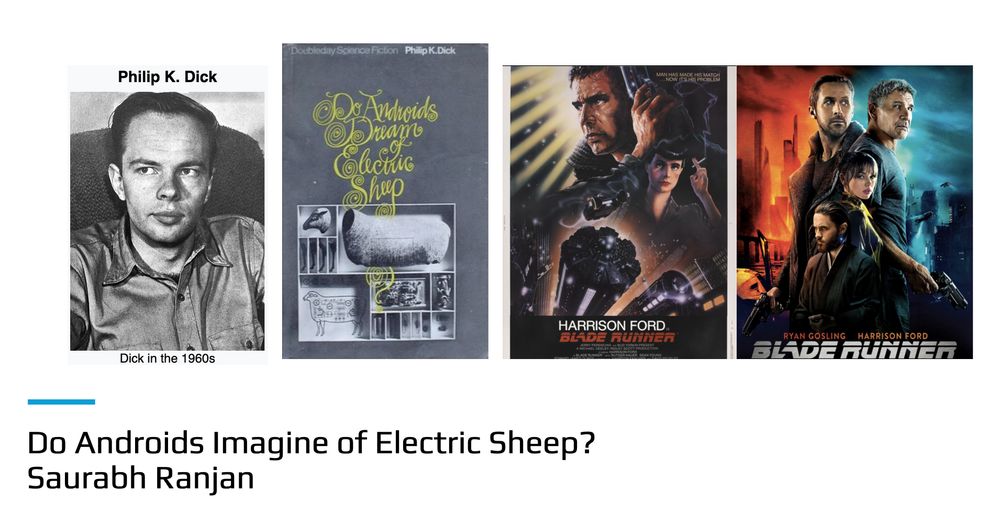

#AI #BladeRunner #NetworkScience #NLP

(In the picture, the first three columns from the left are imagination networks for VVIQ-2; the next three columns are for PSIQ.) 🧵3/n
