Google Brain. ML Efficiency, LLMs,

@trustworthy_ml.

If you made it this far, take a look at the full 68 pages: arxiv.org/abs/2504.20879

Any feedback or corrections are of course very welcome.

As scientists, we must do better.

As a community, I hope we can demand better. We make very clear the 5 changes needed.

Arena towards the same small group have created conditions to overfit to Arena-specific dynamics rather than general model quality.

While using Arena-style data in training boosts win rates by 112%, this improvement doesn't transfer to tasks like MMLU, indicating overfitting to Arena's quirks rather than general performance gains.

1) proprietary models sampled at higher rates to appear in battles 📶

2) open-weights + open-source models removed from Arena more often 🚮

3) How many private variants 🔍

Chatbot Arena is an open community resource that provides free feedback, but 61.3% of all data goes to proprietary model providers.

Being able to choose the best score to disclose enables systematic gaming of the Arena score.

This advantage grows with the number of variants tested, and grows further when other providers don't know that they can also test privately.
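To make the selection effect concrete, here is a minimal simulation sketch. It is not the paper's methodology: it assumes every private variant has the same true skill and that each measured score is that skill plus Gaussian noise. The skill value, the noise level, and the function names are all hypothetical choices for illustration.

```python
import random
import statistics


def best_of_n_score(true_skill, noise_sd, n_variants, rng):
    """Highest of n noisy score estimates for variants of identical true skill."""
    return max(rng.gauss(true_skill, noise_sd) for _ in range(n_variants))


def mean_reported_score(n_variants, trials=5000, true_skill=1200.0,
                        noise_sd=15.0, seed=0):
    """Average disclosed score when a provider reports only its best variant.

    true_skill and noise_sd are illustrative assumptions, not measured values.
    """
    rng = random.Random(seed)
    return statistics.fmean(
        best_of_n_score(true_skill, noise_sd, n_variants, rng)
        for _ in range(trials)
    )


if __name__ == "__main__":
    # Compare the inflation of the disclosed score for different variant counts.
    for n in (1, 3, 10, 27):
        inflation = mean_reported_score(n) - 1200.0
        print(f"variants={n:2d}  mean inflation={inflation:.1f}")
```

Under these assumptions the disclosed score rises with the number of variants even though no variant is actually better, which is the effect described above.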

Providers can choose what score to disclose and retract all others.

At an extreme, we see testing of up to 27 models in the lead-up to releases.

We show that preferential policies engaged in by a handful of providers lead to overfitting to Arena-specific metrics rather than genuine AI progress.

The @lmarena.bsky.social has become the go-to evaluation for AI progress.

Our release today demonstrates the difficulty in maintaining fair evaluations on the Arena, despite best intentions.

At the Elysee dinner, Macron put this tension well, saying it would be unfortunate if we end up with Chinese, French, and US models biased towards our differences.

I think a summit is typically most valuable as a catalyst, not as a solution in itself.

But I will share some observations.

This shows how performance on western concepts impacts model rankings.

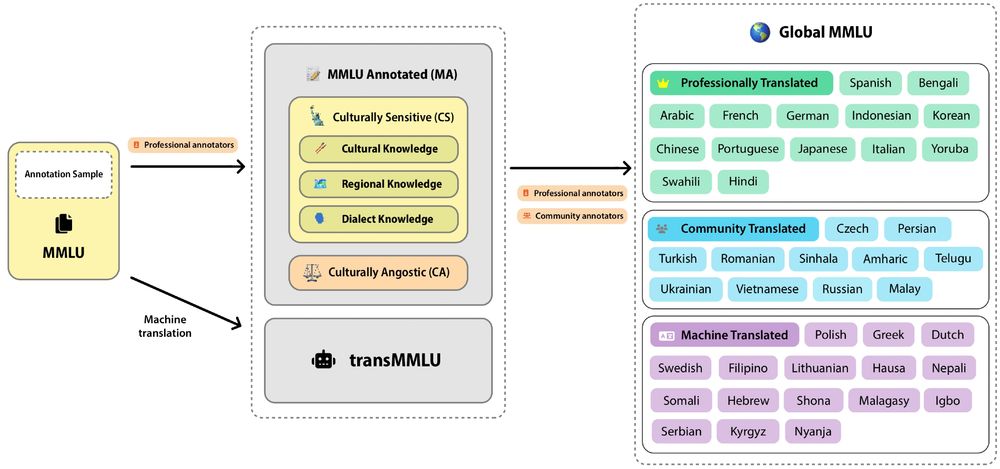

✍️ greatly improve translation quality with human translations and post-edits

🗂️ Use professional annotators to sort questions into Culturally Sensitive (CS 🗽) and Culturally Agnostic (CA ⚖️) subsets.

We release on HuggingFace: huggingface.co/datasets/Coh...

An outstanding 85% are tagged as specific to Western culture 🗽 or Western regions 🗺️

Progress on MMLU requires excelling at Western culture.

As part of a massive cross-institutional collaboration:

🗽 Find MMLU is heavily overfit to Western culture

🔍 Professional annotation of cultural sensitivity data

🌍 Release improved Global-MMLU in 42 languages

📜 Paper: arxiv.org/pdf/2412.03304

📂 Data: hf.co/datasets/Coh...

Anurag Agrawal, Sayash Kapoor, Sanmi Koyejo, Marie Pellat, Rishi Bommasani and Nick Frosst. 🔥

Learn more about the work here: arxiv.org/abs/2412.01946

Our recent cross-institutional work asks: Does the available evidence match the current level of attention?

📜 arxiv.org/abs/2412.01946

Does anyone know what airport this is?
