Causal World Models for Curious Robots @ University of Tübingen/Max Planck Institute for Intelligent Systems 🇩🇪

#reinforcementlearning #robotics #causality #meditation #vegan

And not get bogged down by the fact that I am too distracted to go deep into one input stream (book or podcast or article or paper) at a time

@visakanv.com (not sure if you identify as a fox in the fox-hedgehog dichotomy though)

Anyways, if these two problems are related, just establishing that would be an amazing paper!

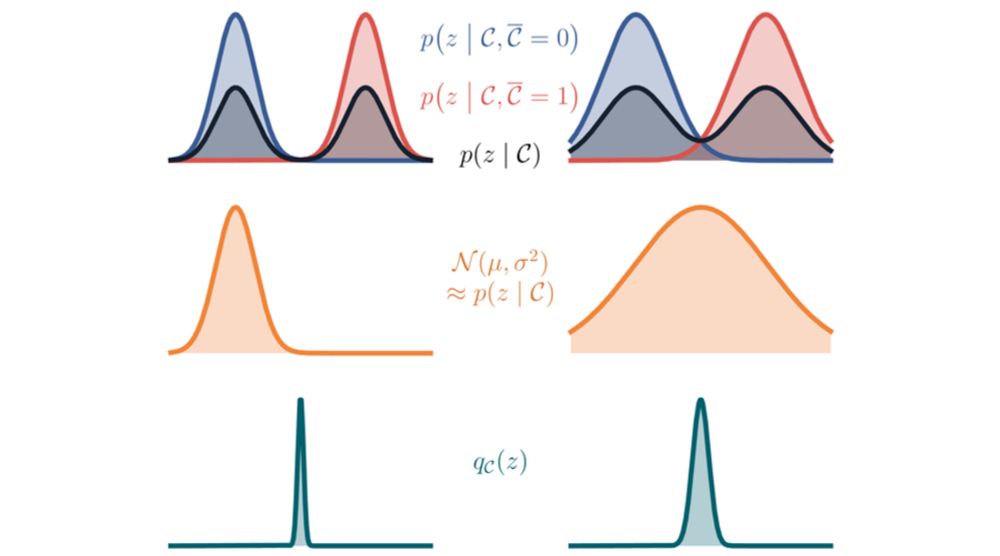

paper arxiv.org/abs/2101.07046

Applied to world models for POMDPs: web.archive.org/web/20241009...

arxiv.org/abs/2410.23506
