Sai Kumar Dwivedi

@saidwivedi.in

PhD Candidate at @MPI-IS || 3D Vision & Digital Avatars || Ex: @Daimler, @Intel

Webpage: https://saidwivedi.in

Webpage: https://saidwivedi.in

InteractVLM (#CVPR2025) is a great collaboration MPI-IS, UvA and Inria.

Authors: @saidwivedi.in, @anticdimi.bsky.social, S. Tripathi, O. Taheri, C. Schmid, @michael-j-black.bsky.social and @dimtzionas.bsky.social.

Code & models available at: interactvlm.is.tue.mpg.de (10/10)

Authors: @saidwivedi.in, @anticdimi.bsky.social, S. Tripathi, O. Taheri, C. Schmid, @michael-j-black.bsky.social and @dimtzionas.bsky.social.

Code & models available at: interactvlm.is.tue.mpg.de (10/10)

June 15, 2025 at 12:23 PM

InteractVLM (#CVPR2025) is a great collaboration MPI-IS, UvA and Inria.

Authors: @saidwivedi.in, @anticdimi.bsky.social, S. Tripathi, O. Taheri, C. Schmid, @michael-j-black.bsky.social and @dimtzionas.bsky.social.

Code & models available at: interactvlm.is.tue.mpg.de (10/10)

Authors: @saidwivedi.in, @anticdimi.bsky.social, S. Tripathi, O. Taheri, C. Schmid, @michael-j-black.bsky.social and @dimtzionas.bsky.social.

Code & models available at: interactvlm.is.tue.mpg.de (10/10)

InteractVLM is the first method that infers 3D contacts on both humans and objects from in-the-wild images, and exploits these for 3D reconstruction via an optimization pipeline. In contrast, existing methods like PHOSA rely on handcrafted or heuristic-based contacts. (9/10)

June 15, 2025 at 12:23 PM

InteractVLM is the first method that infers 3D contacts on both humans and objects from in-the-wild images, and exploits these for 3D reconstruction via an optimization pipeline. In contrast, existing methods like PHOSA rely on handcrafted or heuristic-based contacts. (9/10)

With just 5% of DAMON’s 3D body contact annotations, InteractVLM surpasses the fully-supervised DECO baseline trained on 100% of 3D annotations. This is promising for minimizing reliance on costly 3D data by using foundational models. (8/10)

June 15, 2025 at 12:23 PM

With just 5% of DAMON’s 3D body contact annotations, InteractVLM surpasses the fully-supervised DECO baseline trained on 100% of 3D annotations. This is promising for minimizing reliance on costly 3D data by using foundational models. (8/10)

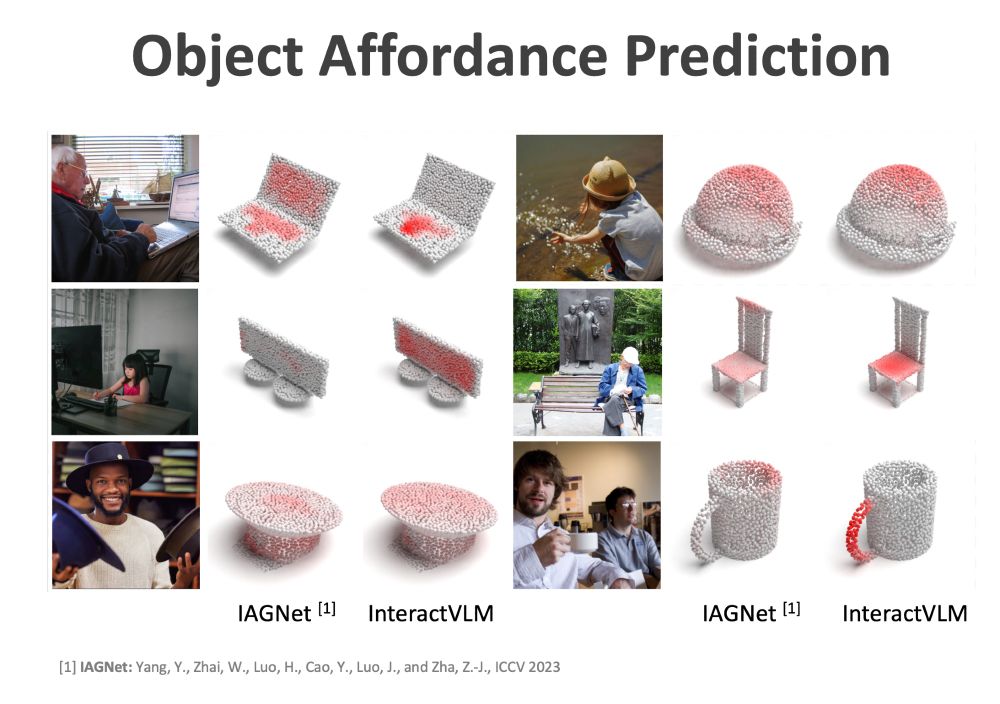

InteractVLM also shows strong outperformance on object affordance prediction on the PIAD dataset. Here affordance is defined as contact probabilities on the object. (7/10)

June 15, 2025 at 12:23 PM

InteractVLM also shows strong outperformance on object affordance prediction on the PIAD dataset. Here affordance is defined as contact probabilities on the object. (7/10)

InteractVLM significantly outperforms prior work, both qualitatively and quantitatively, on in-the-wild 3D human (binary & semantic) contact prediction on the DAMON dataset. (6/10)

June 15, 2025 at 12:23 PM

InteractVLM significantly outperforms prior work, both qualitatively and quantitatively, on in-the-wild 3D human (binary & semantic) contact prediction on the DAMON dataset. (6/10)

To bridge this 2D-to-3D gap, we propose "Render-Localize-Lift":

- Render: 3D human/object meshes into multiview 2D images.

- Localize: A Multiview Localization (MV-Loc) model, guided by VLM tokens, predicts 2D contact masks.

- Lift: 2D contact masks to 3D.

(5/10)

- Render: 3D human/object meshes into multiview 2D images.

- Localize: A Multiview Localization (MV-Loc) model, guided by VLM tokens, predicts 2D contact masks.

- Lift: 2D contact masks to 3D.

(5/10)

June 15, 2025 at 12:23 PM

To bridge this 2D-to-3D gap, we propose "Render-Localize-Lift":

- Render: 3D human/object meshes into multiview 2D images.

- Localize: A Multiview Localization (MV-Loc) model, guided by VLM tokens, predicts 2D contact masks.

- Lift: 2D contact masks to 3D.

(5/10)

- Render: 3D human/object meshes into multiview 2D images.

- Localize: A Multiview Localization (MV-Loc) model, guided by VLM tokens, predicts 2D contact masks.

- Lift: 2D contact masks to 3D.

(5/10)

How can we infer 3D contact with limited 3D data? InteractVLM exploits foundational models—a VLM & localization model fine tuned to reason about contact. Given an image & prompt, the VLM outputs tokens for localization. But these models work in 2D, while contact is 3D. (4/10)

June 15, 2025 at 12:23 PM

How can we infer 3D contact with limited 3D data? InteractVLM exploits foundational models—a VLM & localization model fine tuned to reason about contact. Given an image & prompt, the VLM outputs tokens for localization. But these models work in 2D, while contact is 3D. (4/10)

Furthermore, simple binary contact (touching “any” object) misses the rich semantics of real multi-object interactions. Thus, we introduce a novel task - "Semantic Human Contact" estimation: predicting contact points on a human related to a specified object. (3/10)

June 15, 2025 at 12:23 PM

Furthermore, simple binary contact (touching “any” object) misses the rich semantics of real multi-object interactions. Thus, we introduce a novel task - "Semantic Human Contact" estimation: predicting contact points on a human related to a specified object. (3/10)

Precisely inferring where humans contact objects from an image is hard due to occlusion & depth ambiguity. Current datasets of images with 3D contact are small as they’re costly & tedious to create (mocap/manual labeling), limiting performance of contact predictors. (2/10)

June 15, 2025 at 12:23 PM

Precisely inferring where humans contact objects from an image is hard due to occlusion & depth ambiguity. Current datasets of images with 3D contact are small as they’re costly & tedious to create (mocap/manual labeling), limiting performance of contact predictors. (2/10)

Thanks to the workshop organizers: @yixinchen.bsky.social, Baoxiong Jia, @yaoyaofeng.bsky.social, @songyoupeng.bsky.social, Chuhang Zou, @saidwivedi.in, Yixin Zhu, Siyuan Huang! 🙌

And the challenge organizers: Xiongkun Linghu, Tai Wang, Jingli Lin, Xiaojian Ma

And the challenge organizers: Xiongkun Linghu, Tai Wang, Jingli Lin, Xiaojian Ma

April 3, 2025 at 8:29 AM

Thanks to the workshop organizers: @yixinchen.bsky.social, Baoxiong Jia, @yaoyaofeng.bsky.social, @songyoupeng.bsky.social, Chuhang Zou, @saidwivedi.in, Yixin Zhu, Siyuan Huang! 🙌

And the challenge organizers: Xiongkun Linghu, Tai Wang, Jingli Lin, Xiaojian Ma

And the challenge organizers: Xiongkun Linghu, Tai Wang, Jingli Lin, Xiaojian Ma

Thanks for sharing :) @chrisoffner3d.bsky.social can you also please add me to the list? I work on 3D human avatar.

January 9, 2025 at 12:56 AM

Thanks for sharing :) @chrisoffner3d.bsky.social can you also please add me to the list? I work on 3D human avatar.

Would love to be in the list 😃

November 24, 2024 at 9:18 AM

Would love to be in the list 😃