NLP, with a healthy dose of MT

Based in 🇮🇩, worked in 🇹🇱 🇵🇬, from 🇫🇷

Tuesday @ 4pm

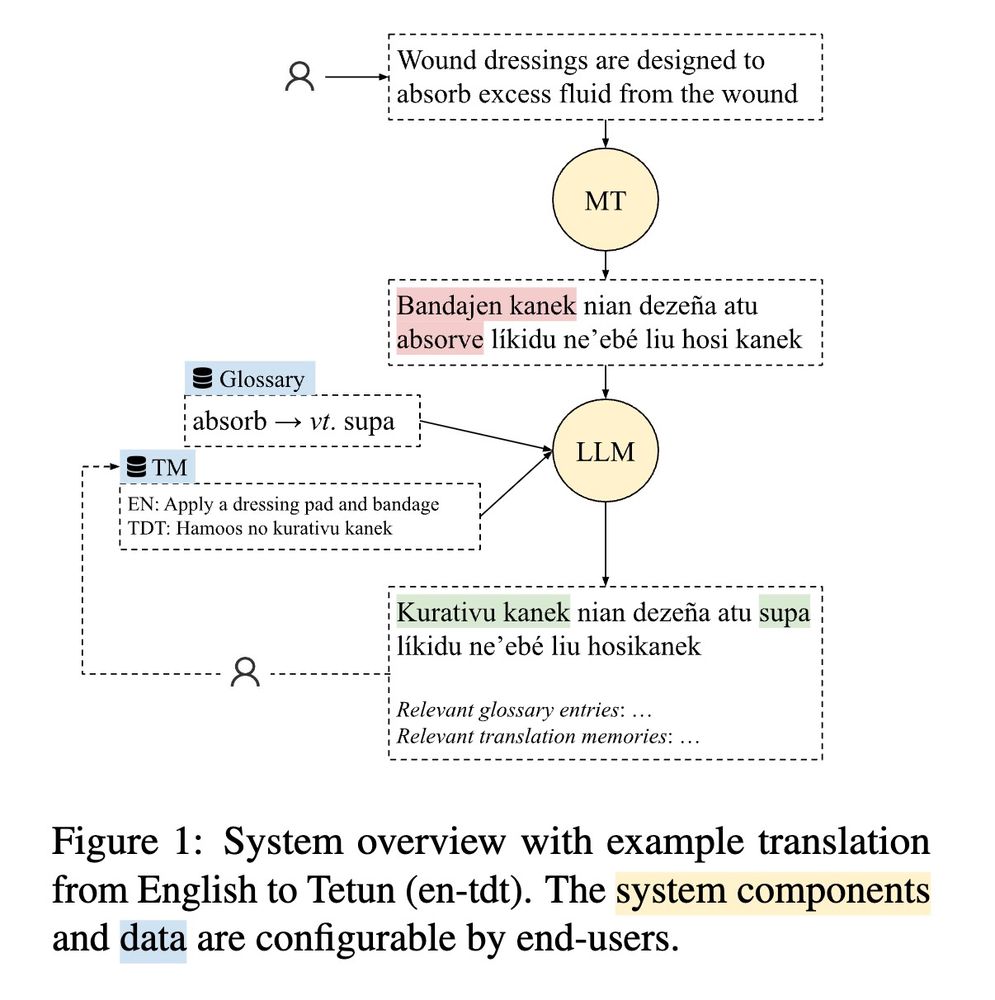

Working with 2 real use cases: medical translation into Tetun 🇹🇱 & disaster-relief speech translation in Bislama 🇻🇺

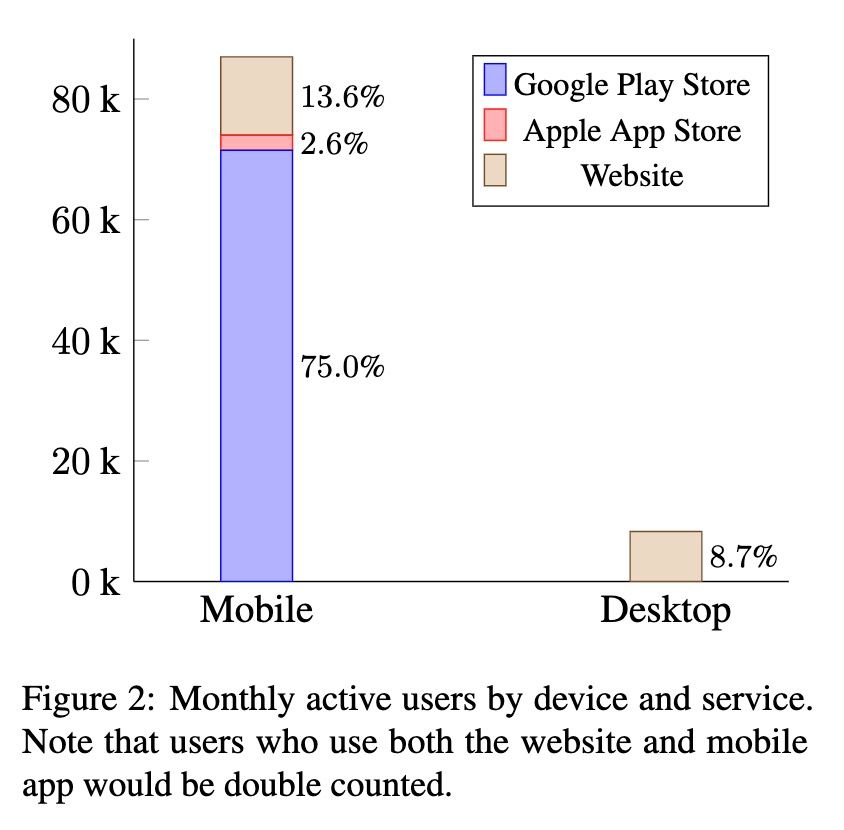

Takeaway: publishing MT models in mobile apps is probably more impactful than setting up a website / HuggingFace space.

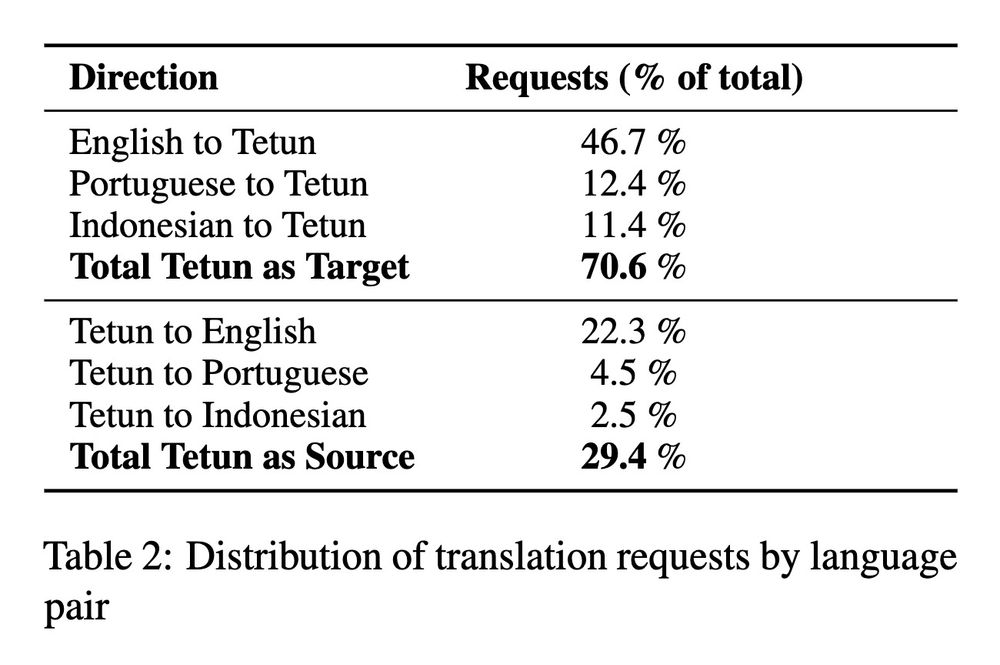

Takeaway for us MT folks: focus on translation into low-res langs, harder but more impactful

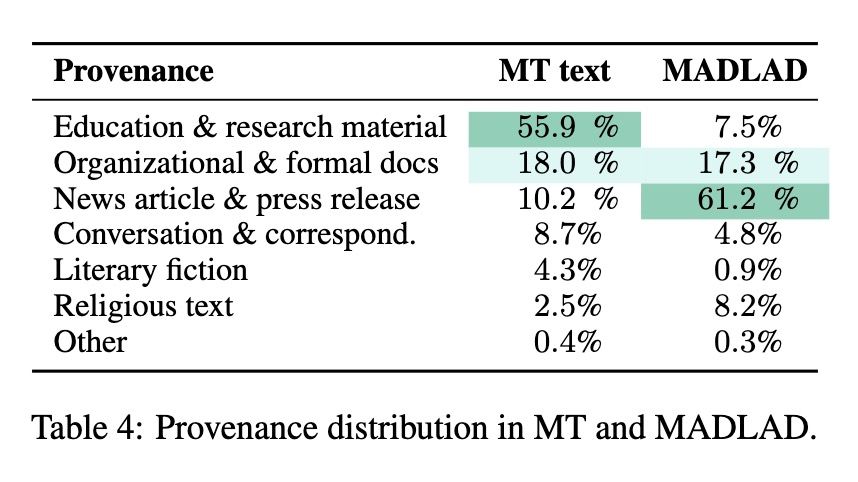

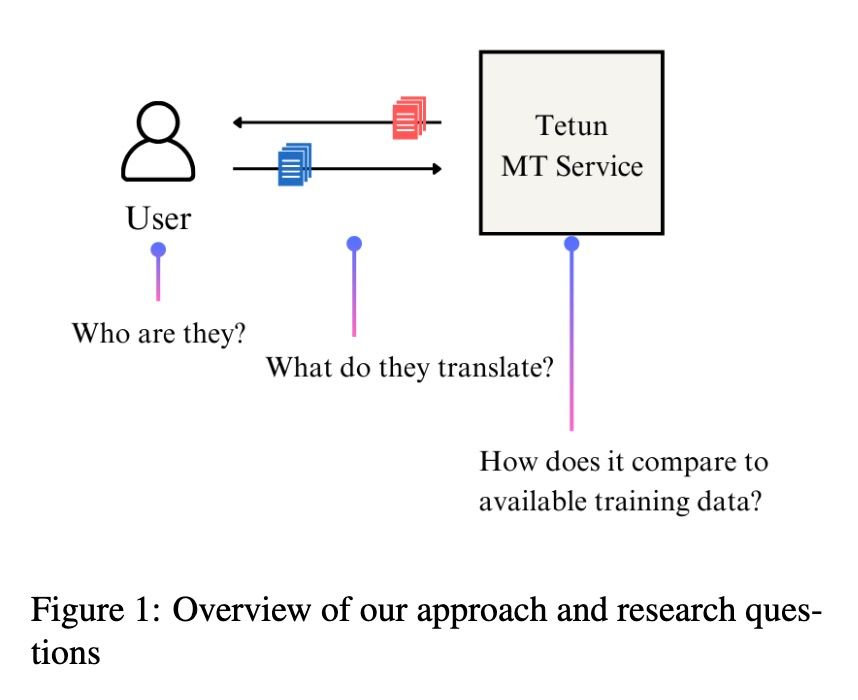

(1) a LOT of usage is for educational purposes (>50% of translated text)

--> contrasts sharply with Tetun corpora (e.g. MADLAD), dominated by news & religion.

Takeaway: don't evaluate MT only on overrepresented domains (e.g. religion)! You risk misrepresenting the end-user experience.
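Rough sketch of why this matters, assuming you already have a per-domain MT score (e.g. chrF) and an estimate of how real usage splits across domains. The helper `usage_weighted_score` and all numbers below are made up for illustration, not taken from the app data or any paper:

```python
def usage_weighted_score(domain_scores, domain_share):
    """Average per-domain MT scores, weighted by each domain's share."""
    missing = set(domain_share) - set(domain_scores)
    if missing:
        raise ValueError(f"no test data for domains: {sorted(missing)}")
    total = sum(domain_share.values())
    return sum(domain_scores[d] * w for d, w in domain_share.items()) / total


# Hypothetical per-domain scores and shares (illustration only).
domain_scores = {"education": 30.0, "news": 50.0, "religion": 60.0}
corpus_share = {"education": 0.1, "news": 0.5, "religion": 0.4}  # what the corpus looks like
usage_share = {"education": 0.6, "news": 0.3, "religion": 0.1}   # what users translate

corpus_weighted = usage_weighted_score(domain_scores, corpus_share)
usage_weighted = usage_weighted_score(domain_scores, usage_share)
print(f"corpus-weighted: {corpus_weighted:.1f}, usage-weighted: {usage_weighted:.1f}")
```

With these toy numbers the corpus-weighted average looks much better than the usage-weighted one, which is the gap a test set skewed towards news & religion would hide.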

In particular Fig. 2 + this discussion point:

arxiv.org/abs/2410.03182

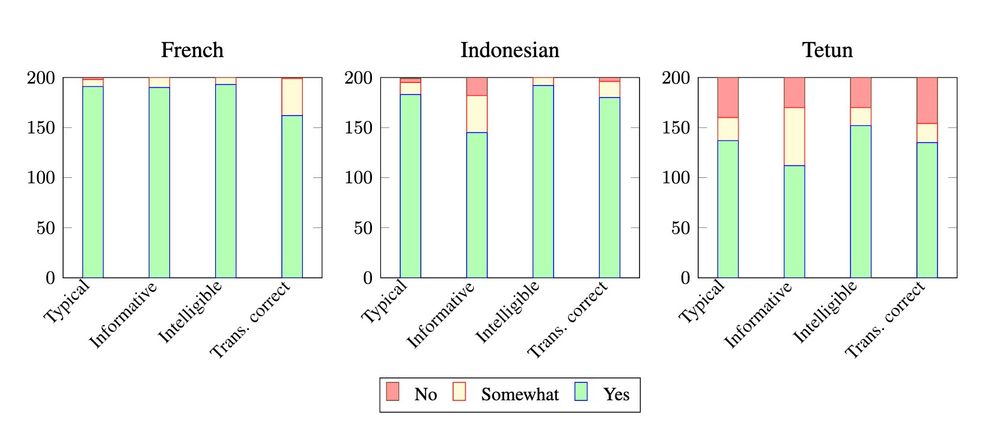

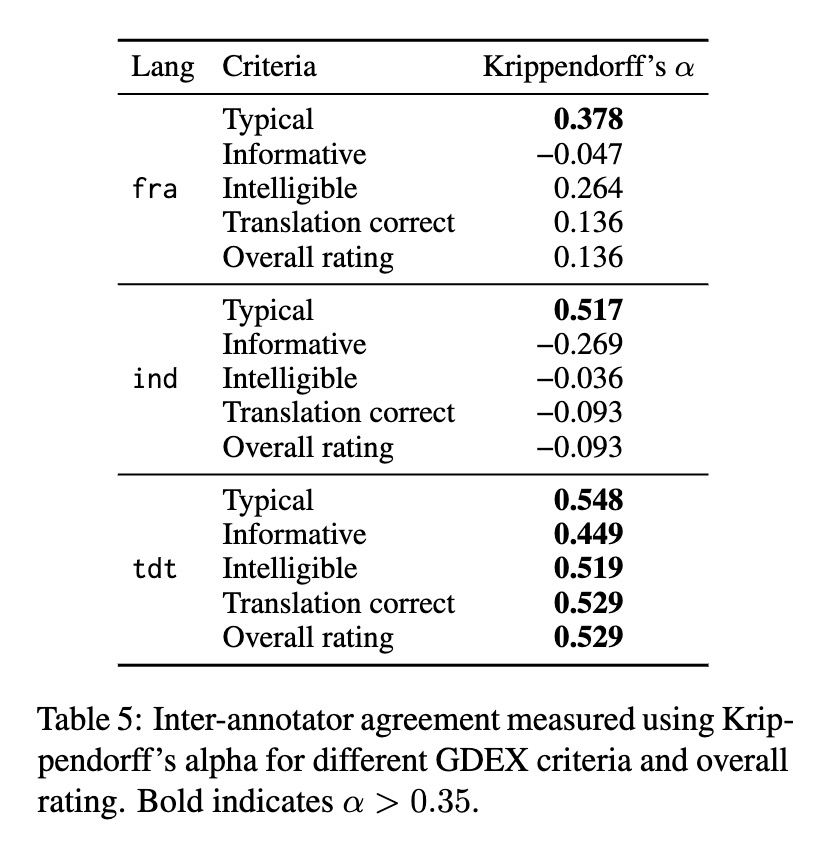

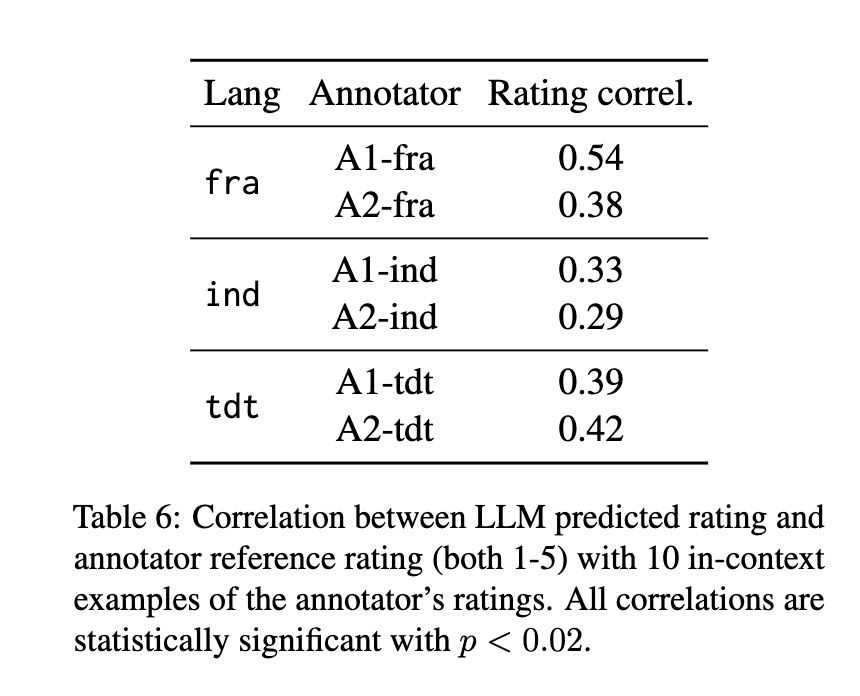

We work with French / Indonesian / Tetun, and find that annotators don't agree about what's a "good example", but that LLMs can align with a specific annotator.

arxiv.org/abs/2410.03182
