Arvind Narayanan

@randomwalker.bsky.social

Princeton computer science prof. I write about the societal impact of AI, tech ethics, & social media platforms. https://www.cs.princeton.edu/~arvindn/

BOOK: AI Snake Oil. https://www.aisnakeoil.com/

Happy to hear that AI Snake Oil was a finalist for the Association of American Publishers' 2025 PROSE award in the Computing and Information Sciences category.

March 6, 2025 at 12:02 PM

It should be no surprise that right now the feature is extremely brittle: news.ycombinator.com/item?id=4270...

This is not to say that the feature will never work well, just that they'll probably have to manually code a lot of logic into the Automations tool that the model uses on the backend.

January 15, 2025 at 2:31 PM

I doubt you can replicate all that simply by prompting a fancy model. Here's the prompt for the ChatGPT Tasks feature extracted by @simonwillison.net: simonwillison.net/2025/Jan/15/...

January 15, 2025 at 2:31 PM

For building scheduling functionality, handling edge cases isn't just an annoyance; it's the whole ball game. That's why it requires teams of engineers grinding it out. news.ycombinator.com/item?id=4270...

January 15, 2025 at 2:31 PM
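
To make the edge-case point concrete, here is a minimal sketch of one classic scheduling edge case: a task that repeats "monthly on the 31st" when the next month is shorter. The function name `next_monthly` and the clamping policy are assumptions chosen for illustration; nothing here describes how the ChatGPT Tasks feature actually works.

```python
import calendar
from datetime import date


def next_monthly(current: date, day_of_month: int) -> date:
    """Return the next monthly occurrence after `current`.

    Hypothetical policy: if the target month is too short for
    `day_of_month`, clamp to that month's last day.
    """
    # Roll over to January of the next year after December.
    if current.month == 12:
        year, month = current.year + 1, 1
    else:
        year, month = current.year, current.month + 1
    # monthrange returns (weekday of the 1st, number of days in the month).
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(day_of_month, last_day))


if __name__ == "__main__":
    print(next_monthly(date(2025, 1, 31), 31))
    print(next_monthly(date(2024, 1, 31), 31))
```

Clamping is only one possible policy. A scheduler could instead skip short months entirely, and time zones and daylight saving transitions add further cases that this sketch ignores.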

Really enjoyed "Things we learned about LLMs in 2024" by @simonwillison.net, especially this analogy between today's datacenter buildout and the 19th century railway boom. The parallels are striking. simonwillison.net/2024/Dec/31/...

January 1, 2025 at 4:00 PM

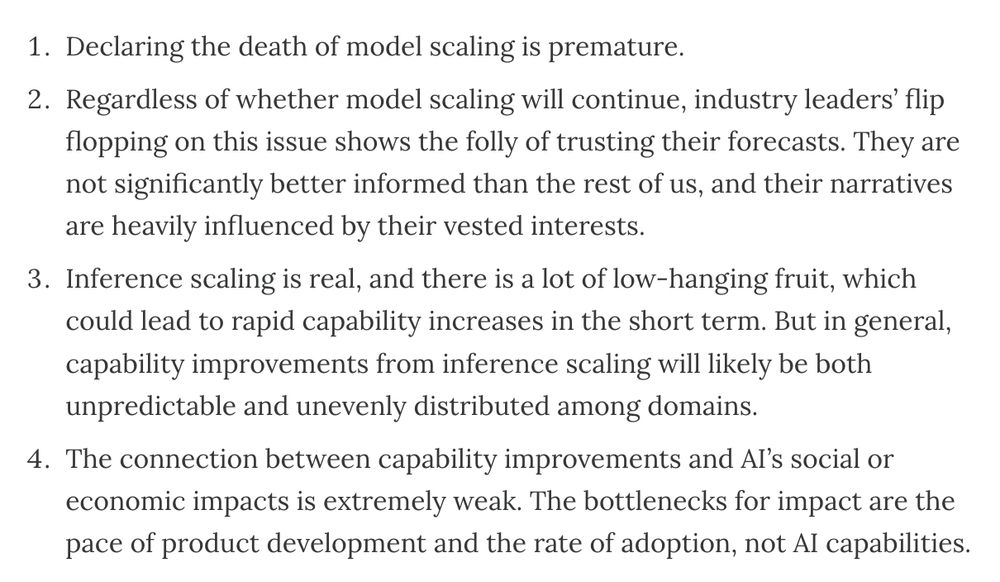

Example—Sutskever had an incentive to talk up scaling when he was at OpenAI and the company needed to raise. But now that he's running a startup with access to much less capital, he's talking about running out of pre-training data as if it were some epiphany and not an endlessly repeated point.

December 19, 2024 at 12:16 PM

New AI Snake Oil essay: Last month the AI industry's narrative suddenly flipped — model scaling is dead, but "inference scaling" is taking over. This has left people outside AI confused. What changed? Is AI capability progress slowing? We look at the evidence. 🧵 www.aisnakeoil.com/p/is-ai-prog...

December 19, 2024 at 12:16 PM

Excited to share that AI Snake Oil is one of Nature's 10 best books of 2024! www.nature.com/articles/d41...

The whole first chapter is available online:

press.princeton.edu/books/hardco...

We hope you find it useful.

December 18, 2024 at 12:12 PM

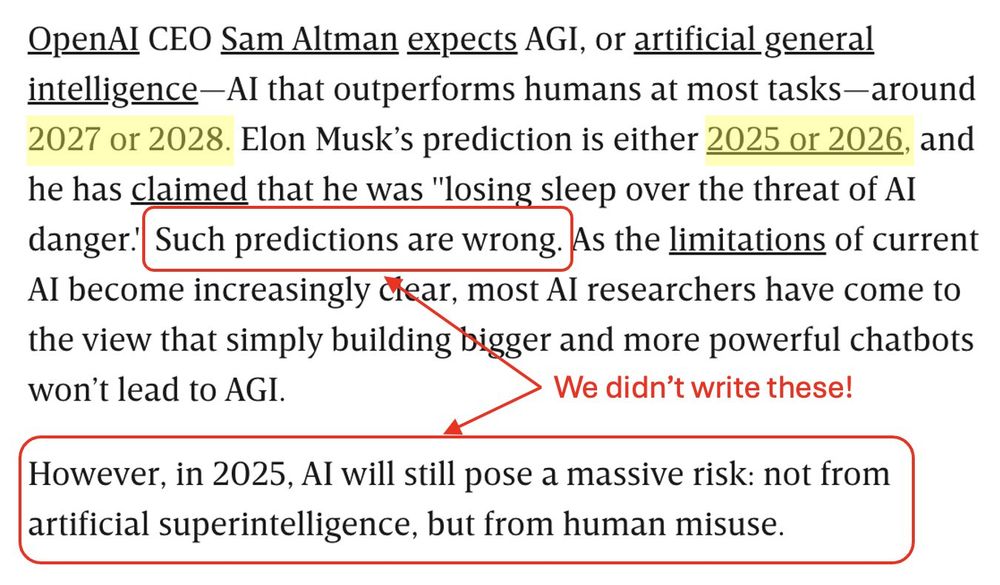

Needless to say, we disagree with that headline. It turns out to be an opinion piece we wrote for them a few months ago and they just published — with a bunch of changes they didn’t tell us about. wired.com/story/human-...

December 15, 2024 at 2:23 PM

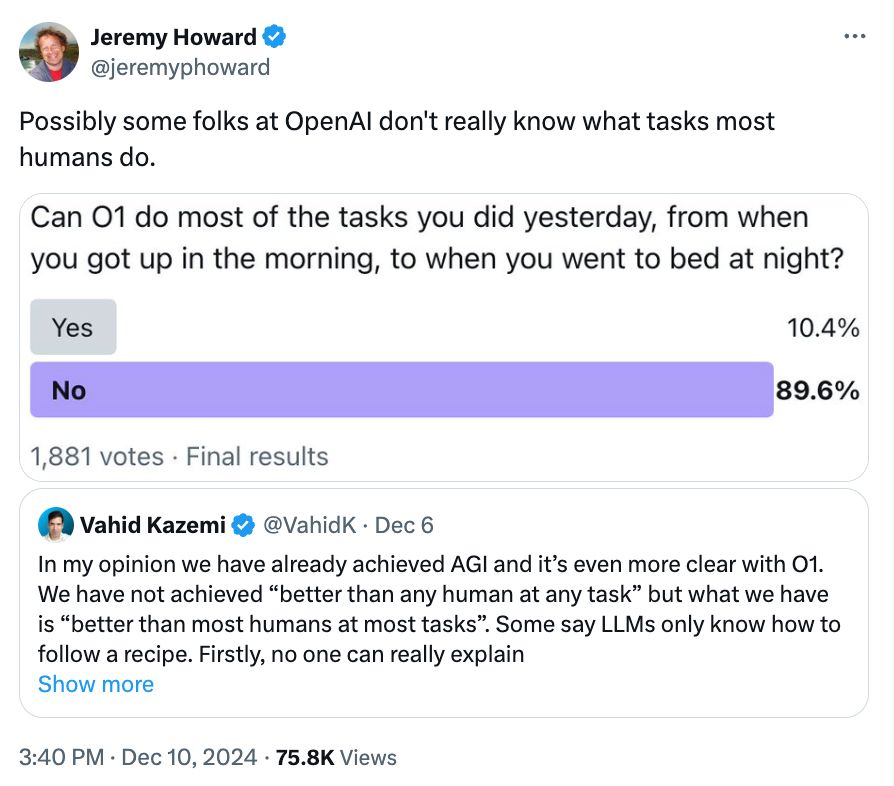

(3) Everyday people in many ways have a better understanding of AI limitations than AI developers in their bubble.

(4) Adoption metrics are far more informative than decontextualized capability measurements.

HT @howard.fm

December 11, 2024 at 12:58 PM

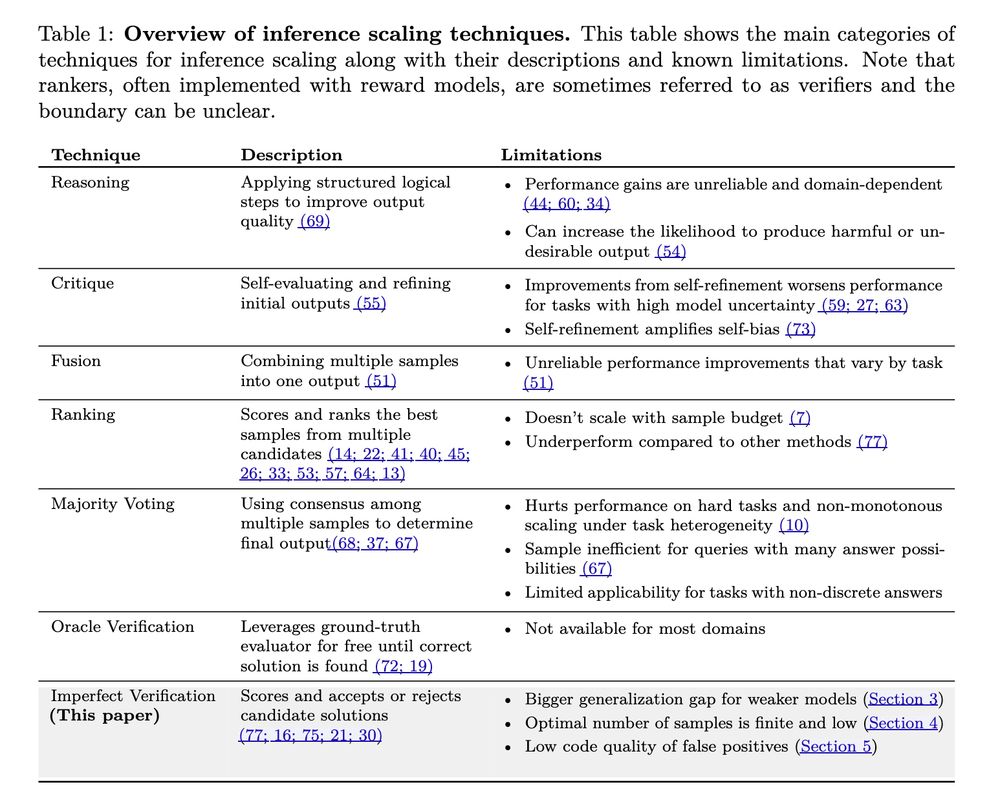

Many types of inference scaling techniques were already known to have limits. But resampling using verifiers seemed promising as a way to increase accuracy across many orders of magnitude of inference compute. The message of our paper is that this only works if the verifier is an oracle.

November 27, 2024 at 12:33 PM
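
The oracle point in the post above can be illustrated with a toy calculation. This is a simplified sketch, not the analysis from the paper: it assumes each sample is independently correct with probability `p`, that the verifier accepts correct samples with probability `true_positive_rate` and incorrect ones with probability `false_positive_rate`, and that the first accepted sample is returned (or nothing, counted as wrong, after `k` rejections). All names and parameters are hypothetical.

```python
def resampling_accuracy(p: float, false_positive_rate: float, k: int,
                        true_positive_rate: float = 1.0) -> float:
    """Probability that resampling with up to k attempts returns a correct answer."""
    # Chance that a single sample passes the verifier (correct or not).
    accept = p * true_positive_rate + (1 - p) * false_positive_rate
    if accept == 0:
        return 0.0
    reject = 1 - accept
    # Geometric series: the first accepted sample is correct with probability
    # p * true_positive_rate / accept, and some sample is accepted within
    # k attempts with probability 1 - reject**k.
    return (p * true_positive_rate / accept) * (1 - reject ** k)


if __name__ == "__main__":
    for k in (1, 10, 100, 1000):
        oracle = resampling_accuracy(0.2, 0.0, k)
        imperfect = resampling_accuracy(0.2, 0.1, k)
        print(f"k={k:5d}  oracle={oracle:.3f}  imperfect={imperfect:.3f}")
```

Under these assumptions, an oracle verifier (`false_positive_rate = 0`) lets accuracy approach 1 as `k` grows, while any nonzero false-positive rate caps accuracy at `p * true_positive_rate / accept` no matter how much inference compute is spent.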

New short paper on the limits of one type of inference scaling, by @benediktstroebl.bsky.social @sayash.bsky.social & me. The first page has the main findings and message. (The title is a play on Inference Scaling Laws.) More on the limits of inference scaling coming soon. arxiv.org/abs/2411.17501

November 27, 2024 at 12:27 PM

We're organizing a flagship conference at Princeton to help shape the agenda for the next decade of tech policy. We'll discuss various career paths and how you can make an impact.

Details + livestream: citp.princeton.edu/event/tech-p...

Register to attend in person: docs.google.com/forms/d/e/1F...

November 15, 2024 at 11:47 AM

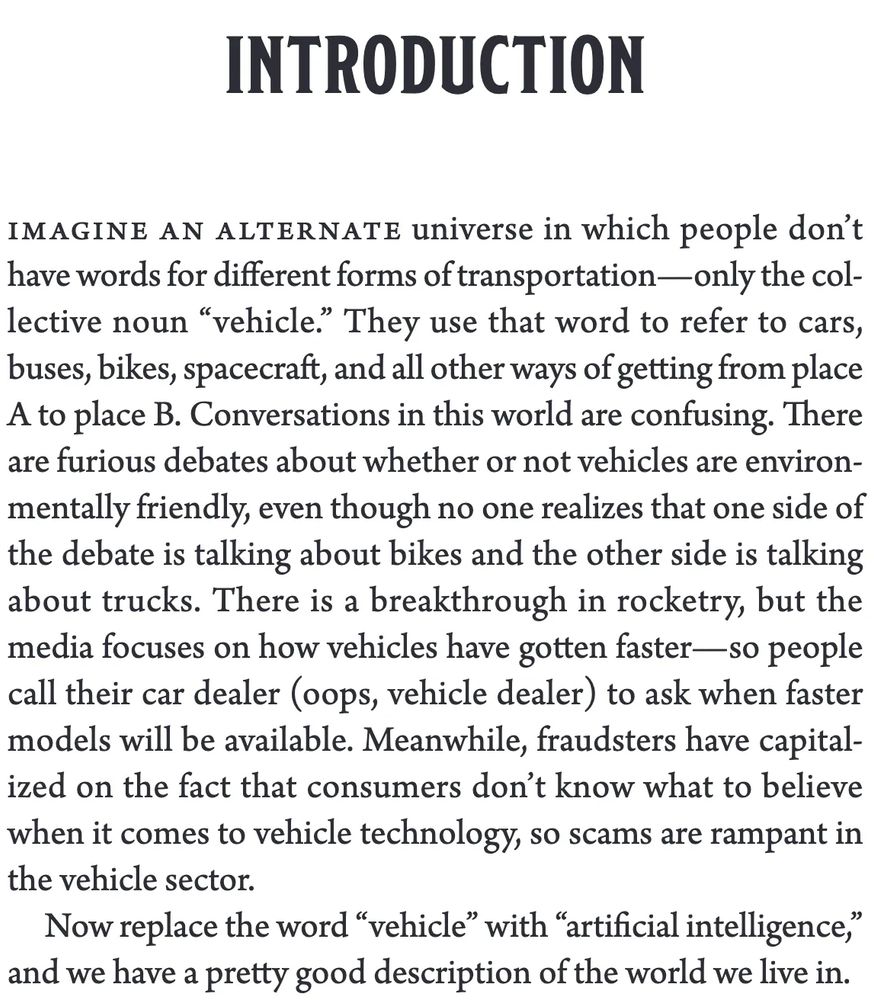

AI Snake Oil is out today! The book has been five years in the making. The first chapter is online. It is 30 pages long and summarizes the book’s main arguments. We're grateful for all the early interest and support. Let us know what you think of the book! www.aisnakeoil.com/p/starting-r...

November 15, 2024 at 11:47 AM

📢 The first chapter of the AI snake oil book by me and @sayash.bsky.social is now available online. press.princeton.edu/books/hardco...

It is 30 pages long and summarizes our main arguments. The book is available to preorder and will be published in less than two weeks. amazon.com/Snake-Oil-Ar...

November 15, 2024 at 11:47 AM

New on the AI Snake Oil blog: How will the Executive Order impact openness in AI? We did a deep dive. On balance, for now, the EO seems to be good news for those who favor openness in AI. www.aisnakeoil.com/p/what-the-e...

With @sayash.bsky.social and Rishi Bommasani.

November 15, 2024 at 11:47 AM

Jevons paradox makes it hard to predict the future impacts of AI. For example, more efficient GPUs might blunt the environmental impact. Or they might worsen it because people might start using AI for more things. There are too many unknowns that will determine which way the chips will fall.

![In economics, the Jevons paradox (/ˈdʒɛvənz/; sometimes Jevons effect) occurs when technological progress or government policy increases the efficiency with which a resource is used (reducing the amount necessary for any one use), but the falling cost of use induces increases in demand enough that resource use is increased, rather than reduced.[1][2][3] Governments typically assume that efficiency gains will lower resource consumption, ignoring the possibility of the paradox arising.[4]

In 1865, the English economist William Stanley Jevons observed that technological improvements that increased the efficiency of coal use led to the increased consumption of coal in a wide range of industries.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:h3imi5zmcoqinwouamcnuh7m/bafkreibn374lrr5duwzz2jvo7umxiaqmld4ov2dr7mmwyb3ubdrtfhh36i@jpeg)

November 15, 2024 at 11:47 AM
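
A small worked example shows why the direction of the effect is hard to call. The sketch below assumes a constant-elasticity demand curve, which is a textbook simplification and not something the post specifies; `efficiency_gain` and `demand_elasticity` are hypothetical parameters.

```python
def total_energy_ratio(efficiency_gain: float, demand_elasticity: float) -> float:
    """Ratio of total energy use after an efficiency gain to total use before it.

    Assumes the cost per use falls in proportion to the efficiency gain and
    that usage scales as cost ** (-demand_elasticity).
    """
    cost_ratio = 1 / efficiency_gain          # energy (and cost) per use
    usage_ratio = cost_ratio ** (-demand_elasticity)  # how much more it is used
    return cost_ratio * usage_ratio


if __name__ == "__main__":
    for elasticity in (0.5, 1.0, 1.5):
        ratio = total_energy_ratio(2.0, elasticity)
        print(f"elasticity={elasticity}: total energy x{ratio:.2f}")
```

In this toy model, doubling efficiency lowers total energy use when elasticity is below 1 and raises it when elasticity is above 1, which is the Jevons case. The hard part in practice is that nobody knows the elasticity of demand for AI in advance.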

Hello, world! My first post here. I'm trying to wrap my head around all the different federated social networking protocols / apps with their differing approaches to decentralization and their pros and cons. This is what I've got so far. Thoughts? Corrections? Additions?

November 15, 2024 at 11:47 AM
