Canada CIFAR AI Chair with Amii

Machine Learning and Program Synthesis

he/him; ele/dele 🇨🇦 🇧🇷

https://www.cs.ualberta.ca/~santanad

Preprint: arxiv.org/abs/2506.14162

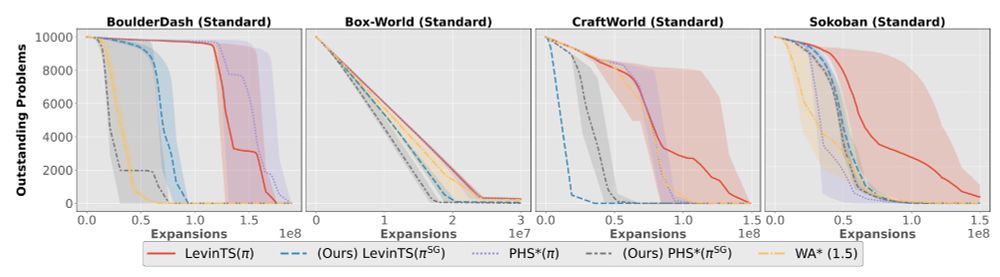

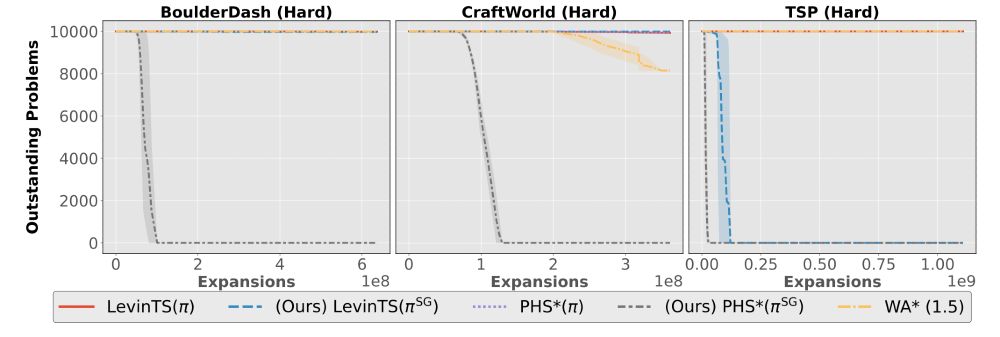

2. Can our search procedure find a policy that generalizes?

Rather than treating programmatic policies as the default, we should ask:

In a grid-world problem, we used the same sparse observation space as the programmatic policies, augmented with the agent's last action.

www.cs.utexas.edu/~swarat/pubs...

Also, the following paper covers most of the recent work on neuro-guided bottom-up synthesis algorithms:

webdocs.cs.ualberta.ca/~santanad/pa...
