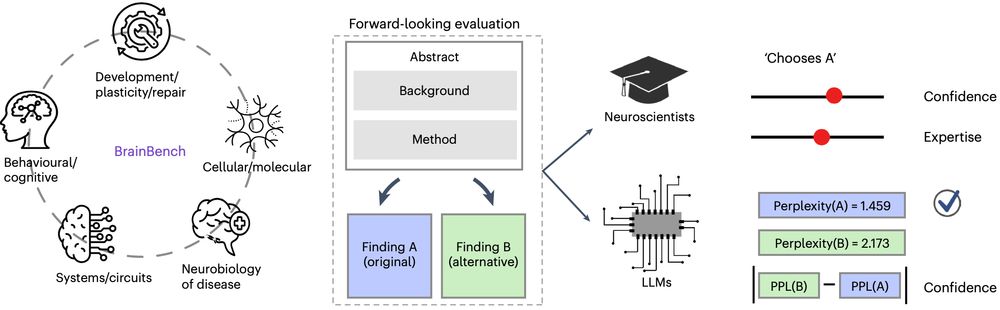

and braingpt.org. LLMs integrate a noisy yet interrelated scientific literature to forecast outcomes. nature.com/articles/s41... 1/8

and braingpt.org. LLMs integrate a noisy yet interrelated scientific literature to forecast outcomes. nature.com/articles/s41... 1/8