The paper is here: arxiv.org/pdf/2503.14499

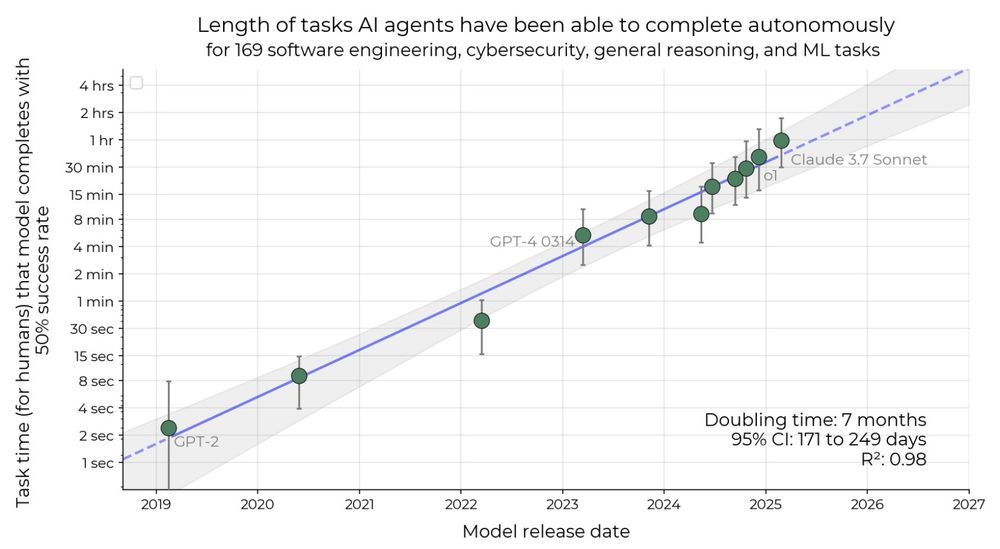

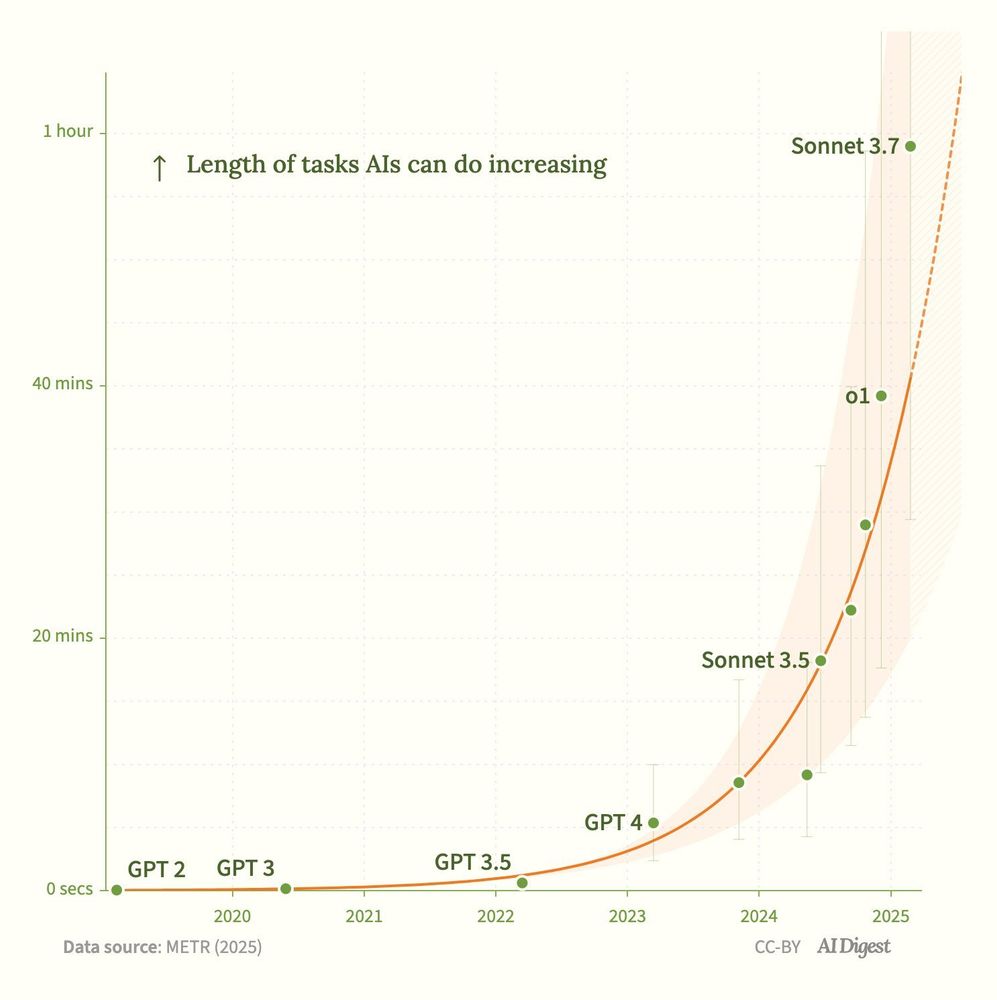

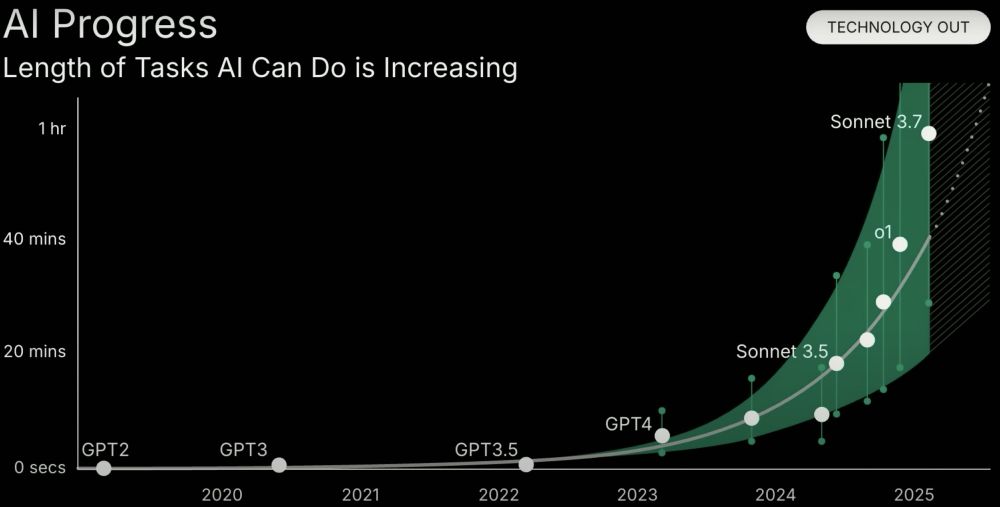

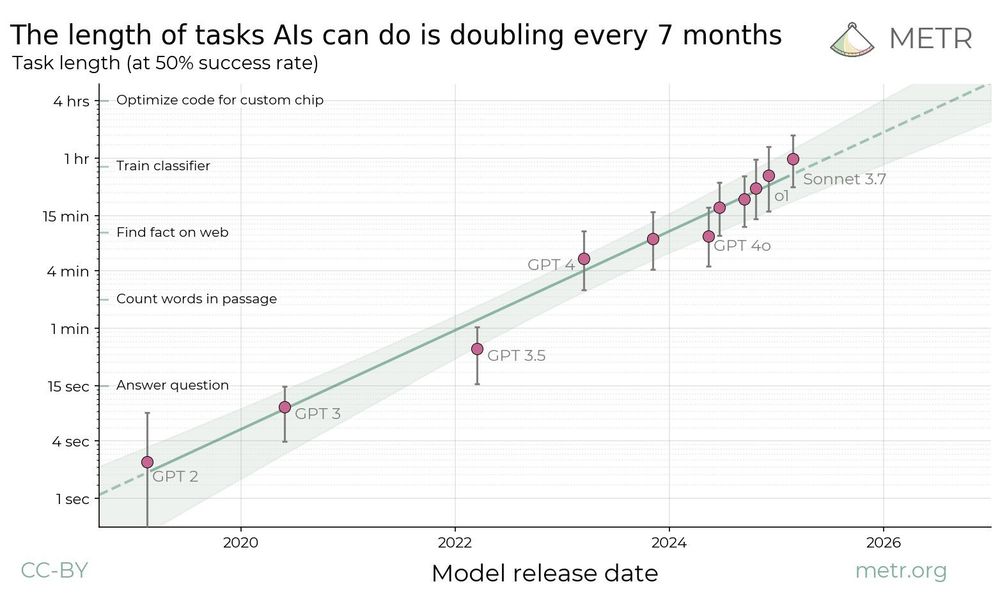

I think agentic AI may move faster than the METR trend, but we should report the data faithfully rather than over‑generalize to fit a belief we hold.

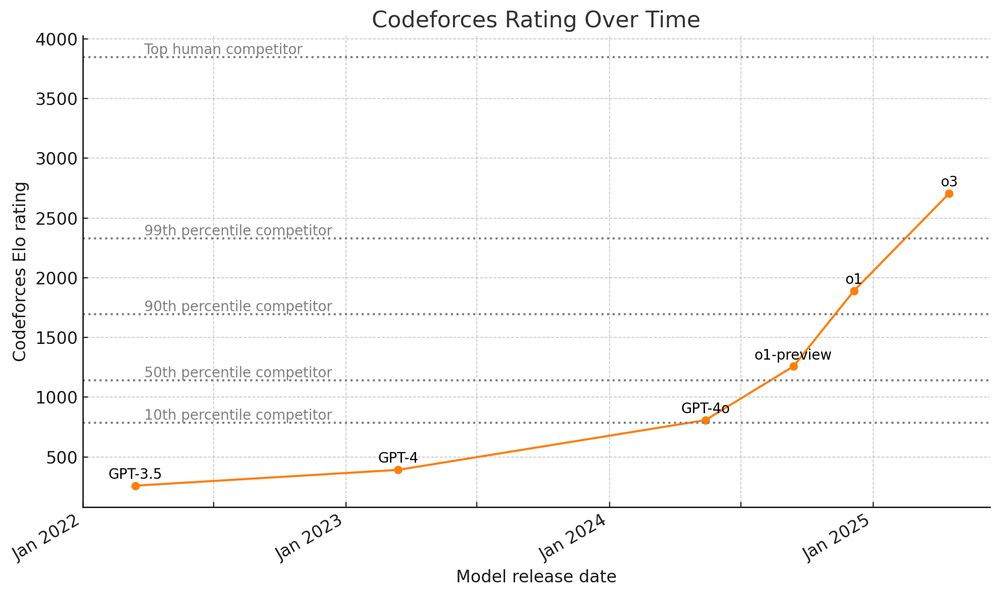

I know there are still a lot of benchmarks where progress is flat, but progress on Codeforces was quite flat for a long time too.

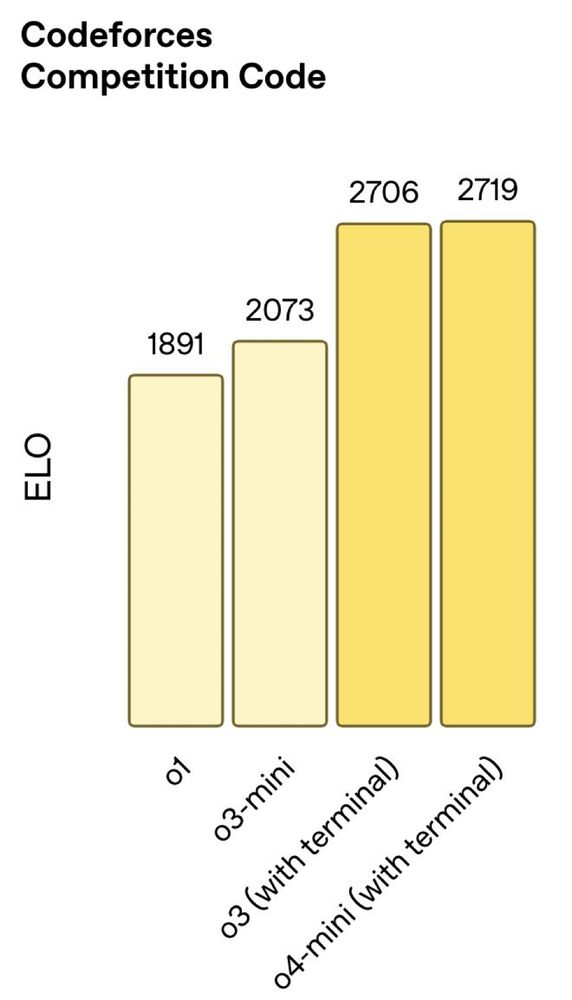

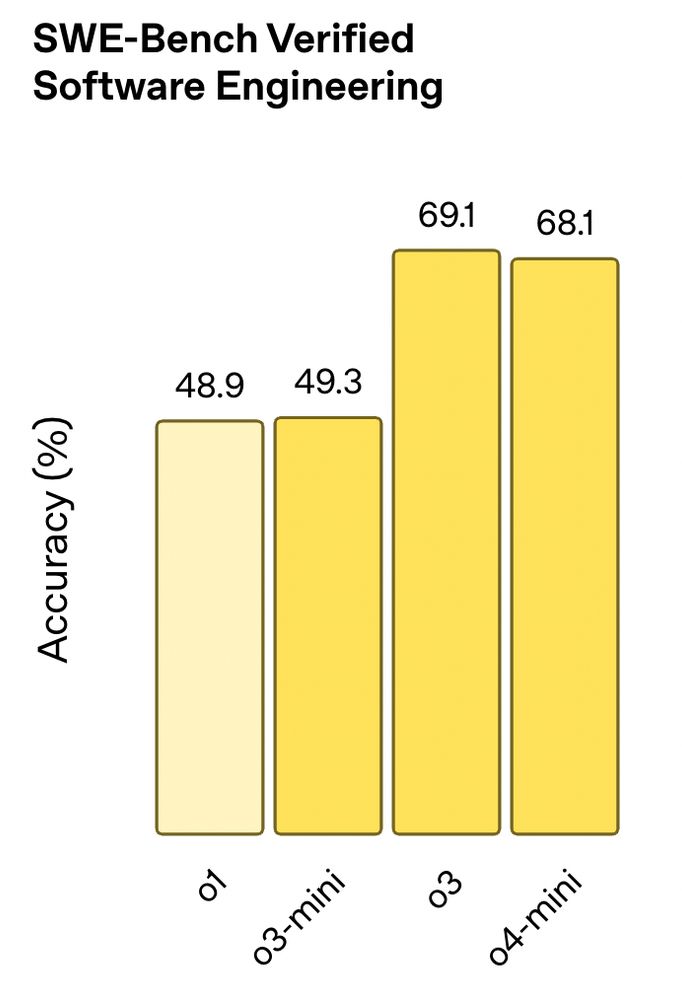

But what I'm most excited about is the stuff we can't benchmark. I expect o3/o4-mini will aid scientists in their research and I'm excited to see what they do!

(New reasoning models coming soon too.)

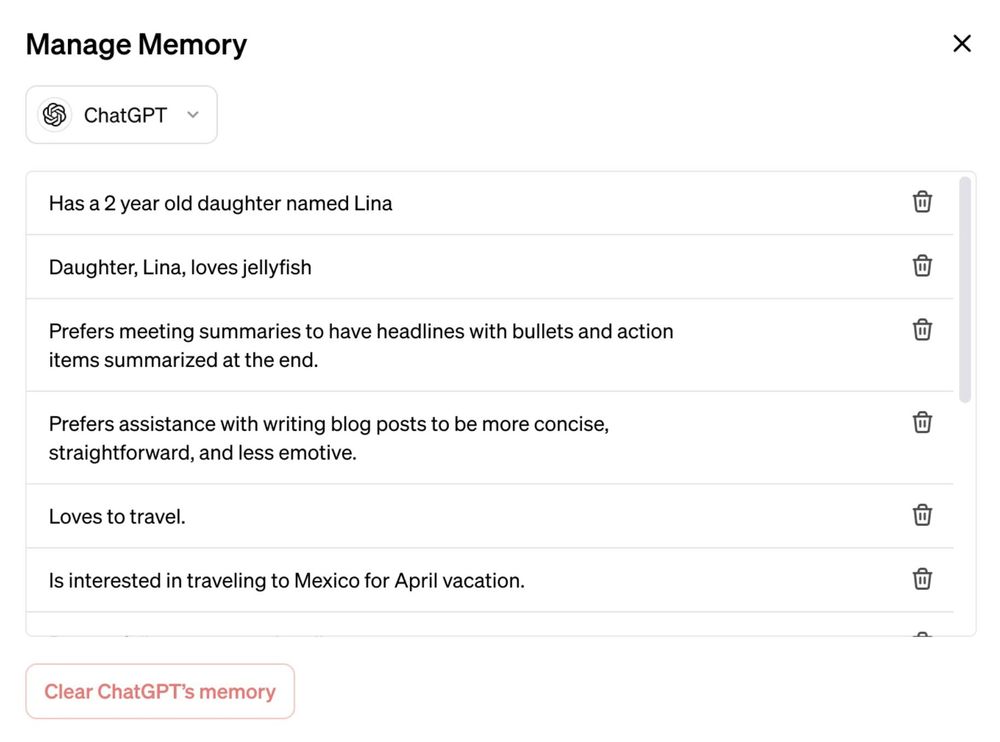

Still a lot of research to do, but it's a step toward fundamentally changing how we interact with LLMs: openai.com/index/memory...

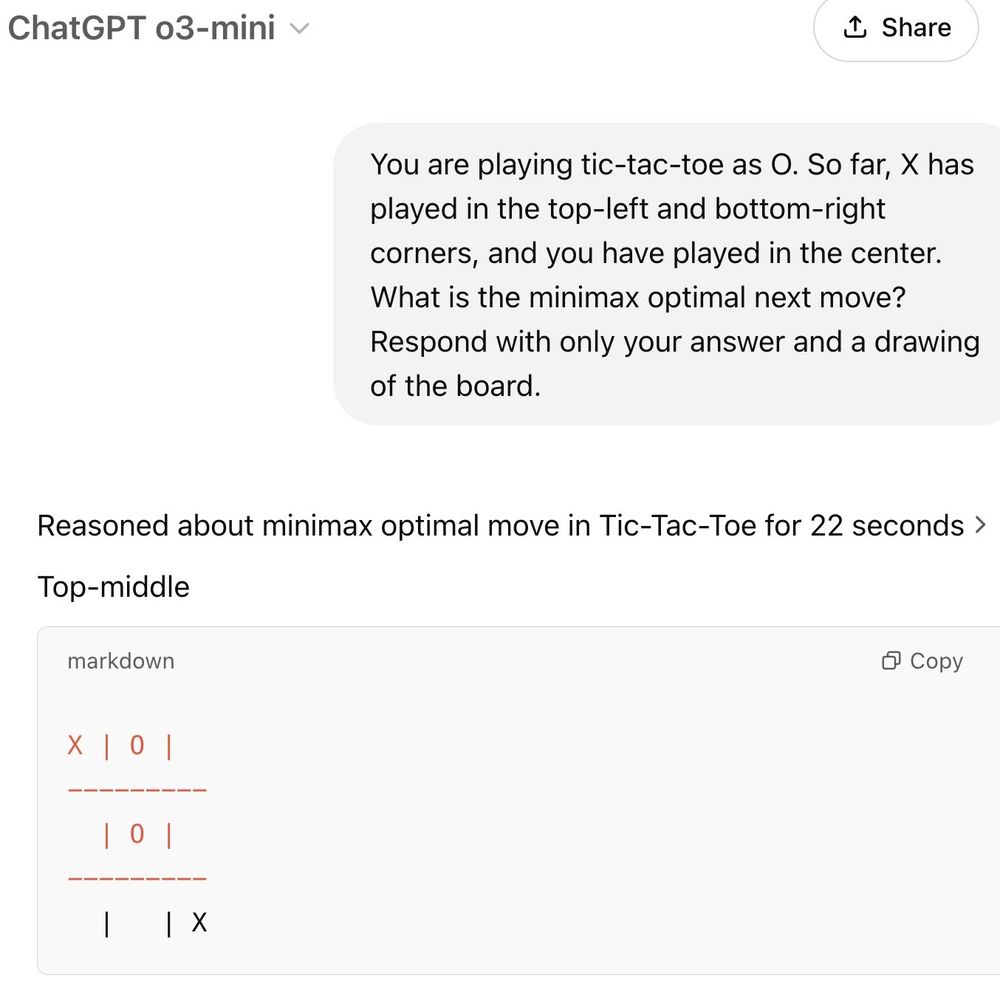

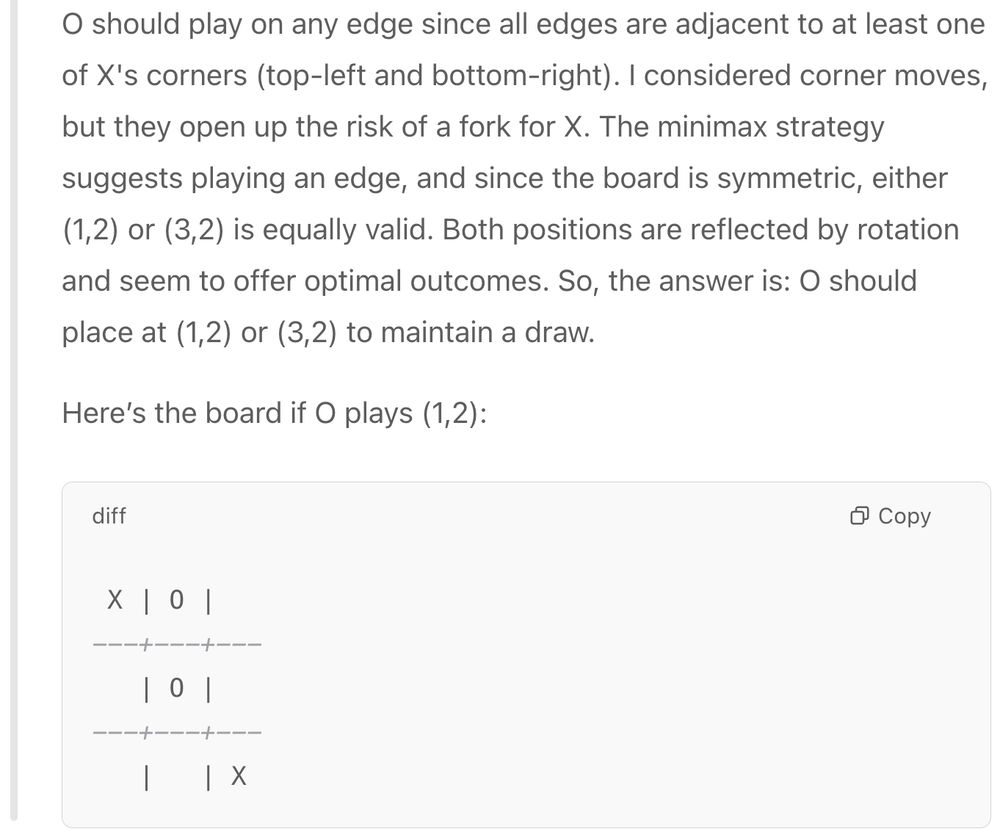

The summarized CoT is pretty unhinged, but you can see on the right that by the end it figures it out.