pietrolesci.github.io

Let’s talk about it and why it matters👇

@aclmeeting.bsky.social #ACL2025 #NLProc

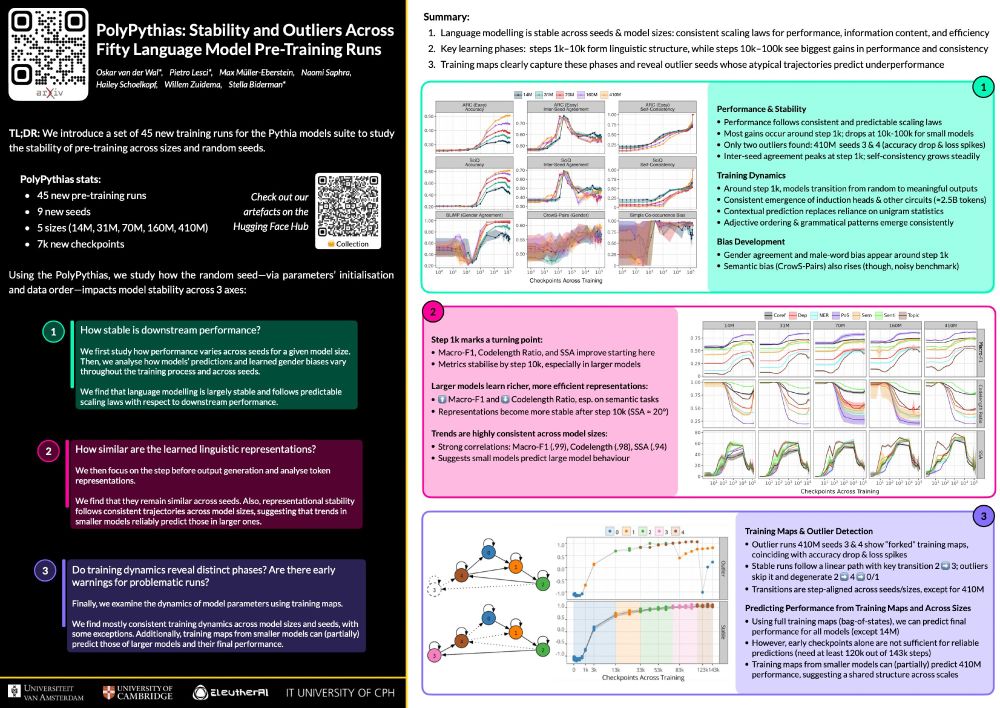

We’ll be presenting our paper on pre-training stability in language models and the PolyPythias 🧵

🔗 ArXiv: arxiv.org/abs/2503.09543

🤗 PolyPythias: huggingface.co/collections/...
