Former researcher at Stanford University

To generate ~50% of its Time Complexity Generation responses, QwQ takes ~30 min, whereas Llama 4 needs only a few dozen seconds 🥳.

🏆Leaderboard: facebookresearch.github.io/BigOBench/leaderboard.html

💻Github: github.com/facebookresearch/bigobench

🤗Huggingface: huggingface.co/datasets/facebook/BigOBench

📚ArXiv: arxiv.org/abs/2503.15242

It is best on Complexity Prediction tasks, where it even outperforms o1-mini! 🎉 But it falls behind on Generation and Ranking tasks.

Though our inference budget is large (enough for reasoning models like QwQ, R1 or Nemotron-Ultra 🥵), DeepCoder responses seemed to take even longer.

Nemotron-Ultra 253B displays high and consistent performance on BigO(Bench) (very often on the podium). It takes the lead on Space Complexity Generation and Ranking!🥳

📚ArXiv: arxiv.org/abs/2503.15242

🏡Homepage: facebookresearch.github.io/BigOBench

🤗Huggingface: huggingface.co/datasets/facebook/BigOBench

🧵6/6

👇Happy to provide details/help with any suggestion!

🧵5/6

These tasks usually require extensive reasoning skills; "thinking" steps easily take ~20k tokens.

🧵4/6

On Time Complexity Ranking, QwQ also beats the DeepSeekR1 distilled models by ~30% on the coeffFull metric, while being on par with them on Space Complexity Ranking.

🧵3/6

All these models have a similar number of active parameters, DeepSeekV3-0324 being an MoE with 37B active parameters.

Whereas DeepSeekR1 and QwQ use reasoning tokens (and therefore many more inference tokens), Gemma3 and DeepSeekV3-0324 output the result directly.

🧵2/6

📚ArXiv: arxiv.org/abs/2503.15242

🏡🏆Homepage: facebookresearch.github.io/BigOBench

💻Github: github.com/facebookresearch/bigobench

🤗Huggingface: huggingface.co/datasets/facebook/BigOBench

Do they really ‘think’ about notions they ‘know’, or do they merely memorize patterns of ‘thoughts’ during training?

any human programmer, who usually finds non-optimized solutions easily but struggles to find the best ones.

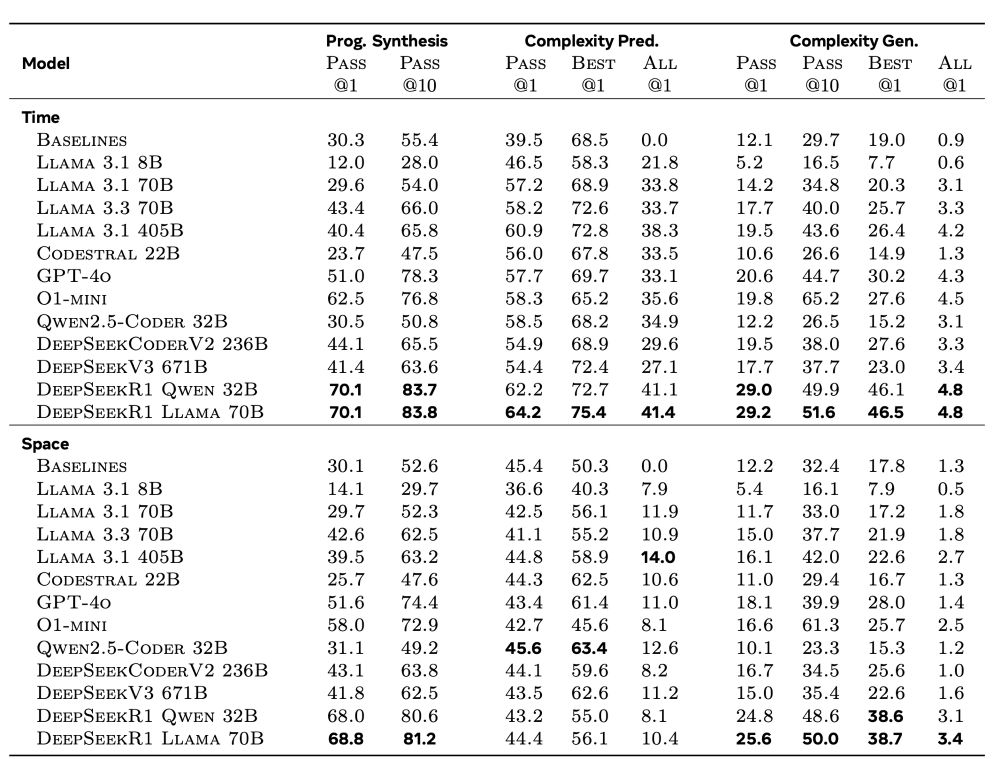

Token-space reasoning models perform best!

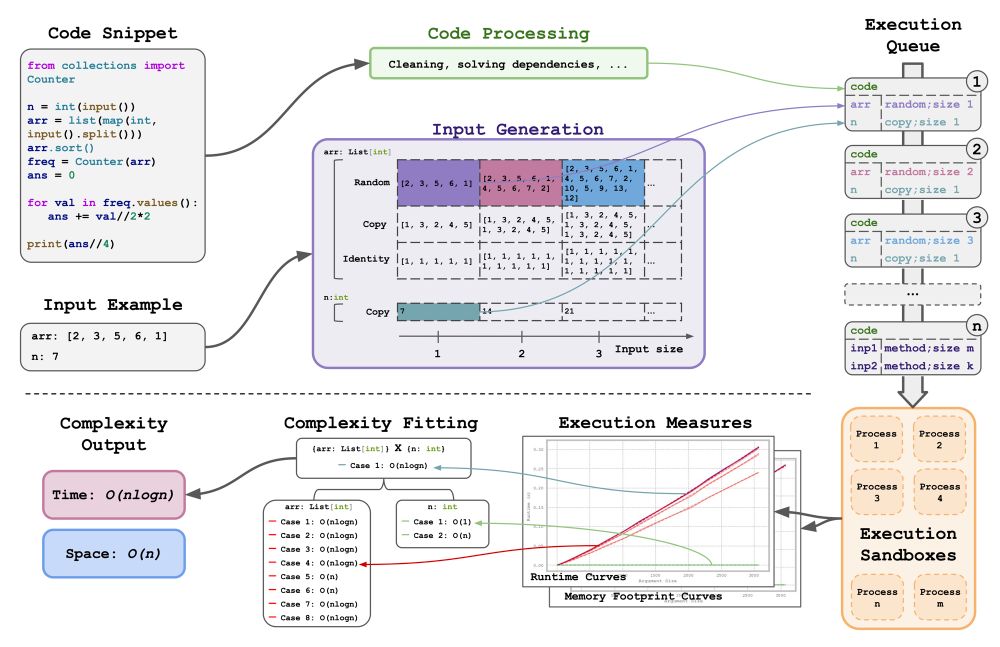

The framework ran on ~1M Code Contests solutions 👉 Data is public too!

Lastly, we designed test sets and evaluated LLMs 👉 Leaderboard is out!
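The thread does not explain how the framework infers a solution's complexity. As a generic illustration only, and not the BigO(Bench) method, one simple way to estimate a polynomial degree is to measure cost at growing input sizes and fit the slope on a log-log scale. The sketch below assumes a deterministic, hypothetical cost model (`count_pair_comparisons`) in place of wall-clock timings, which keeps the fit reproducible.

```python
import math
from typing import Callable, Sequence


def count_pair_comparisons(n: int) -> int:
    """Hypothetical cost model: pair comparisons made by an all-pairs scan
    over n elements when no early exit happens (n choose 2)."""
    return n * (n - 1) // 2


def estimate_exponent(cost: Callable[[int], float], sizes: Sequence[int]) -> float:
    """Least-squares slope of log(cost(n)) against log(n).

    For a cost growing like c * n**k, the slope approaches k: a value near 1
    suggests O(n) and a value near 2 suggests O(n^2). Logarithmic or
    exponential costs do not fit this power-law model."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(cost(n)) for n in sizes]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


if __name__ == "__main__":
    sizes = [1_000, 2_000, 4_000, 8_000, 16_000]
    print(round(estimate_exponent(count_pair_comparisons, sizes), 2))  # close to 2
    print(round(estimate_exponent(lambda n: 3 * n + 5, sizes), 2))  # close to 1
```

Real runtime measurements are noisy, and separating classes such as O(n) from O(n log n) takes more than a single slope. This only shows the basic idea of fitting growth against input size.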

We investigate the performance of LLMs on 3 tasks:

✅ Time/Space Complexity Prediction

✅ Time/Space Complexity Generation

✅ Time/Space Complexity Ranking
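To make the three tasks concrete, here is a hypothetical toy problem (not taken from BigO(Bench)) with two correct solutions of different complexity. In prediction, a model would label each function's time and space complexity. In generation, it would write a solution that meets a requested bound, such as O(n) time. Ranking, loosely, compares solutions by efficiency. The paper gives the exact task definitions.

```python
# Hypothetical toy problem (not taken from BigO(Bench)):
# does any pair of distinct positions in `nums` sum to `target`?


def has_pair_quadratic(nums: list[int], target: int) -> bool:
    """Time O(n^2), extra space O(1): compare every pair of positions."""
    n = len(nums)
    for i in range(n):
        for j in range(i + 1, n):
            if nums[i] + nums[j] == target:
                return True
    return False


def has_pair_linear(nums: list[int], target: int) -> bool:
    """Time O(n) on average, extra space O(n): remember values seen so far."""
    seen: set[int] = set()
    for x in nums:
        if target - x in seen:  # average O(1) set lookup
            return True
        seen.add(x)
    return False


if __name__ == "__main__":
    nums = [3, 9, 14, 20, 27]
    print(has_pair_quadratic(nums, 23), has_pair_linear(nums, 23))  # True True (9 + 14)
    print(has_pair_quadratic(nums, 100), has_pair_linear(nums, 100))  # False False
```

Neither version is strictly better. The linear one spends O(n) extra memory to save time, which is the kind of time/space trade-off that separate Time and Space tasks can expose.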
