Oscar Mañas

@oscmansan.bsky.social

Research scientist at Meta, PhD candidate at Mila and Université de Montréal. Working on multimodal vision+language generation. Catalan in Zurich.

Headed to @cvprconference.bsky.social in Nashville! I'll be presenting our work on Multimodal Reward-guided Decoding. Let's connect if you're around!

June 10, 2025 at 6:21 PM

TFW you find a memory leak in your code two days before the rebuttal's deadline

May 15, 2025 at 2:18 PM

Heading to Singapore for the next 1.5 weeks for @iclr-conf.bsky.social. If you're around and want to meet up, hit me up!

April 21, 2025 at 11:49 PM

Reposted by Oscar Mañas

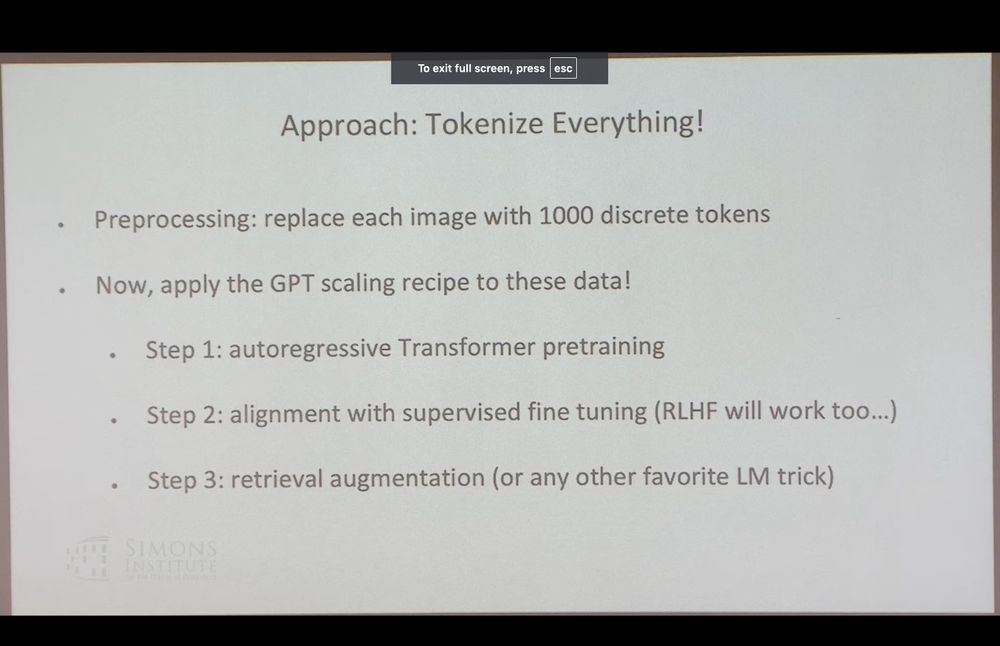

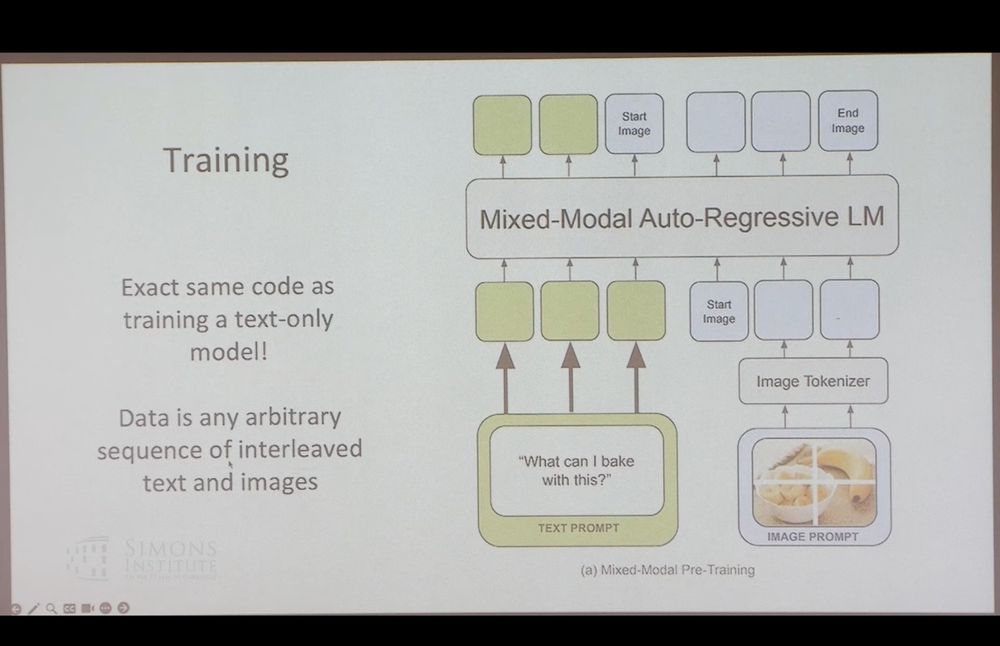

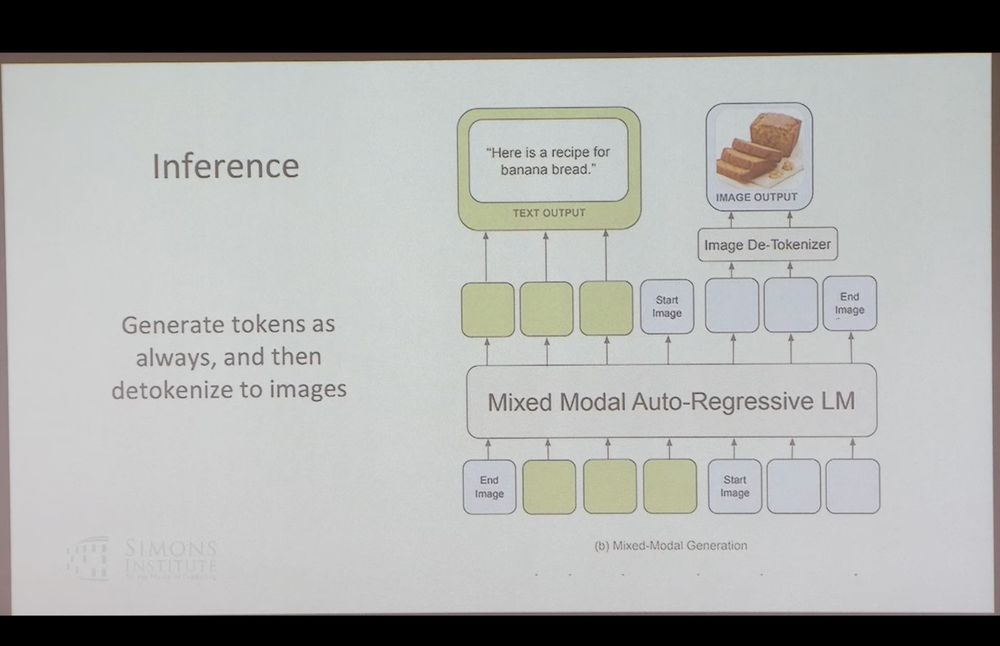

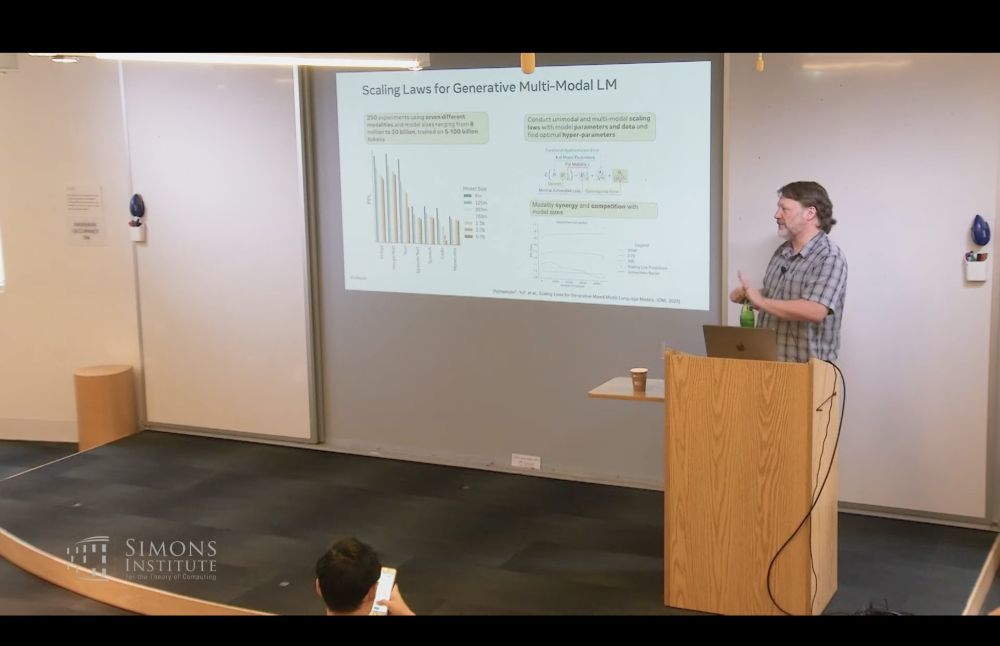

"Tokenize Everything!" Luke Zettlemoyer of

@uofwa.bsky.social on using GPT-like autoregressive techniques for training multimodal models (text, images, audio etc.) at the Simons Institute workshop on The Future of Language Models and Transformers simons.berkeley.edu/workshops/fu...

April 1, 2025 at 8:55 PM

I quite like this analogy by Oriol Vinyals:

* LLM ~= core electric brain

* Agent ~= LLM with a digital body

youtu.be/78mEYaztGaw

Gemini 2.0 and the evolution of agentic AI with Oriol Vinyals

YouTube video by Google DeepMind

December 22, 2024 at 1:32 AM

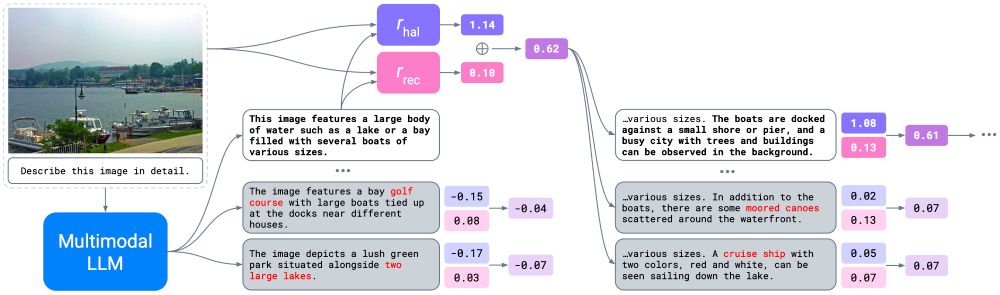

Curious about how to effectively steer the behavior of multimodal LLMs during inference to improve their visual grounding?

Join me today at 4:30pm at the AFM workshop at @NeurIPSConf, where I'll be presenting a poster on my work. Come by to learn more!

openreview.net/forum?id=VWJ...

December 14, 2024 at 6:18 PM

Tomorrow at 3:15pm I'll be presenting my work at @mila-quebec.bsky.social's booth (#104) at @neuripsconf.bsky.social. Come to learn more about controlling multimodal LLMs via reward-guided decoding!

🔗 openreview.net/forum?id=VWJ...

Controlling Multimodal LLMs via Reward-guided Decoding

As Multimodal Large Language Models (MLLMs) gain widespread applicability, it is becoming increasingly desirable to adapt them for diverse user needs. In this paper, we study the adaptation of...

December 10, 2024 at 3:04 AM

Reposted by Oscar Mañas

All this being said, Meta/FAIR remains the only place where you can do open AI research with a group of stellar colleagues ten times larger than any university + big-tech-level computational capabilities.

December 5, 2024 at 5:48 PM
